Non-line-of-sight imaging turns the corner to usability

The quest for optics to see around corners is an old one. Polish astronomer Johannes Hevelius made a primitive periscope in 1647, but not until 1854 did French inventor Hippolyte Marié-Davy invent a naval version. Trying to view an out-of-sight instrument dial in 1926 led RCA radio engineer Clarence W. Hansell to invent the flexible fiber-optic bundle for imaging. What’s now called non-line-of-sight (NLOS) imaging has become a hot topic in photonics, and shows up on the wish lists of military planners trying to defend against insurgents lurking in old buildings waiting to attack troops.

The modern quest is not to invent a new type of periscope, but to peer around corners into hidden areas not directly viewable with mirrors or other conventional optics that see only objects in the line of sight. The hot new approach that revived the quest is directing very short pulses of light at a “relay wall” visible from outside so the light is scattered through the hidden area and illuminates objects out of direct sight. Those hidden objects then scatter some light back to the relay wall, where it can be observed from outside. Using ultrashort pulses and ultrafast detectors makes it possible to measure the time of flight of the scattered light, and those measurements could yield enough information to map what is in the hidden area.

How it works

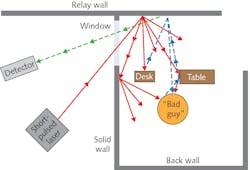

Like a lidar, the NLOS system starts by firing a short pulse of light, but the light is directed at a point on the relay wall which will scatter the light into the hidden zone. Triggering the pulse also triggers a detection system that watches another point on the wall for light scattered from the hidden area. Objects in the hidden zone then scatter the light from the relay wall; some of the scattered light goes directly to the wall, but other light scatters to other hidden objects, which scatter some light back to the wall. As in lidar, only a very small fraction of the photons in the original pulse wind up in the return signal sensed by the detector, which records their time of flight from the start of the pulse.
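The timing geometry described above can be sketched in a few lines. This is a minimal sketch, assuming straight-line propagation at the vacuum speed of light and a single point-like hidden reflector; the function names and example coordinates are hypothetical. It computes when a three-bounce photon (wall, object, wall) reaches the detector, then inverts a measured time of flight into the path length spent inside the hidden area by subtracting the two known legs.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def three_bounce_tof(laser, laser_spot, hidden, detect_spot, detector):
    """Time of flight (s) for laser -> wall -> hidden point -> wall -> detector."""
    path = (math.dist(laser, laser_spot)         # laser to relay wall (known leg)
            + math.dist(laser_spot, hidden)      # wall into the hidden area
            + math.dist(hidden, detect_spot)     # hidden object back to the wall
            + math.dist(detect_spot, detector))  # wall to detector (known leg)
    return path / C


def hidden_path_from_tof(tof, laser, laser_spot, detect_spot, detector):
    """Subtract the two known legs to get the path length (m) inside the hidden area."""
    return C * tof - math.dist(laser, laser_spot) - math.dist(detect_spot, detector)


# Hypothetical geometry in metres: relay wall is the plane z = 0, hidden area at z > 0.
laser, detector = (0.0, 0.0, -2.0), (0.0, 0.0, -2.0)
laser_spot, detect_spot = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)
hidden = (0.5, 0.0, 1.0)

tof = three_bounce_tof(laser, laser_spot, hidden, detect_spot, detector)
print(f"arrival time: {tof * 1e9:.2f} ns")
print(f"hidden path: {hidden_path_from_tof(tof, laser, laser_spot, detect_spot, detector):.3f} m")
```

Every hidden point with the same hidden path length produces the same arrival time, so one measurement only places the object somewhere on an ellipsoid whose foci are the two wall spots. That ambiguity is why data from many wall positions are needed.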

An imaging processor collects the return signals, their location on the wall, and their time of flight through the hidden area. Reconstruction algorithms then trace the paths the light took inside the hidden area, including where it encountered objects that scattered it back to the relay wall. The processing system combines data from a series of pulses scattered by the relay wall into the hidden area to build up a digital 3D map of the objects in the room. Think of the whole imaging system as a virtual camera that reconstructs the scene computationally; Figure 1 shows how the system works.
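One simple way to turn such timing data into a map is ellipsoidal back-projection, one of the back-projection methods mentioned later in this article; it is shown here as a generic illustration, not as the specific algorithm of any group named. Each measured hidden path length constrains the reflector to an ellipse (in 2D) whose foci are the illuminated and observed wall spots, so every candidate voxel near that ellipse gets a vote, and votes from many wall positions pile up at the true location. This minimal sketch assumes a 2D scene, one hidden point, and noiseless path lengths with the known laser and detector legs already subtracted; all scene values are hypothetical.

```python
import numpy as np

# Hypothetical 2D scene (metres): relay wall along z = 0, hidden point reflector at (0.3, 0.8).
laser_spot = np.array([0.0, 0.0])
detect_spots = np.stack([np.linspace(-1.0, 1.0, 21), np.zeros(21)], axis=1)
hidden = np.array([0.3, 0.8])

# Simulated measurements: hidden path length laser_spot -> hidden -> detect_spot for each spot.
path_lengths = (np.linalg.norm(hidden - laser_spot)
                + np.linalg.norm(detect_spots - hidden, axis=1))


def backproject(laser_spot, detect_spots, path_lengths, xs, zs, tol=0.01):
    """Count, for every grid voxel, how many measured ellipses pass within tol of it."""
    X, Z = np.meshgrid(xs, zs, indexing="ij")
    votes = np.zeros(X.shape, dtype=int)
    d_laser = np.hypot(X - laser_spot[0], Z - laser_spot[1])
    for (dx, dz), length in zip(detect_spots, path_lengths):
        d_detect = np.hypot(X - dx, Z - dz)
        votes += np.abs(d_laser + d_detect - length) < tol
    return votes


xs = np.linspace(-1.0, 1.0, 201)  # 1 cm voxels across the hidden area
zs = np.linspace(0.1, 1.5, 141)   # depth behind the wall
votes = backproject(laser_spot, detect_spots, path_lengths, xs, zs)
ix, iz = np.unravel_index(votes.argmax(), votes.shape)
print(f"best voxel: x = {xs[ix]:.2f} m, z = {zs[iz]:.2f} m, votes = {votes[ix, iz]}")
```

Practical back-projection typically works in 3D, weights the votes, and sharpens the blurry result with a filter, but the voting idea is the same.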

Ramesh Raskar’s Camera Culture group at the MIT Media Lab (Cambridge, MA) developed and published the concept in 2009.1 Andreas Velten, then at MIT and now a professor at the University of Wisconsin (Madison, WI), went on to demonstrate the concept. In 2012, he and colleagues reported using a streak camera to record the returned signal and processing the streak-camera images to reconstruct a three-dimensional image of a small mannequin inside the hidden volume.2 The scattering process destroyed most of the spatial information in the light, but capturing ultrafast time-of-flight information allowed them to compensate for the loss.

Their demonstration was impressive, yet it was limited to collecting photons that had made only three scattering bounces: from the relay wall to the surface of the object facing the wall, from the object back to the wall, and from the wall to the streak camera. That could reveal only the side of the mannequin facing the relay wall, albeit in three dimensions. Some light did go on to scatter off other surfaces of the mannequin or other objects in the hidden area, but each scattering event was so inefficient that few photons survived the additional bounces, and their signal was too weak to detect. At the time, Velten expressed hope that better lasers and sensors and more powerful algorithms could recover enough information on reflectance, refraction, and scattering to allow reconstruction of hidden objects in the scene.

Velten’s report got wide attention at the time because it demonstrated an optical feat long regarded as extraordinary. However, like invisibility cloaking with metamaterials, it was an elegant demonstration of a headline-grabbing topic, but was far from real-world applications. Nonetheless, it was enough to stimulate more research, including a DARPA program called REVEAL, for Revolutionary Enhancement of Visibility by Exploiting Active Light fields, that was launched in 2015.3

A need for new technology

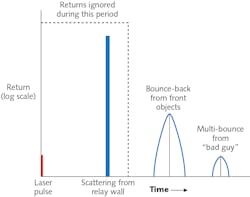

The DARPA program recognized a need for new technology to overcome the limitations of the initial demonstrations. In a review paper published in June 2020, Daniele Faccio of the University of Glasgow (Glasgow, Scotland), Velten, and Gordon Wetzstein of Stanford University (Stanford, CA) describe three challenges that must be met for practical use.4 Perhaps the most obvious need is to fill in the picture behind the front layer of the scene, which scatters the most light back to the relay wall. More scattering events could distribute light deeper into the hidden parts of the scene, but the strength of the return signal drops rapidly with the number of scatterings (see Fig. 2). Detecting such faint signals alongside brighter ones requires single-photon detectors that can be gated or have a high dynamic range.

A second challenge is that deducing the location and three-dimensional configuration of a hidden object from intensity measurements is an “ill-posed” problem, one that lacks a single unique solution or has solutions that vary discontinuously as a function of the input data. In this case, the key problem is inadequate sampling. A robust NLOS system could overcome that limitation, but it would need to measure time with picosecond accuracy, to have prior information on the imaged objects, or to offer an unconventional solution.
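To get a feel for why each extra scattering event hurts so much, here is a back-of-envelope sketch. It assumes, hypothetically, that every bounce is off a Lambertian surface with albedo ρ and that the next surface is a small patch of area A at distance r, facing the scatterer head-on; such a patch intercepts a fraction ρA/(πr²) of the incident power. The values used (ρ = 0.5, A = 0.01 m², r = 1 m) are illustrative, not measured.

```python
import math


def bounce_fraction(albedo, patch_area_m2, distance_m):
    """Fraction of incident power a Lambertian scatterer sends onto a small facing patch."""
    return albedo * patch_area_m2 / (math.pi * distance_m ** 2)


def relative_signal(n_bounces, albedo=0.5, patch_area_m2=0.01, distance_m=1.0):
    """Returned fraction after n identical diffuse bounces (a crude, hypothetical model)."""
    return bounce_fraction(albedo, patch_area_m2, distance_m) ** n_bounces


for n in (3, 4, 5):
    print(f"{n} bounces: {relative_signal(n):.1e} of the emitted photons")
```

Under these assumptions each additional bounce multiplies the signal by about 1.6 × 10⁻³, close to three orders of magnitude, which is why the weak multibounce returns sit so far below the bright early ones.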

A final challenge is developing algorithms that can quickly and efficiently work backward from the image data collected by the sensor to calculate the full three-dimensional shape of a hidden object using only the memory of a single computer.

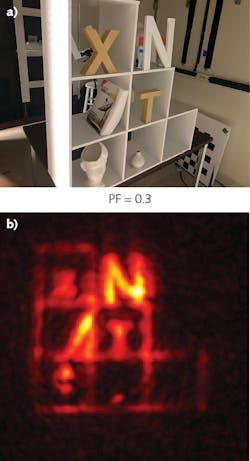

The paper reviews a number of recent studies and concludes that the most promising approach for NLOS imaging is time-resolved systems that combine ultrashort-pulse light sources with single-photon detectors. The preferred light sources are ultrashort laser pulses focused onto a relay wall so the light scatters into the hidden area. Light travels about 3 cm in 100 ps, so pulses should be no longer than that to give reasonable spatial and temporal resolution; pulses of 100 fs would freeze time into much thinner 30 μm slices in space. The laser beam scans the relay wall so that pulses scatter from points across the wall to illuminate the hidden area. Some scattered light bounces from other walls or parts of hidden objects to other points in the hidden area, so the light may take multiple bounces before a few photons from the original pulse return to the relay wall, where they can be recorded by a detector or camera. Figure 3 compares the actual scene with the image calculated from the time-of-flight returns.

Sensitive high-speed detectors or cameras also are essential for high resolution in time and space. Single-photon avalanche diodes (SPADs) have been used for many NLOS imaging experiments.5 SPADs are avalanche photodiodes biased above their breakdown voltage, in what is called Geiger mode, so that a single photon triggers an avalanche of carriers and a strong output signal. The detection system measures the time from emission of the laser pulse to the SPAD’s detection of a single returned photon, and the arrival times are accumulated in a histogram for analysis. After detecting a photon, the SPAD is shut off for a dead time of tens or hundreds of nanoseconds while the avalanche is quenched and the diode is reset. SPADs can detect up to 40% of incident photons.
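The arithmetic behind those resolution figures, and the way a SPAD's photon timestamps become a histogram, can be sketched as follows. The detector model here is deliberately simplified and the function names are hypothetical: each photon that arrives while the SPAD is armed is recorded in a fixed-width time bin, and every detection blinds the detector for a fixed dead time. Real time-correlated single-photon counting hardware also adds timing jitter, afterpulsing, and dark counts, which are ignored.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def slice_thickness_m(pulse_duration_s):
    """Distance light travels during one pulse: the depth 'frozen' by the pulse."""
    return C * pulse_duration_s


def spad_histogram(arrivals_per_pulse, bin_s, n_bins, dead_time_s):
    """Histogram photon arrival times (s after each laser pulse) for a SPAD with a dead time.

    After every detection the SPAD ignores further photons for dead_time_s.
    """
    hist = [0] * n_bins
    for arrivals in arrivals_per_pulse:
        blind_until = float("-inf")
        for t in sorted(arrivals):
            if t < blind_until:
                continue  # SPAD still quenching/resetting: this photon is lost
            b = int(t // bin_s)
            if 0 <= b < n_bins:
                hist[b] += 1
            blind_until = t + dead_time_s
    return hist


print(f"100 ps pulse -> {slice_thickness_m(100e-12) * 100:.1f} cm")
print(f"100 fs pulse -> {slice_thickness_m(100e-15) * 1e6:.0f} um")

# Two pulses: the first returns photons at 1.05 ns and 2.05 ns, the second at 2.05 ns only.
pulses = [[1.05e-9, 2.05e-9], [2.05e-9]]
print(spad_histogram(pulses, bin_s=100e-12, n_bins=40, dead_time_s=50e-9))
```

With a 50 ns dead time, the second photon of the first pulse is lost because it arrives while the detector is still blind. When early photons are plentiful, this effect (often called pile-up) under-counts the later, multibounce returns.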

Commercially, SPADs are available as single-element and array detectors, both of which can be used in NLOS imaging. Currently, most SPAD arrays are developed for lidar, and those designs need modification for best performance in NLOS imaging. Needed improvements include better time resolution, higher fill factors, gating out direct light, and more flexibility in photon time scales.

Processing techniques

The key information collected on the hidden scene is the time of flight of multiply reflected photons relative to the laser pulse. Photons that scatter only once, from the relay wall straight back to the detector, make up most of the raw return signal. They are screened out by gating: the detector does not start collecting data until these direct returns have passed, so only photons that have made multiple bounces remain in flight to the sensor.
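A time gate like this can be set from the known geometry. In this minimal sketch (hypothetical names and coordinates, straight-line propagation assumed), the gate opens a safety margin after the direct return, the light that travels from the laser to the relay wall and straight to the detector; earlier timestamps are discarded, leaving only multibounce photons. Filtering timestamps in software is only for illustration: as described above, real systems gate the detector itself so the bright direct light never triggers it.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def gate_open_time(laser, laser_spot, detector, margin_s):
    """Time (s) after the pulse at which to start counting: direct-return time plus a margin."""
    direct_path = math.dist(laser, laser_spot) + math.dist(laser_spot, detector)
    return direct_path / C + margin_s


def apply_gate(timestamps, t_open):
    """Keep only photons that arrive after the gate opens (the multibounce returns)."""
    return [t for t in timestamps if t >= t_open]


laser, detector = (0.0, 0.0, -3.0), (0.3, 0.0, -3.0)  # hypothetical positions, metres
laser_spot = (0.0, 0.0, 0.0)                          # illuminated spot on the relay wall
t_open = gate_open_time(laser, laser_spot, detector, margin_s=0.5e-9)
print(f"gate opens at {t_open * 1e9:.2f} ns")
print(apply_gate([20.1e-9, 30.0e-9, 45.0e-9], t_open))
```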

Further processing is much more of a challenge because it requires working backwards from the observed scattered light to create an image of the objects in the hidden scene that scattered the light. A variety of methods are in development. Some are heuristic approaches, which make and test approximations, trading off accuracy for speed of response. Other approaches include back-projection models, linear inverse methods, inverse light transport with nonlinear elements, models using wave optics rather than geometric optics, tracking of objects, and neural network approaches based on data collected in training sessions. Faccio et al.4 contains more details and references.
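The linear inverse methods mentioned above treat reconstruction as solving y = Ax, where y holds the measurements, x the unknown hidden scene, and A a light-transport matrix. Because the problem is ill-posed, A has tiny singular values and a naive inverse amplifies noise enormously. This toy sketch uses a random ill-conditioned matrix as a stand-in for A (it is not a real NLOS transport model) and compares naive inversion with Tikhonov-regularized least squares, a standard linear-inverse technique; the regularization weight is an illustrative choice.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 20

# Toy ill-conditioned forward operator A = U diag(s) V^T, singular values from 1 down to 1e-6.
U, _ = np.linalg.qr(rng.normal(size=(n, n)))
V, _ = np.linalg.qr(rng.normal(size=(n, n)))
s = np.logspace(0, -6, n)
A = U @ np.diag(s) @ V.T

x_true = rng.normal(size=n)                 # hypothetical hidden-scene vector
y = A @ x_true + 1e-3 * rng.normal(size=n)  # measurements with a little noise

# Naive inversion: noise along the weakest singular directions is amplified up to 1e6 times.
x_naive = np.linalg.solve(A, y)

# Tikhonov regularization: minimise ||Ax - y||^2 + lam * ||x||^2.
lam = 1e-4
x_reg = np.linalg.solve(A.T @ A + lam * np.eye(n), A.T @ y)

err_naive = np.linalg.norm(x_naive - x_true)
err_reg = np.linalg.norm(x_reg - x_true)
print(f"naive error: {err_naive:.2e}, regularized error: {err_reg:.2e}")
```

The regularized solution gives up the components that A can barely see (they are shrunk toward zero) in exchange for stability; the choice of lam trades resolution against noise.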

NLOS imaging at SPIE

During the SPIE Security + Defence Digital Forum (Sep. 20–25, 2020), Velten summarized recent results in an invited talk.6 “We can computationally recreate any imaging system your heart desires” by using a relay wall as the aperture of a virtual camera, he said. His group illuminated one point on the relay wall and then scanned the SPAD detector across the rest of the relay wall to collect the response from the hidden zone. The researchers used a phasor-field technique to combine those data with a virtual illumination wave to obtain the phase of the returned wave, and used Rayleigh-Sommerfeld diffraction operators to compute the image by back-propagating in space and time.7
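The phasor-field idea can be sketched in heavily simplified form. A virtual illumination wave (here a sinusoid with a Gaussian envelope, that is, a Gaussian band of frequencies around a 6 cm virtual wavelength) is applied to the measured transients in the frequency domain, and the result is back-propagated to candidate voxels with the phase term exp(iωτ) of a Rayleigh-Sommerfeld-type propagator. This is an illustrative sketch, not the implementation of Liu et al.: it assumes a 2D scene with one point reflector, noiseless single-impulse transients, and one laser spot with the detector scanned along the wall, and it omits the 1/r amplitude factor and all FFT-based speedups. All names and numbers are hypothetical.

```python
import numpy as np

C = 299_792_458.0  # speed of light in vacuum, m/s

# Hypothetical 2D scene: relay wall along z = 0, hidden point reflector at (0.3, 0.8) m.
laser_spot = np.array([0.0, 0.0])
detect_x = np.linspace(-1.0, 1.0, 33)
detect_spots = np.stack([detect_x, np.zeros_like(detect_x)], axis=1)
hidden = np.array([0.3, 0.8])

# Simulated transients H[d, t]: one impulse per detection spot, 10 ps time bins.
dt, n_t = 10e-12, 1024
tau_true = (np.linalg.norm(hidden - laser_spot)
            + np.linalg.norm(detect_spots - hidden, axis=1)) / C
H = np.zeros((len(detect_spots), n_t))
H[np.arange(len(detect_spots)), np.round(tau_true / dt).astype(int)] = 1.0

# Virtual wave: Gaussian spectrum centred on f_c (virtual wavelength c / f_c = 6 cm).
f_c, sigma_f = C / 0.06, 1e9
freqs = np.fft.fftfreq(n_t, dt)
Hf = np.fft.fft(H, axis=1)                  # Hf[d, k] ~ exp(-2*pi*i*f_k*t_arrival)
keep = np.abs(freqs - f_c) < 3 * sigma_f
weights = np.exp(-0.5 * ((freqs[keep] - f_c) / sigma_f) ** 2)

# Candidate voxels and their hidden path delay tau(v, d) = (|x_l - v| + |v - x_d|) / c.
xs = np.linspace(-0.5, 1.0, 76)  # 2 cm steps
zs = np.linspace(0.2, 1.4, 61)   # 2 cm steps
X, Z = np.meshgrid(xs, zs, indexing="ij")
voxels = np.stack([X.ravel(), Z.ravel()], axis=1)
d_l = np.linalg.norm(voxels - laser_spot, axis=1)
d_d = np.linalg.norm(voxels[:, None, :] - detect_spots[None, :, :], axis=2)
tau = (d_l[:, None] + d_d) / C

field = np.zeros(len(voxels), dtype=complex)
for w, f, h in zip(weights, freqs[keep], Hf[:, keep].T):
    # Back-propagate: undo each wall point's delay with the phase term exp(+i*omega*tau).
    field += w * (h[None, :] * np.exp(2j * np.pi * f * tau)).sum(axis=1)

image = np.abs(field).reshape(X.shape)
ix, iz = np.unravel_index(image.argmax(), image.shape)
print(f"peak at x = {xs[ix]:.2f} m, z = {zs[iz]:.2f} m")
```

Summing coherently over a band of frequencies, rather than using a single one, is what gives the reconstruction depth resolution; a single frequency would leave much stronger side lobes spaced roughly by the virtual wavelength.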

Frequency-wavenumber (f-k) migration had been considered much faster than phasor back-projection for reconstructing NLOS imaging data, although not as flexible as the phasor approach.8 Velten says his phasor approach has now caught up in speed, needing only 2.8 s to calculate the fast Fourier transform in MATLAB. In addition, he says, it needs less memory, is more robust to noise, and does not require confocal data, allowing his group to use SPAD arrays rather than single-pixel detectors.

A video showed a thin sheet of light scattered from the relay wall propagating through the hidden zone, illuminating contents of a model office. The incoming light washed back over a chair in front of the room, reached the back wall, and then washed forward again over other objects in the office on its fourth and fifth bounces.

Velten also described another advance. Most NLOS imaging assumes flat, static Lambertian relay surfaces and only stationary objects in the hidden scene. Now, a project led by his Wisconsin colleague Marco La Manna has shown that relay surfaces don’t need to be flat or stationary. The team designed an NLOS system with two separate detectors collecting time-of-flight data: one monitored the dynamic relay surface itself, and the other monitored light scattered onto the relay surface from the hidden scene. Their dynamic surface was simple: a pair of white curtains hanging on a frame, with a fan blowing to keep them in motion. When the system that monitored the moving screen was on and providing position data, they could use light scattered by the moving screen to image hidden stationary objects.9
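The role of the second detector can be illustrated with the same path-length bookkeeping used for a static wall. In this minimal sketch (hypothetical names and coordinates, straight-line propagation assumed), the measured current positions of the illuminated and observed points on the moving surface are used to subtract the known legs from the total time of flight. Using stale, flat-wall positions instead leaves a systematic error, here close to twice the 5 cm displacement, which would smear any reconstruction.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def hidden_path_length(tof_s, laser, laser_spot, detect_spot, detector):
    """Path length (m) inside the hidden area, given where the relay points actually were."""
    return C * tof_s - math.dist(laser, laser_spot) - math.dist(detect_spot, detector)


# Hypothetical geometry (metres). The curtain has moved 5 cm toward the instrument (z = -0.05).
laser, detector = (0.0, 0.0, -3.0), (0.2, 0.0, -3.0)
laser_spot_now, detect_spot_now = (0.0, 0.0, -0.05), (0.5, 0.0, -0.05)
hidden = (0.2, 0.0, 1.0)

true_hidden_path = math.dist(laser_spot_now, hidden) + math.dist(hidden, detect_spot_now)
tof = (math.dist(laser, laser_spot_now) + true_hidden_path
       + math.dist(detect_spot_now, detector)) / C

# Using the measured (current) positions of the relay points recovers the hidden path.
corrected = hidden_path_length(tof, laser, laser_spot_now, detect_spot_now, detector)
# Assuming the surface is still flat at z = 0 leaves a systematic error.
stale = hidden_path_length(tof, laser, (0.0, 0.0, 0.0), (0.5, 0.0, 0.0), detector)
print(f"true {true_hidden_path:.4f} m, corrected {corrected:.4f} m, stale {stale:.4f} m")
```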

Outlook

The images shown at SPIE and included in recent papers show more complex objects than in the early days. They are good enough to see limbs and poses of the human body, but not yet to recognize faces or hand gestures. But that’s good enough to encourage military sponsors, who want a system that can spot “bad guys” hiding in what look like empty buildings from safe standoff distances.

Velten is optimistic about progress. “I think we have all the theory in place to reconstruct and capture video-rate NLOS images from large stand-off distances of about one kilometer,” he says. “All the components have been demonstrated or will be very soon. After this, a lot of engineering and systems development are required. This could just be a matter of a year or two, depending on interest and funding.”

The key hardware advance needed is the design of SPAD arrays dedicated to NLOS systems rather than to lidar. The time needed to reconstruct images will decrease as the number of pixels in SPAD arrays increases. Compact picosecond lasers also are needed, says Velten. “We can do real-time reconstructions on high-end hardware, but optimization to be able to do this on a more low-end system would be nice. At the current state, the laser probably accounts for roughly 90% of the cost and power consumption.”

Photorealistic NLOS images are unlikely to be realized any time soon; Velten says they would require major scientific advances. But it’s still impressive to be able to see around corners.

REFERENCES

1. A. Kirmani, T. Hutchison, J. Davis, and R. Raskar, Proc. IEEE Int. Conf. Comput. Vis. 2009, 159–166 (2009).

2. A. Velten et al., Nat. Commun., 3, 745 (2012).

3. See https://bit.ly/NLOSRef3.

4. D. Faccio, A. Velten, and G. Wetzstein, Nat. Rev. Phys., 2, 318 (Jun. 2020); https://doi.org/10.1038/s42254-020-0174-8.

5. See https://bit.ly/NLOSRef5.

6. A. Velten, Proc. SPIE, 11540, 115400T (Sep. 20, 2020); https://doi.org/10.1117/12.2574737.

7. X. Liu, S. Bauer, and A. Velten, Nat. Commun., 11, 1645 (2020); https://doi.org/10.1038/s41467-020-15157-4.

8. M. Laurenzis, Proc. SPIE, 11540, 115400U (Sep. 20, 2020); https://doi.org/10.1117/12.2574198.

9. M. La Manna et al., Opt. Express, 28, 4, 5331–5339 (Feb. 17, 2020); https://doi.org/10.1364/oe.383586.

About the Author

Jeff Hecht

Contributing Editor

Jeff Hecht is a regular contributing editor to Laser Focus World and has been covering the laser industry for 35 years. A prolific book author, Jeff's published works include “Understanding Fiber Optics,” “Understanding Lasers,” “The Laser Guidebook,” and “Beam Weapons: The Next Arms Race.” He also has written books on the histories of lasers and fiber optics, including “City of Light: The Story of Fiber Optics,” and “Beam: The Race to Make the Laser.” Find out more at jeffhecht.com.