How thermal imaging can improve autonomous driving sensory systems

The field of autonomous driving has made considerable strides in the past decade with the advent of cost-effective sensors and improved computing capabilities. For these systems to be adopted by the masses, they must consistently perform better than human drivers in all driving conditions. While existing vehicle sensors offer important information about a vehicle’s surroundings, there have been several documented cases in which these sensors did not analyze their environment properly, leading to collisions.

In probabilistic terms, the more independent information a sensory system has, the more accurately it can estimate what it is measuring. For autonomous driving, this means that additional information allows the driving environment to be perceived more accurately, leading to better-informed driving decisions. Infrared (IR) cameras, also called thermal imaging cameras, are not currently used in commercial autonomous driving sensory systems. These sensors can add information to existing sensory systems, improving both the identification of objects in a vehicle’s surroundings and the vehicle’s reactions to them.
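One way to make the probabilistic argument concrete is inverse-variance weighting, a standard way to combine independent measurements of the same quantity. The sketch below is illustrative only and is not taken from the research described in this article: it assumes two unbiased, independent sensors with known noise variances, and the `fuse` function and the range values are hypothetical.

```python
def fuse(measurements):
    """Combine independent (value, variance) estimates by inverse-variance weighting."""
    # Each measurement is weighted by the inverse of its noise variance.
    weights = [1.0 / variance for _, variance in measurements]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, measurements)) / total_weight
    # The fused variance is never larger than the smallest input variance.
    fused_variance = 1.0 / total_weight
    return fused_value, fused_variance


# Hypothetical range estimates to the same object: (metres, variance in metres^2)
camera = (21.0, 4.0)
thermal = (19.0, 1.0)

fused_value, fused_var = fuse([camera, thermal])
print(fused_value, fused_var)
```

Under these assumptions, the fused variance is lower than that of either sensor alone, which is the sense in which an additional sensor improves the estimate.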

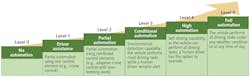

The Society of Automotive Engineers (SAE) has defined six levels of autonomous driving, ranging from Level 0 to Level 5 (see Fig. 1) [1]. Currently, most mainstream manufacturers focus on Level 2 autonomy, which involves control of two driving processes, such as adaptive cruise control combined with lane-keeping assist. Progressing to higher levels requires a more reliable environmental perception system that can cope with any weather condition at any time of day.

Building a better perception system

Identifying the objects in a vehicle’s surroundings takes two steps: each object must be detected, and it must then be classified. Detection is recognizing that an object is present; classification is identifying what that object is. For autonomous driving, it can be argued that detection is the more important of the two, because failing to notice an object while driving can have catastrophic consequences. Failing to classify an object correctly can cause errors in traffic modeling, which in turn degrades the vehicle’s reactions.

To perform detection and classification, vehicles are equipped with a variety of sensors, each providing a different kind of information. The current suite of sensors on commercial vehicles with autonomous features includes ultrasonic sensors, radar, vision cameras, and lidar. Lidar generates 3D point-cloud data of the vehicle’s surroundings, radar detects the relative location and velocity of an object within its field of view, vision cameras provide high-resolution 2D images, and ultrasonic sensors measure the distance to nearby objects, generally up to 10 m away. Because vision cameras capture more detail, they are better at classifying objects than lower-resolution sensors, which may return only a few data points per object.

Tesla’s “Autopilot,” a controversial autonomous driving feature [2], uses only a combination of vision cameras, radar, and ultrasonic sensors [3]. Many Tesla vehicles also collect data that can later be used to refine and improve object identification [4]. While these sensors and trained algorithms perform well in many situations, no amount of data from the same sensors can replace the different kind of information that other sensors provide.

So where do IR sensors fit into the suite of autonomous driving sensors? The high resolution and low cost of vision cameras make them ideal candidates for detection and classification in autonomous driving applications, but what happens when these sensors cannot be used? By nature, vision cameras cannot provide substantial sensory information in low light or low visibility, such as at night or in fog. Half of pedestrian-related accidents happen at night, even though there is significantly less traffic during these hours [5], which shows that nighttime detection is critical to preventing dangerous pedestrian collisions. Thermal imaging does not rely on visible light, so it works just as well at night as in the daytime, and in some cases better. Thermal imaging also performs well when the object of interest differs substantially in temperature from its surroundings, making it ideal for detecting living things and high-temperature objects such as vehicle rims and exhaust pipes (see Fig. 2).

Detecting and classifying objects using IR sensors

To test the potential of thermal cameras for autonomous driving, object detection and classification were performed on black-and-white thermal images using image-detection algorithms traditionally applied to color vision camera images. This involved collecting and labeling a thermal image dataset and training convolutional neural networks (CNNs) on it. Figure 3 shows an overview of the CNN architecture for image classification. A total of 7,875 images were labeled, containing a combined 21,600 objects. By deep-CNN training standards, this is a small dataset. The data was collected in a variety of nighttime and cloudy/rainy environments [6, 7]. The networks trained were YOLOv2 (23 convolution layers) and tiny YOLOv2 (9 convolution layers).

Seeking improvement

The factors affecting detection and classification performance include the depth of the network (a deeper network has more parameters) and whether the network was pretrained or trained from scratch. As expected, deeper CNNs took longer to train but led to higher recall and precision. To train on the black-and-white thermal images efficiently without altering existing CNN architectures and algorithms, the images were converted to RGB images. This made it possible to fine-tune a pretrained network and compare its results directly with those of a network trained from scratch, in which all network weights were initialized without any prior training.
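The article does not say how the conversion to RGB was done. One common approach, shown here purely as an assumption, is to copy the single thermal channel into three identical channels so that the image matches the input shape a network pretrained on color images expects. The `gray_to_rgb` helper and the sample frame are hypothetical.

```python
import numpy as np


def gray_to_rgb(gray):
    """Replicate a single-channel (H, W) image into three identical channels (H, W, 3)."""
    gray = np.asarray(gray)
    if gray.ndim != 2:
        raise ValueError("expected a 2D single-channel image")
    # Add a trailing channel axis, then repeat it three times.
    return np.repeat(gray[:, :, np.newaxis], 3, axis=2)


# Hypothetical 2x2 8-bit thermal frame
frame = np.array([[0, 128], [200, 255]], dtype=np.uint8)
rgb = gray_to_rgb(frame)
print(rgb.shape)
```

Replicating the channel adds no new information; it only lets an unmodified three-channel architecture, and any weights pretrained on color images, accept the thermal data.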

Somewhat surprisingly, the networks that had first been trained on vision camera images and then fine-tuned on thermal images outperformed the networks trained solely on the labeled thermal images. This indicates two things: 1) the performance of a CNN trained on limited thermal data can be improved by first pretraining it on readily available vision images, and 2) vision camera images and thermal images may be able to supplement each other.
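The recall and precision metrics used to compare the networks in this section follow their standard definitions. The sketch below uses hypothetical counts, not results from this study, and leaves out the step of matching predicted boxes to labeled objects.

```python
def precision_recall(true_positives, false_positives, false_negatives):
    """Return (precision, recall) from detection counts."""
    predicted = true_positives + false_positives  # everything the network reported
    actual = true_positives + false_negatives     # everything that was really there
    precision = true_positives / predicted if predicted else 0.0
    recall = true_positives / actual if actual else 0.0
    return precision, recall


# Hypothetical counts: 80 correct detections, 20 false alarms, 10 missed objects
precision, recall = precision_recall(80, 20, 10)
print(precision, recall)
```

Recall drops whenever an object goes unnoticed, so it is the metric tied most closely to the missed detections described earlier as the most dangerous failure.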

Looking forward

While recent developments in autonomous driving have been promising, much more work is needed before commercial vehicles with Level 5 autonomy become a reality. With an ever-increasing amount of data, existing sensory suites in autonomous vehicles appear to be on the right track for ideal daytime conditions, but driving conditions are not always ideal, and driving only in the daytime will never be an acceptable long-term solution. Even with limited available data, thermal imaging has shown promising results for detecting warm-bodied objects in driving scenarios. While its relative sensor cost is high, thermal imaging can provide invaluable information to improve the sensory systems used in autonomous driving. It can never serve as a standalone sensor for detecting all objects of interest, but its ability to improve existing sensory systems is undeniable.

ACKNOWLEDGEMENT

The research discussed in this article was performed during a two-year thesis project in the Centre for Mechatronics and Hybrid Technologies at McMaster University in Hamilton, ON, Canada.

REFERENCES

1. “J3016 - (R) Taxonomy and Definitions for Terms Related to Driving Automation Systems for On-Road Motor Vehicles,” SAE International (2018).

2. See https://cnb.cx/2Rxx7je.

3. See http://bit.ly/TeslaRef.

4. See http://bit.ly/ElectrekRef.

5. J. Baek et al., Sensors, 17, 1850, 1–20 (2017).

6. B. Miethig, A. Liu, S. Habibi, and M. v. Mohrenschildt, Proc. ITEC, 1–5 (2019); doi:10.1109/itec.2019.8790493.

7. See http://bit.ly/McMasterRef.

About the Author

Ben Miethig

Product Engineering Lead, Longan Vision

Ben Miethig is Product Engineering Lead at Longan Vision (Hamilton, ON, Canada); he is also affiliated with McMaster University – Centre for Mechatronics and Hybrid Technologies (CMHT; also in Hamilton, ON, Canada).

Elliot Yixin Huangfu

Research Assistant, McMaster University

Elliot Yixin Huangfu is affiliated with McMaster University – Centre for Mechatronics and Hybrid Technologies (CMHT; Hamilton, ON, Canada).