INFRARED DETECTORS: EMCCD and sCMOS imaging systems confront color night vision

AUSTIN RICHARDS and MIKE RICHARDSON

Color night vision (CNV) is a shorthand term for electronic color imaging in the visible band of the electromagnetic spectrum in low-light conditions. Successfully imaging in these conditions can be challenging since nighttime visible-light illumination levels in urban and suburban areas can be more than a million times lower than during the day.

The goal of CNV imaging is to present video to the operator in the actual colors one would see during the daytime. Security personnel often need to identify unknown objects of interest in low-light scenes, and color is a valuable cue for doing so. Color information is readily available in most daytime conditions (especially at ranges less than 1 km) but disappears rapidly at dusk with typical color surveillance cameras.

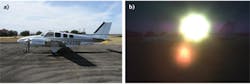

Recent developments in visible-light imaging technology, including electron-multiplying charge-coupled device (EMCCD) and scientific complementary metal-oxide semiconductor (sCMOS) sensors, enable CNV in illumination conditions where the unaided human eye is color blind. For example, a rainbow can be imaged in full color at video frame rates (30 Hz) by the light of the full moon alone (see Fig. 1). Although this "moonbow" is visible to the dark-adapted eye of the observer, it shows no apparent color and instead has only a silvery sheen. In another example, images of a vehicle in deep-twilight conditions are dramatically different for a conventional CCD imager and an sCMOS imager, even though both use the same zoom lens type with identical settings (see Fig. 2).

Low-light color imaging options

Nighttime security imaging at ranges from 0 to 500 m is typically performed by cameras equipped with conventional CCD sensors, zoom lenses, and ambient artificial lighting, such as sodium lighting in a parking lot. Under these conditions, if the operator requires color information, the ambient artificial lighting must be quite bright. This can incur significant electricity costs and may not be possible because of light-pollution considerations. The other approach to capturing low-light imagery with high color fidelity is to make the system much more sensitive to light.

Color reproduction has typically not been a requirement of nighttime security imaging systems because the cameras were not sensitive enough to image with the available visible light alone. Instead, the cameras could be reconfigured for maximum sensitivity in the near-infrared (NIR) band to take advantage of NIR illuminators or the ambient NIR illumination produced by many man-made light sources. During the day, an optical filter in the image path suppresses the cameras' natural NIR response. When this filter is retracted, the camera switches to a black-and-white video mode, because the added NIR light desaturates the image so severely that most color information is lost. Using this additional ambient NIR illumination can also produce very soft-focus images unless special lenses corrected over the wider visible-to-NIR spectrum are used.
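The desaturation effect can be illustrated with a toy model. The sketch below assumes, purely for illustration, that NIR light adds the same signal to the red, green, and blue channels (real color filter arrays leak NIR unevenly) and measures color purity with the HSV saturation formula. The object color and NIR levels are hypothetical.

```python
def hsv_saturation(r: float, g: float, b: float) -> float:
    """HSV saturation, (max - min) / max; defined as 0 for a black pixel."""
    hi, lo = max(r, g, b), min(r, g, b)
    return 0.0 if hi == 0 else (hi - lo) / hi


def add_nir(rgb: tuple, nir: float) -> tuple:
    """Add an equal NIR contribution to each channel (simplifying assumption)."""
    return tuple(c + nir for c in rgb)


# Hypothetical visible-light response of a red vehicle, in arbitrary units
red_vehicle = (0.60, 0.10, 0.10)

for nir in (0.0, 0.3, 1.0, 3.0):
    s = hsv_saturation(*add_nir(red_vehicle, nir))
    print(f"NIR = {nir:.1f}  ->  saturation = {s:.2f}")
```

In this model the difference between channels is unchanged, but it becomes a shrinking fraction of the total signal: saturation falls from about 0.83 with no NIR to about 0.14 when the NIR term is five times the red signal.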

In very dark conditions, such as rural areas with no moonlight, or when using a long-focal-length, high-f/# lens, a color camera can fail to achieve an adequate signal-to-noise ratio (SNR). The remaining options are to increase sensitivity through longer sensor integration time or pixel binning, or to use alternate sensor technologies.
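A simple per-pixel noise model makes the SNR problem concrete. The sketch below assumes a conventional sensor with no gain, in which the noise is the quadrature sum of photon shot noise, dark-current shot noise, and read noise. The photon flux and read-noise values are hypothetical.

```python
import math


def pixel_snr(signal_e: float, read_noise_e: float, dark_e: float = 0.0) -> float:
    """SNR of one pixel for a sensor without gain.

    signal_e     -- mean photoelectrons collected during the integration
    read_noise_e -- rms read noise, electrons
    dark_e       -- mean dark-current electrons during the same integration
    """
    noise = math.sqrt(signal_e + dark_e + read_noise_e**2)
    return signal_e / noise


# Hypothetical dim scene: 200 photoelectrons/s reach a pixel; 10 e- rms read noise
flux_e_per_s = 200.0
read_noise_e = 10.0

for t_ms in (33, 100, 330, 1000):
    signal = flux_e_per_s * t_ms / 1000.0
    print(f"t = {t_ms:4d} ms  signal = {signal:6.1f} e-  SNR = {pixel_snr(signal, read_noise_e):5.2f}")
```

While the signal is much smaller than the square of the read noise, SNR grows roughly linearly with integration time; once shot noise dominates, it grows only as the square root.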

Conventional CCD cameras for closed-circuit television (CCTV) applications can often be set for multiframe integration, but this compromises their ability to image moving objects. In addition, cameras with long-focal-length lenses on pan/tilt systems are very susceptible to wind-induced motion blur. These factors often lead system engineers to specify 33 ms, which corresponds to a 30 Hz frame rate, as a practical upper limit on integration time.
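The frame-rate limit and the wind sensitivity of long lenses can be put into numbers. The sketch below converts a frame rate to its maximum integration time and estimates motion blur as the angular line-of-sight rate multiplied by the integration time, divided by the pixel's instantaneous field of view (IFOV = pixel pitch / focal length). The pixel pitch, focal length, and jitter rate are hypothetical values chosen for illustration.

```python
def max_integration_ms(frame_rate_hz: float) -> float:
    """Longest integration time (ms) that fits within one frame period."""
    return 1000.0 / frame_rate_hz


def ifov_urad(pixel_pitch_um: float, focal_length_mm: float) -> float:
    """Instantaneous field of view of one pixel, in microradians."""
    # (pitch_um * 1e-6 m) / (focal_mm * 1e-3 m) = pitch/focal * 1e-3 rad = pitch/focal * 1e3 urad
    return pixel_pitch_um / focal_length_mm * 1e3


def blur_pixels(jitter_urad_per_s: float, integration_s: float,
                pixel_pitch_um: float, focal_length_mm: float) -> float:
    """Image smear, in pixels, from a constant angular line-of-sight rate."""
    return jitter_urad_per_s * integration_s / ifov_urad(pixel_pitch_um, focal_length_mm)


# Hypothetical: 6.5 um pixels behind a 1000 mm lens, 100 urad/s wind-induced jitter
for t_ms in (max_integration_ms(30), 100.0, 1000.0):
    print(f"t = {t_ms:6.1f} ms  blur = {blur_pixels(100.0, t_ms / 1000.0, 6.5, 1000.0):5.2f} px")
```

Under these assumptions the smear is about half a pixel at 33 ms but roughly 15 pixels over a 1 s integration.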

Binning is an alternative approach in which the charge captured by multiple adjacent pixels is summed, effectively increasing the size, and thus the sensitivity, of each pixel at the cost of resolution. Binning is not available in all cameras, and different types of binning can be more or less effective at increasing camera sensitivity. If longer integration times or binning are not available because of system constraints, alternate sensor technologies should be considered.
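How much binning helps depends on where the summing happens. In the simplified model below, on-chip (charge-domain) binning, as done in CCDs, incurs read noise once per binned superpixel, whereas digital binning after readout adds the read noise of every constituent pixel in quadrature. The signal and read-noise numbers are hypothetical, and `bin_image` and `binned_snr` are illustrative helpers rather than any camera's API.

```python
import math


def bin_image(img: list, n: int) -> list:
    """Sum non-overlapping n x n blocks; rows/columns that do not fill a block are dropped."""
    rows, cols = len(img) // n, len(img[0]) // n
    return [
        [sum(img[r * n + i][c * n + j] for i in range(n) for j in range(n)) for c in range(cols)]
        for r in range(rows)
    ]


def binned_snr(signal_e: float, read_noise_e: float, n: int, on_chip: bool = True) -> float:
    """SNR of an n x n superpixel, each pixel collecting signal_e photoelectrons."""
    k = n * n
    reads = 1 if on_chip else k  # number of read-noise contributions
    return k * signal_e / math.sqrt(k * signal_e + reads * read_noise_e**2)


# Hypothetical: 5 e- per pixel, 10 e- rms read noise
print(f"unbinned         SNR = {binned_snr(5, 10, 1):.2f}")
print(f"2x2 digital bin  SNR = {binned_snr(5, 10, 2, on_chip=False):.2f}")
print(f"2x2 on-chip bin  SNR = {binned_snr(5, 10, 2, on_chip=True):.2f}")
```

In this read-noise-limited example, digital 2x2 binning roughly doubles the SNR, while on-chip binning improves it by a factor of nearly four.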

Cameras equipped with EMCCD sensors attempt to overcome these challenges by adding gain to the signal captured by the sensor in low-light scenes. This is accomplished by a very low-noise impact-ionization amplifier stage between the pixel and the output of the sensor. Good low-light results require high levels of gain to increase sensitivity, but high gain can result in image artifacts in scenes with both bright and dark objects.
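The tradeoff that EM gain makes can be sketched with a commonly used EMCCD noise model. Read noise, which is added after the multiplication register, is divided by the EM gain, while the stochastic multiplication process inflates shot noise by an excess noise factor F, with F² approaching 2 at high gain. The sketch below uses that model with hypothetical signal and read-noise values and ignores clock-induced charge and saturation of the gain register, which is where bright-object artifacts arise.

```python
import math


def emccd_snr(signal_e: float, read_noise_e: float, em_gain: float,
              excess_noise_sq: float = 2.0, dark_e: float = 0.0) -> float:
    """Per-pixel SNR for an EMCCD.

    excess_noise_sq -- F^2, about 2 at high EM gain (use 1.0 with em_gain=1 for a
                       conventional CCD readout)
    Read noise is referred to the input by dividing by the EM gain.
    """
    shot_var = excess_noise_sq * (signal_e + dark_e)
    read_var = (read_noise_e / em_gain) ** 2
    return signal_e / math.sqrt(shot_var + read_var)


# Hypothetical: 4 photoelectrons per pixel, 30 e- rms read noise at video-rate readout
print(f"no EM gain : SNR = {emccd_snr(4, 30, em_gain=1, excess_noise_sq=1.0):.2f}")
print(f"gain x300  : SNR = {emccd_snr(4, 30, em_gain=300):.2f}")
```

At very high gain the read noise effectively disappears, but in this model the SNR can never exceed the square root of half the signal: the excess noise costs as much as halving the collected light.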

An alternate sensor technology for low-light color imaging is sCMOS. These sensors are based on a very different architecture from CCDs and rely on ultralow read noise, rather than gain, to achieve a good SNR. A dual-gain structure reads each pixel simultaneously at both a low gain and a high gain. The camera then determines which gain to use for each pixel and constructs a final image that captures low-light detail even in the presence of bright objects.
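The per-pixel gain selection can be sketched as a simple switch. The high-gain (low-noise) reading is used unless it is close to saturation; otherwise the low-gain reading is used, and both are converted to electrons so they share one scale. The conversion gains, the 11-bit saturation level, and the 90% switching threshold are hypothetical, and real cameras may blend the two readings near the crossover rather than switching abruptly.

```python
def merge_dual_gain(high_adu: int, low_adu: int,
                    high_e_per_adu: float, low_e_per_adu: float,
                    high_sat_adu: int, threshold: float = 0.9) -> float:
    """Return one pixel's signal in electrons from simultaneous high- and low-gain reads."""
    if high_adu < threshold * high_sat_adu:
        return high_adu * high_e_per_adu  # dim pixel: keep the low-noise high-gain read
    return low_adu * low_e_per_adu        # bright pixel: high-gain read is (nearly) saturated


def merge_image(high, low, high_e_per_adu=0.5, low_e_per_adu=15.0, high_sat_adu=2047):
    """Apply merge_dual_gain pixel by pixel to two equally sized 2-D lists."""
    return [
        [merge_dual_gain(h, lo, high_e_per_adu, low_e_per_adu, high_sat_adu)
         for h, lo in zip(hrow, lrow)]
        for hrow, lrow in zip(high, low)
    ]


# Hypothetical 1 x 2 frame: a dim face (20 e-) next to a headlight (about 20,000 e-)
high_gain_frame = [[40, 2047]]  # the headlight clips the high-gain channel
low_gain_frame = [[1, 1333]]
print(merge_image(high_gain_frame, low_gain_frame))  # -> [[20.0, 19995.0]]
```

The dim pixel keeps the precision of the high-gain read, while the bright pixel is recovered from the low-gain read instead of clipping.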

Lens-speed dynamics

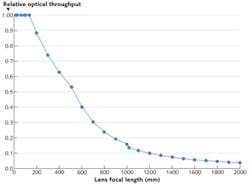

Another scenario that drives system engineers to use novel sensor technologies is long-range surveillance imaging, where long-focal-length lenses can severely limit the light signal reaching the sensor and where ambient lighting is difficult or impossible to control. In a shopping-center parking lot several hundred meters across, the whole area can be lit. But at airports or in harbors, items of interest may be kilometers from the camera system, and illumination can be highly variable, depending on factors such as moonlight or the location of the object relative to man-made light sources. Zoom lenses for this class of application typically have focal lengths of 1000 mm or more and variable f/#s that increase with zoom, often exceeding f/10 at full zoom. These high f/#s severely restrict the light reaching the sensor compared with short- or medium-range imaging systems.

Consider the Fujinon (Saitama, Japan) D60x16.7 autofocus lens, whose aperture changes from f/3.5 to f/18 over its full zoom range. This behavior is driven entirely by the fixed diameter of the objective lens, not by the lens iris. As a result, the camera image tends to get progressively darker and noisier as the operator zooms in on a target in low-light conditions: the relative optical throughput, defined as the square of the lowest f/# (3.5) divided by the square of the f/#, drops from 1 to 0.04 over the zoom range (see Fig. 3). For fixed illumination and fixed camera sensitivity, the image therefore gets approximately 26 times darker when zooming from the widest to the narrowest field of view.

In a surveillance system with a low-light imager and zoom lens, the operator will usually get the best results by acquiring targets of interest at a wide field of view, where system sensitivity is highest but spatial resolution is lowest. When an item of interest is detected, the operator may narrow the field of view to gain more information, such as the numbers on a license plate. Because of the tradeoff between available light and resolution, it is advisable to use the lowest zoom required to resolve the object of interest in a particular low-light scene.
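The throughput figures quoted for the D60x16.7 follow directly from the definition of relative optical throughput. The short calculation below uses only the f/3.5 and f/18 endpoints given in the text; the intermediate f/#s are standard stops included for illustration and are not claimed to correspond to particular zoom positions of that lens.

```python
def relative_throughput(f_number: float, f_number_min: float = 3.5) -> float:
    """Image-plane irradiance relative to the fastest setting: (N_min / N)**2."""
    return (f_number_min / f_number) ** 2


for n in (3.5, 5.6, 8.0, 11.0, 18.0):
    t = relative_throughput(n)
    print(f"f/{n:<4}  throughput = {t:.3f}  ({1 / t:5.1f}x darker)")
```

At f/18 the throughput is about 0.038, or roughly 26 times less light than at f/3.5. Holding image brightness constant would require the integration time to grow by the same factor, far beyond a 33 ms video-rate budget.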

Scenes with very bright and very dark regions

Nighttime imaging is not only about seeing in very dark conditions. Often there are very bright sources of light within nighttime scenes of interest that can produce undesirable effects, making it difficult or impossible to discern important details. A person sitting in a car with the headlights on may be impossible to identify through the glare, even though there might be sufficient illumination on the person's face to get a clear image in the absence of the headlights.

The contrast in a scene may be just as important as the absolute light level. The ratio of the brightest nonsaturated object (where saturation is defined as the maximum light signal that can be output from a pixel) to the darkest object that can be discerned through the noise in a single image is defined as the intrascene dynamic range. Imaging systems that must handle scenes with high intrascene dynamic range pose a number of unique challenges that can influence both camera and lens selection. In a scene where buildings, vehicles, and figures on a hillside are of interest but markings on an aircraft flying above the hill must also be resolved, the intrascene dynamic range can easily exceed 10,000:1. The optics and the image sensor each impose their own limits on the achievable system dynamic range, and each component must be addressed separately: a dynamic-range limitation imposed by the lens cannot be solved by using a better camera, and vice versa.

For the lens, veiling glare is the primary factor degrading system performance (see Fig. 5). This effect is caused by light from a bright source within the field of view reflecting off the various glass surfaces in the lens assembly. These reflections can obscure important details in the scene, especially in dark or shadowy areas. High-quality optical glass and coatings can help mitigate this effect.

The type of image sensor also limits the intrascene dynamic range. Conventional CCD cameras and EMCCDs are often limited to intrascene ratios of 1000:1 or less simply because of their dynamic range, that is, the ratio of their saturation level to their noise floor. Image intensifiers are considerably worse, with ratios as low as 10:1 under some conditions. Recent sCMOS cameras typically achieve dynamic ranges in excess of 10,000:1, which makes them well suited to high-dynamic-range scenes.
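A common way to estimate a sensor's single-frame dynamic range is to divide its full-well (saturation) capacity by its read-noise floor, often quoted in decibels (20 log10) or photographic stops (log2). The sketch below applies that convention to two hypothetical sensors and checks each against a 10,000:1 scene; the full-well and read-noise numbers are illustrative assumptions, not specifications of any product.

```python
import math


def dynamic_range(full_well_e: float, read_noise_e: float) -> float:
    """Single-frame dynamic range as saturation level divided by noise floor."""
    return full_well_e / read_noise_e


def to_db(ratio: float) -> float:
    return 20.0 * math.log10(ratio)


def to_stops(ratio: float) -> float:
    return math.log2(ratio)


scene_ratio = 10_000.0
sensors = {
    "hypothetical CCD": (20_000.0, 20.0),
    "hypothetical dual-gain sCMOS": (30_000.0, 1.5),
}
for name, (full_well, read_noise) in sensors.items():
    dr = dynamic_range(full_well, read_noise)
    verdict = "fits" if dr >= scene_ratio else "too small"
    print(f"{name:30s} {dr:8.0f}:1  {to_db(dr):5.1f} dB  {to_stops(dr):4.1f} stops  -> {verdict}")
```

This figure bounds only the sensor; veiling glare in the lens can reduce the usable scene contrast further.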

Beyond the native dynamic range of the camera, sensor blooming and other saturation artifacts also come into play. In EMCCDs and conventional CCDs, blooming often appears as a vertical streak extending from a bright object in the scene. Eliminating blooming usually requires stopping down the lens or shortening the integration time until the signal from the object causing the blooming falls to a level the camera can handle. This, however, reduces the signal from the rest of the image by the same ratio, so dark objects that could be imaged successfully with longer integration times or a wider aperture fall below the camera's noise floor and are lost.
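The cost of stopping down to suppress blooming can be sketched numerically. The model below scales every object's signal by the same factor so that the brightest object just reaches full well, then flags which objects still clear the noise floor. It deliberately ignores shot noise and treats the read-noise floor as the detection threshold; the scene signals, full-well capacity, and noise floors are hypothetical.

```python
def exposure_scale(signals_e: list, full_well_e: float) -> float:
    """Factor (<= 1) by which aperture/integration must cut the signal to avoid saturation."""
    return min(1.0, full_well_e / max(signals_e))


def still_detectable(signals_e: list, full_well_e: float,
                     noise_floor_e: float, min_snr: float = 1.0) -> list:
    """True for each object whose scaled signal stays at or above min_snr x noise floor."""
    k = exposure_scale(signals_e, full_well_e)
    return [s * k >= min_snr * noise_floor_e for s in signals_e]


# Hypothetical scene at full exposure (electrons): headlight, ambient-lit face, figure in shadow
scene = [2_000_000.0, 500.0, 40.0]
print(exposure_scale(scene, 20_000.0))          # 0.01
print(still_detectable(scene, 20_000.0, 20.0))  # [True, False, False]
print(still_detectable(scene, 20_000.0, 1.5))   # [True, True, False]
```

Under these assumptions, cutting exposure by 100x to tame the headlight drops the face to 5 e-, which is lost below a 20 e- floor but survives above a 1.5 e- floor.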

Cameras using sCMOS sensors typically do not suffer from blooming but can exhibit an artifact in which a very bright object in the scene appears black. This artifact, referred to as "black sun," must be addressed in the same way as blooming; however, it typically appears only at much higher light levels and thus limits system performance much less than blooming does in other sensor types.

Austin Richards is a senior research scientist and Mike Richardson is a systems engineer at FLIR Commercial Systems, 27700 SW Parkway Ave., Wilsonville, OR 97070; www.flir.com.