New technical conference probes quantum noise for new physics

It has long been recognized that high-sensitivity photodetection systems are limited by quantum-mechanical noise. Yet virtually all photodetection sensitivity calculations, whether for fiber-optic or free-space optical communications or for interferometric precision measurements, still rely on semiclassical shot-noise theory. In that theory, light is treated as a classical electromagnetic wave, and the fundamental source of noise is the random creation of discrete charge carriers when light illuminates the detector's photosensitive region.
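To make the semiclassical picture concrete, here is a minimal Python sketch of the textbook shot-noise-limited signal-to-noise ratio for direct detection. It assumes an ideal detector with quantum efficiency eta and no thermal or amplifier noise. The function name and the example numbers are illustrative assumptions, not values from the symposium papers.

```python
import math

# Physical constants (exact SI values)
PLANCK_H = 6.62607015e-34      # J*s
ELECTRON_Q = 1.602176634e-19   # C
LIGHT_C = 299_792_458.0        # m/s


def shot_noise_limited_snr(power_w, wavelength_m, bandwidth_hz, quantum_efficiency=1.0):
    """Power SNR of direct detection when shot noise is the only noise source.

    Semiclassical model (illustrative assumption: ideal detector):
      mean photocurrent      i = eta * q * P / (h * nu)
      shot-noise variance    <i_n^2> = 2 * q * i * B
    so SNR = i^2 / <i_n^2> = eta * P / (2 * h * nu * B).
    """
    nu = LIGHT_C / wavelength_m                       # optical frequency, Hz
    photocurrent = quantum_efficiency * ELECTRON_Q * power_w / (PLANCK_H * nu)
    noise_variance = 2.0 * ELECTRON_Q * photocurrent * bandwidth_hz
    return photocurrent ** 2 / noise_variance


# Example: 1 uW at 1550 nm detected in a 1 GHz bandwidth
snr = shot_noise_limited_snr(1e-6, 1550e-9, 1e9)
print(f"SNR = {snr:.0f} ({10 * math.log10(snr):.1f} dB)")
```

For these example values the power SNR comes out at roughly 3.9 × 10^3 (about 36 dB), and it grows only linearly with optical power.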

As it turns out, the light produced by lasers, LEDs, light bulbs, and nature happens to fall within a very broad class of quantum states for which shot-noise calculations are in perfect quantitative agreement with the rigorous quantum theory of photodetection. In the 1970s, however, nonclassical light sources were discovered for which this is not the case, according to Jeffrey Shapiro of the Massachusetts Institute of Technology (MIT; Cambridge, MA).1 Moreover, revolutionary advances in the physical sciences have almost always accompanied more precise investigation of the measurement limits imposed by noise.

In his brief opening remarks at the first annual SPIE symposium on Fluctuations and Noise, held in Santa Fe, NM, in June, Laszlo Kish of Texas A&M (College Station, TX), who cochaired the symposium and originally proposed it to SPIE, quoted Einstein: " 'Nature shows us only the tail of the lion. But I do not doubt that the lion belongs to it, even though he cannot at once reveal himself because of his enormous size.' In the history of science, noise phenomena often were tails of unknown animals, and the explanations came about by giving up the old physics," Kish said. "The emergence of Planck's constant and quantum physics is probably the best known example. Decades later the explanation of thermal radiation (the thermal noise of the electromagnetic field) also came about by breaking the rules of classical physics. Now again we are in a kind of revolution due in large part to the emergence of microelectronics and nanoelectronics, where noise appears to be the ultimate limit."

The first plenary address, by Marlan Scully, coauthor of the Scully-Lamb quantum laser theory and director of theoretical physics and quantum studies programs at Texas A&M, seemed to set a theme for the entire symposium with the proposition that "noise is news." Eugene Stanley, director of the Center for Polymer Studies at Boston University (Boston, MA), followed with a hint of the potential breadth of applications. He presented an econophysics theory of stock market fluctuations, in which the statistical rigor of physics is applied to what Stanley called the comparatively imprecise science currently practiced by traditional economists.2

Squeezed light

Of the four conferences in the Fluctuations and Noise symposium, the one on photonics and quantum optics focused on topics such as quantum noise in lasers and detectors, generation of nonclassical light beams, quantum cryptography, quantum teleportation, and linear-optics quantum computing.

"The real excitement about these nonclassical states (states with definite numbers of photons; squeezed states, which manipulate the noise in the quadrature components of the electromagnetic field at constant uncertainty product; and entangled states, in which two quantum light fields have correlations that exceed the bounds set by classical physics) is that they open up new possibilities for increased signal-to-noise ratio in precision measurements and for quantum nondemolition measurements," said Shapiro, who cochaired the photonics and quantum optics conference. "Although significantly nonclassical behavior has been seen with such states, they are still difficult to generate and to use, and have not found widespread application.

"The latest noise-related excitement in quantum optics, however, comes from the burgeoning field of quantum information," he said. "Quantum key distribution systems, whose security relies on the impossibility of measuring a quantum system without disturbing it; quantum teleportation systems, in which the state of a two-level quantum system can be transmitted over long distances by exploiting the purely quantum phenomenon of entanglement; and quantum computation, in which entanglement and superposition enable computational algorithms, are all areas of active research."
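The "constant uncertainty product" in Shapiro's description of squeezed states can be checked numerically. The sketch below assumes an ideal, lossless squeezed vacuum with squeeze parameter r and the common convention X1 = (a + a†)/2, X2 = (a − a†)/(2i), under which the vacuum (and coherent-state) variance of each quadrature is 1/4. These conventions and the function names are assumptions for illustration, not taken from the talks.

```python
import math

# Quadrature variance of vacuum or a coherent state, assuming X1 = (a + a^dagger)/2
VACUUM_VARIANCE = 0.25


def squeezed_quadrature_variances(r):
    """(Var X1, Var X2) for an ideal squeezed vacuum with squeeze parameter r.

    Noise is removed from X1 and added to X2 by the same factor exp(2r),
    so the product of the standard deviations stays at 1/4.
    """
    return VACUUM_VARIANCE * math.exp(-2.0 * r), VACUUM_VARIANCE * math.exp(2.0 * r)


def squeezing_db(r):
    """Noise of the squeezed quadrature relative to vacuum, in dB (negative = below shot noise)."""
    var_x1, _ = squeezed_quadrature_variances(r)
    return 10.0 * math.log10(var_x1 / VACUUM_VARIANCE)


for r in (0.0, 0.5, 1.0):
    v1, v2 = squeezed_quadrature_variances(r)
    print(f"r={r}: dX1*dX2 = {math.sqrt(v1 * v2):.3f}, squeezing = {squeezing_db(r):.2f} dB")
```

With r = 1, the squeezed quadrature sits about 8.7 dB below the vacuum (shot-noise) level while the other quadrature is amplified by the same factor, so the product ΔX1ΔX2 remains 1/4.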

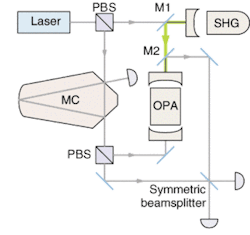

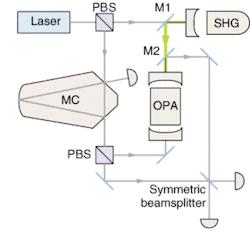

A keynote address by Ping Lam of the Australian National University (Canberra, Australia) described the use of optical parametric oscillators to generate squeezed light, achieving measurement resolution better than the shot-noise limit in the temporal, polarization, and spatial domains. Potential applications include improving interferometric sensitivity, demonstrating quantum teleportation, detecting gravitational waves, and precisely measuring displacements such as those of the cantilever in an atomic-force microscope (see figure).3

Along these lines, plenary speaker Daniel Rugar described work at IBM Almaden Research Center (San Jose, CA) on extending the sensitivity of magnetic resonance force microscopy to single-spin quantum readout.4 Invited speaker Nergis Mavalvala of MIT gave one of several talks at the conference on using squeezed light to overcome the quantum-noise limit in advanced gravitational-wave detectors, a limit that arises primarily from their increased circulating laser power.5

The symposium, which drew about 300 attendees with strong international participation and is scheduled to be held in Budapest next year, closed with an entertaining and often tongue-in-cheek debate on whether the quantum computer is simply a myth or will actually become a reality. The general view of debaters on both sides seemed to be that a special-purpose quantum computer able to handle a 1000-bit input would require a major technological undertaking, possibly on the order of 20 to 30 years, and that a general-purpose quantum computer that could sit on a desktop in every home and run Microsoft Windows would not only be extremely difficult to build but would probably not be needed.

The potential factoring capabilities of quantum computers are currently of strategic importance, and a 15-bit device already exists in Japan for factoring numbers of up to 25 bits. But Julio Gea-Banacloche of the University of Arkansas (Fayetteville, AR), editor for quantum computing at Physical Review A, raised the concern that those strategic interests might not persist strongly enough over the next 20 to 30 years to justify building a 1000-bit machine, although smaller devices are likely to be built for basic-science purposes.

REFERENCES

1. J. H. Shapiro, Proc. SPIE 5111, 383 (June 2003).

2. X. Gabaix, P. Gopikrishnan, V. Plerou, H. E. Stanley, Nature 423, 267 (May 15, 2003).

3. T. Symul, W. P. Bowen, R. Schnabel et al., Proc. SPIE 5111, 67 (June 2003).

4. Ibid., p. xiii.

5. Ibid., p. 23.