For the modern-day robot, seeing is nearing believing

It is hard not to be intrigued by the notion of a machine that can walk, talk, think, listen, work, and see just like a human. Scientists, engineers, and hobbyists have been working for decades to build robots that can mimic human behavior and, in some cases, improve upon it.

The word "robot" comes from the Czech word "robota," which means "tedious labor." For the most part, robots as we know them today are used in industrial applications, replacing humans in tedious or potentially dangerous tasks on assembly lines, in foundries and nuclear plants, on the battlefield, and in outer space. General Motors put the first industrial robot into service in 1962; today robots and robotic systems can be found in many automated assembly and production applications worldwide, as well as on the Mars Rover (see Laser Focus World, August 2003, p. 71). Simple robotic systems have also made their way into consumer electronics, with mechanical dogs and beetle-like vacuum cleaners available in department stores and via infomercials.

But for most robot aficionados, this is only the tip of the iceberg. They continue to envision a world in which robots function more like people—where the machine not only mimics simple human tasks but can perceive, assess, navigate, and manipulate its environment much like we do. Not surprisingly, this is easier said than done, especially when it comes to imbuing electronic systems with the five basic senses.

Mapping the environment

Much of what a robot can do begins with what it can see. It exists in relation to its environment, so it must know what is in that environment, and where, in order to move through it and perform its appointed tasks. Vision and sensing systems have long been a fundamental part of robotic development, especially as these systems relate to motion control and drive methods. The traditional approach has been to outfit the machine with various combinations of cameras, sensors, and computers to gather, analyze, and reconstruct images of its environment.

More recently, digital cameras, LEDs, CCDs, laser rangefinders, and sophisticated computer algorithms are enabling robots to use noncontact object sensing to navigate their environment, detecting and avoiding obstacles along the way and building an internal map from the experience (see "Building a better optical sensor," below). The Mars Rover, for example, is designed to travel autonomously up to 100 m/day, traversing unknown territory and acquiring images and data about the planet's climate and terrain [1]. The Rover's vision system is based on nine cameras, each with a 1024 × 1024-pixel frame-transfer CCD and various optics designs. The navigation cameras use stereo vision for traverse planning and general imaging, and the wide-angle hazard-avoidance cameras generate range maps of the surrounding area for obstacle detection and avoidance.
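Stereo range maps like these rest on a simple geometric relation. The sketch below assumes an idealized, rectified stereo pair: two pinhole cameras with parallel optical axes. In that setup, range Z follows from disparity d (in pixels), focal length f (in pixels), and baseline B as Z = fB/d. The function names, the 1000-pixel focal length, the 10-cm baseline, and the 3-m safety threshold are hypothetical illustration values, not the Rover's actual camera parameters.

```python
import math


def disparity_to_range(disparity_px, focal_px, baseline_m):
    """Range from disparity for a rectified pinhole stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        # Zero (or invalid) disparity: the point is effectively at infinity or unmatched.
        return math.inf
    return focal_px * baseline_m / disparity_px


def hazard_mask(disparity_map, focal_px, baseline_m, min_safe_range_m):
    """Flag pixels whose estimated range is closer than the safety threshold."""
    return [
        [disparity_to_range(d, focal_px, baseline_m) < min_safe_range_m for d in row]
        for row in disparity_map
    ]


# Hypothetical camera: 1000-pixel focal length, 10-cm baseline.
print(disparity_to_range(20, 1000, 0.10))           # about 5 m
print(hazard_mask([[0, 10, 50]], 1000, 0.10, 3.0))  # only the 2-m point is flagged
```

Because Z varies as 1/d, range error grows roughly with the square of the distance. A one-pixel disparity mistake therefore matters far more for distant terrain than for nearby rocks.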

Relatively speaking, the Rover system is simple, in part because it must be failsafe. More sophisticated robot vision and sensing systems are under development worldwide. The Carnegie Mellon Robotics Institute (Pittsburgh, PA), established in 1979, is at the forefront of three-dimensional (3-D) perception and mapping for free-ranging robots. Hans Moravec, principal research scientist at the institute, has been building robots for 40 years. Today his machines navigate with stereoscopic cameras, a light projection source, and a 3-D grid of millions of digital cells that records where objects are placed; the robot then works out geometrically how to maneuver around them (see Fig. 1).
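The article does not say how the robot turns its grid into a route. One common illustration, not necessarily Moravec's method, is a breadth-first search over a 2-D slice of an occupancy grid. This search finds a shortest obstacle-free path measured in grid steps. The `plan_path` helper and the tiny test room below are hypothetical.

```python
from collections import deque


def plan_path(occupied, start, goal):
    """Shortest 4-connected path on a 2-D grid; occupied[y][x] is True for blocked cells.

    Returns a list of (y, x) cells from start to goal, or None if no path exists.
    """
    height, width = len(occupied), len(occupied[0])
    previous = {start: None}          # predecessor map, also used as the visited set
    frontier = deque([start])
    while frontier:
        cell = frontier.popleft()
        if cell == goal:
            path = []
            while cell is not None:   # walk back through predecessors to the start
                path.append(cell)
                cell = previous[cell]
            return path[::-1]
        y, x = cell
        for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
            if (0 <= ny < height and 0 <= nx < width
                    and not occupied[ny][nx] and (ny, nx) not in previous):
                previous[(ny, nx)] = cell
                frontier.append((ny, nx))
    return None


# Hypothetical 3 x 3 slice with an obstacle in the middle cell.
room = [[False, False, False],
        [False, True,  False],
        [False, False, False]]
print(plan_path(room, (0, 0), (2, 2)))
```

In practice, a cell would be marked occupied when its accumulated evidence crosses some threshold. A 3-D version would add two more neighbor directions (up and down) to the search.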

Originally, Moravec and his colleagues built stereo-vision-guided robots that drove through clutter by tracking a few dozen features, presumed to be parts of objects, in camera images. They developed the 3-D evidence-grid approach in 1983. Instead of determining the location of objects, the grid method accumulates the "objectness" of locations arranged in a grid, slowly resolving ambiguities about which places are empty and which are filled. During mapping, a sequence of stereo image pairs is processed. The results accumulate in a three-dimensional array, the evidence grid, whose cells represent regions of space.

"We are not reconstructing an image but reconstructing a 3-D grid that provides positive and negative evidence of whether a space is occupied," Moravec said. "Each stereoscopic glimpse produces about 10,000 rays, and there are four such glimpses looking in the four compass directions, so you get about 40,000 rays at each point. In a typical hallway journey, there are 25 places at which the robot stops. From this data, the robot produces a 3-D probability grid. And this is good enough now that the robot can figure out where it is and where the obstacles are and even what some of the obstacles are."

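The idea of accumulating positive and negative evidence can be sketched with the standard log-odds occupancy update. This is a textbook simplification, not Moravec's own sensor models, which the article does not detail. Each ray subtracts a fixed amount of evidence from the cells it passes through, since that space was seen through and is probably empty. It adds evidence to the cell where the ray ends, where a surface was seen. The cell size, the evidence weights, and the `EvidenceGrid` class are assumptions. The ray is also sampled at half-cell steps rather than traced with an exact voxel traversal.

```python
import math
from collections import defaultdict


class EvidenceGrid:
    """Sparse 3-D grid of log-odds occupancy evidence (illustrative sketch)."""

    def __init__(self, cell_size, hit_evidence=0.85, miss_evidence=-0.4):
        self.cell_size = cell_size
        self.hit = hit_evidence      # added where a ray ends (surface seen)
        self.miss = miss_evidence    # added along a ray (space seen through)
        self.log_odds = defaultdict(float)  # 0.0 means no evidence either way

    def cell_of(self, point):
        return tuple(math.floor(c / self.cell_size) for c in point)

    def add_ray(self, origin, endpoint):
        end_cell = self.cell_of(endpoint)
        steps = max(1, math.ceil(math.dist(origin, endpoint) / (self.cell_size / 2)))
        passed = set()
        for k in range(steps):       # sample the ray at roughly half-cell spacing
            t = k / steps
            point = [o + t * (e - o) for o, e in zip(origin, endpoint)]
            cell = self.cell_of(point)
            if cell != end_cell and cell not in passed:
                passed.add(cell)
                self.log_odds[cell] += self.miss   # negative evidence: empty space
        self.log_odds[end_cell] += self.hit        # positive evidence: occupied

    def probability(self, point):
        """Occupancy probability; 0.5 means the cell has never been observed."""
        return 1.0 / (1.0 + math.exp(-self.log_odds.get(self.cell_of(point), 0.0)))


grid = EvidenceGrid(cell_size=0.1)
for _ in range(3):  # three glimpses of the same surface point
    grid.add_ray((0.05, 0.05, 0.05), (1.05, 0.05, 0.05))
print(grid.probability((1.05, 0.05, 0.05)))  # above 0.5: probably occupied
print(grid.probability((0.55, 0.05, 0.05)))  # below 0.5: probably empty
print(grid.probability((5.0, 5.0, 5.0)))     # 0.5: unobserved
```

Moravec quotes about 40,000 rays per stop and 25 stops. At those figures, a single hallway run would feed on the order of a million rays into such a grid, so no individual noisy ray carries much weight on its own.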
Moravec's robots and their vision systems have taken on various configurations over the years; he is currently combining textured light, trinocular stereoscopic vision with 3-D grids, color projection learning, and stereoscopic matching to make navigation-ready maps of a test area. In addition to the sensors and cameras, a critical component of his approach is the projection light source. He has experimented with laser pattern generators and mirror balls to achieve the necessary texturing of an object's surface. "You can't do stereoscopic vision to blank surfaces," he says. But he believes that high-power IR LEDs with narrow-beam optics will likely prove optimum.
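Moravec's point about blank surfaces shows up even in a toy version of stereo matching. The sketch below assumes a simple sum-of-absolute-differences (SAD) block matcher on one image row. This is a common textbook technique, not necessarily the one his system uses, and the function names and synthetic rows are hypothetical. On a textured row exactly one disparity matches. On a uniform row every disparity matches equally well, so depth cannot be recovered. Projecting a pattern onto the blank surface restores a unique match.

```python
def sad_costs(left_row, right_row, x, half_window, max_disparity):
    """SAD matching cost at left pixel x for each candidate disparity 0..max_disparity."""
    costs = []
    for d in range(max_disparity + 1):
        costs.append(sum(abs(left_row[x + k] - right_row[x + k - d])
                         for k in range(-half_window, half_window + 1)))
    return costs


def best_disparity(costs):
    """Return the unique lowest-cost disparity, or None if several tie (ambiguous)."""
    lowest = min(costs)
    winners = [d for d, c in enumerate(costs) if c == lowest]
    return winners[0] if len(winners) == 1 else None


# Synthetic scene row; the right camera sees it shifted by 3 pixels.
pattern = [(7 * i) % 11 for i in range(50)]
textured_left, textured_right = pattern[0:40], pattern[3:43]
blank_left, blank_right = [5] * 40, [5] * 40
# A hypothetical projector paints the same texture onto the blank wall.
lit_left = [5 + p for p in textured_left]
lit_right = [5 + p for p in textured_right]

print(best_disparity(sad_costs(textured_left, textured_right, 20, 2, 8)))  # 3
print(best_disparity(sad_costs(blank_left, blank_right, 20, 2, 8)))        # None
print(best_disparity(sad_costs(lit_left, lit_right, 20, 2, 8)))            # 3
```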

Selective-attention modeling

Some researchers are not convinced that environmental mapping is the best approach to modern-day robot vision. An emerging discipline called neuromorphic engineering combines artificial intelligence, biology, digital cameras, and optical engineering to help robots assess their environments more as humans and animals do. Neuromorphic engineers look at biological structures such as the retina and cortex and devise analog chips whose circuits mimic neurons and, in primitive form, the chemistry of the brain. The result is smaller, smarter, and more energy-efficient vision systems that could change the way robots function.

"It used to be that people thought the way computer and robot vision should be done is to start with an image and then have a full reconstruction of what it was," said Laurent Itti, assistant professor of computer science at the University of Southern California (Los Angeles, CA) and a leading researcher in neuromorphic vision. "But it turns out that this is an impossible task. There is an infinite number of 3-D models that could generate an array of pixels as you see it with a camera. Just by looking at a picture you cannot know what the world is from a computer perspective. The human brain infers a lot of things."

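Itti's point that a single image underdetermines the 3-D scene follows directly from perspective projection. The sketch below assumes an idealized pinhole camera, with a hypothetical focal length and principal point. In that model every point along a ray through the camera center lands on the same pixel, so a single view loses depth.

```python
def project(point, focal_px=100.0, cx=0.0, cy=0.0):
    """Ideal pinhole projection of a camera-frame point (X, Y, Z), Z > 0, to pixel (u, v)."""
    X, Y, Z = point
    return (focal_px * X / Z + cx, focal_px * Y / Z + cy)


# Scaling a point by any k > 0 slides it along the same viewing ray.
base = (1.0, 2.0, 4.0)
for k in (0.5, 1.0, 2.0, 10.0):
    scaled = tuple(k * c for c in base)
    print(scaled, project(scaled))   # every line prints pixel (25.0, 50.0)
```

Recovering depth therefore needs extra information. That can come from a second viewpoint, as in the stereo systems described earlier, or from prior knowledge about the scene, which is the kind of inference Itti attributes to the brain.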
Researchers at the Center for Neuromorphic Systems Engineering at the California Institute of Technology (Caltech; Pasadena, CA) have developed a vision system modeled after that of the tropical jumping spider [2]. The spider has only 800 photoreceptors in each of its retinas but can see as well as humans, who have 137 million photoreceptors in each eye. The key is "vibrating retinas" that allow the photoreceptors to pinpoint where changes in light intensity occur. The image sensor built at Caltech is a CMOS chip with photoreceptors arranged in a 32 × 32-pixel array, attached to a metal frame with a lens fixed at one end. The unit is mounted on springs, which cause it to shake each time the robot moves. Each shake shifts the chip's pixels to cover more area. A signal processor combines the outputs of all the pixels, taking their changing positions over time into account, to build an image. Because preprocessing is done on the chip, smaller, less power-hungry microprocessors suffice, which should lead to lighter robots that use less electricity.
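A basic shift-and-add reconstruction can illustrate how the outputs of a shaking low-resolution array are combined. This is a generic super-resolution technique, not a description of the Caltech signal processor. The sketch assumes idealized point sampling and assumes each frame's sub-pixel offset is known exactly. Real photoreceptors integrate light over an area. The helper names and the 4 × 4 test scene are hypothetical.

```python
def sample(scene, scale, offset_y, offset_x):
    """Simulate a coarse sensor: one point sample per scale x scale block, at a shifted spot."""
    return [[scene[i * scale + offset_y][j * scale + offset_x]
             for j in range(len(scene[0]) // scale)]
            for i in range(len(scene) // scale)]


def shift_and_add(frames, scale):
    """Place each coarse sample on a fine grid at its known offset and average overlaps.

    frames: list of (offset_y, offset_x, coarse_image) with 0 <= offsets < scale.
    Fine-grid cells that no frame covered are returned as None.
    """
    rows, cols = len(frames[0][2]), len(frames[0][2][0])
    total = [[0.0] * (cols * scale) for _ in range(rows * scale)]
    count = [[0] * (cols * scale) for _ in range(rows * scale)]
    for oy, ox, coarse in frames:
        for i in range(rows):
            for j in range(cols):
                y, x = i * scale + oy, j * scale + ox
                total[y][x] += coarse[i][j]
                count[y][x] += 1
    return [[total[y][x] / count[y][x] if count[y][x] else None
             for x in range(cols * scale)]
            for y in range(rows * scale)]


scene = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
offsets = [(0, 0), (0, 1), (1, 0), (1, 1)]   # four "shakes" of a 2 x 2 sensor
frames = [(oy, ox, sample(scene, 2, oy, ox)) for oy, ox in offsets]
print(shift_and_add(frames, 2))      # recovers the full 4 x 4 scene
print(shift_and_add(frames[:1], 2))  # one frame fills only a quarter of the cells
```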

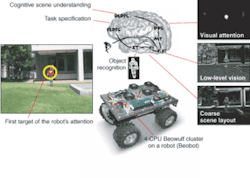

Itti and his colleagues at USC are working with an even more sophisticated approach called selective-attention modeling, which attempts to program robots to perceive and critically evaluate scenes as humans do [3]. The researchers equip robots with pan-tilt cameras for eyes and develop algorithms that let them pick out unusual objects. The robots can then construct saliency maps on the basis of local contrasts in features such as color, edges, orientation, light intensity, and motion (see Fig. 2). Current experiments in Itti's lab focus on which objects within an image attract the human eye, and why.

FIGURE 2. Neuromorphic-based algorithms are enabling robots to learn about and navigate an environment by deciding what the important objects are. "Beobots" developed at USC use generic vision modules inspired by the neurobiology of the primate visual system. These include modules for low-level vision, which feed into three parallel streams: one concerned with recognizing attended objects (center), one concerned with directing attention toward interesting objects (right, top), and one concerned with computing a coarse layout of the scene (right, bottom). Task definitions modulate these processing streams from the top down and shape the form taken by the cognitive scene-understanding module, which will decide on the course of action to be taken by the robot.
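The attention stream can be sketched with a heavily simplified center-surround saliency computation in the spirit of the Itti-Koch saliency model. The full model combines several feature channels, such as the color, orientation, intensity, and motion contrasts mentioned above, across multiple scales with normalization. The sketch uses only one intensity channel at one scale. Each pixel's saliency is the difference between its own value and the mean of its neighborhood. The most salient location is attended first and then suppressed (inhibition of return), so attention moves on to the next location. Window sizes, function names, and the test image are hypothetical.

```python
def box_mean(img, y, x, radius):
    """Mean over the square window of the given radius, clipped at the image border."""
    rows = range(max(0, y - radius), min(len(img), y + radius + 1))
    cols = range(max(0, x - radius), min(len(img[0]), x + radius + 1))
    values = [img[i][j] for i in rows for j in cols]
    return sum(values) / len(values)


def saliency_map(img, center_radius=0, surround_radius=2):
    """Center-surround contrast: |center mean - surround mean| at every pixel."""
    return [[abs(box_mean(img, y, x, center_radius) - box_mean(img, y, x, surround_radius))
             for x in range(len(img[0]))]
            for y in range(len(img))]


def scanpath(img, fixations, inhibit_radius=2):
    """Attend to the most salient pixel, suppress its neighborhood, and repeat."""
    smap = saliency_map(img)
    path = []
    for _ in range(fixations):
        y, x = max(((r, c) for r in range(len(smap)) for c in range(len(smap[0]))),
                   key=lambda p: smap[p[0]][p[1]])
        path.append((y, x))
        for r in range(max(0, y - inhibit_radius), min(len(smap), y + inhibit_radius + 1)):
            for c in range(max(0, x - inhibit_radius), min(len(smap[0]), x + inhibit_radius + 1)):
                smap[r][c] = -1.0   # inhibition of return
    return path


# Hypothetical 9 x 9 image: uniform background with a bright spot and a dimmer spot.
image = [[10] * 9 for _ in range(9)]
image[2][2], image[6][6] = 90, 50
print(scanpath(image, 2))   # [(2, 2), (6, 6)]
```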

"Our motivation was not to build a robot but to understand how vision works in humans," Itti said. "Instead of looking at what does the environment look like, we are trying to design algorithms with specific kinds of neurons and a more neuro-reason kind of approach. Sensor technology has been well studied for about 10 years, but now the focus is on creating the algorithms that can take advantage of these sensors and exploit their output."

REFERENCES

- A. Eisenmann et al., Proc. SPIE Remote Sensing Conf. 2001, Toulouse, France.

- A. Ananthaswamy, New Scientist (March 31, 2001).

- J. C. Diop, Upstream (October 2002).

Building a better optical sensor

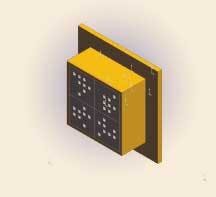

Researchers at Duke University (Durham, NC) are working to improve automated vision systems by developing more-efficient optical sensors for data sensing and processing [1]. David Brady is professor of electrical and computer engineering and director of the Fitzpatrick Center for Photonics and Communication Systems at Duke. According to Brady, traditional computer vision systems use relatively sophisticated software and camera equipment, yet they are limited to fairly simple models of the relationship between physical space and the camera. His group's approach eliminates the need for complicated software and lenses. Instead, it maps the angles of light radiating from a source by channeling the light through a set of polystyrene "pipes" onto a set of light detectors (see figure).

"In a conventional camera, each focal point corresponds to one point in space, so you need data compression, which is very common in imaging systems today but happens after you sense an image," he said. "What we are trying to do is implement this process in the physical layer of the sensor."

An early prototype monitored a moving light source at a distance of 3 m. The 25.2-mm prototype has 8 viewing angles, 8 detectors, and 36 pipes. Each pipe channels light from a given angle to a detector. Seven of the eight detectors monitor four angles each, and the remaining one monitors all eight. Each of the eight viewing angles spans 5°, giving the device a 40° field of view. According to Brady, the separate angles of the field of view allow for basic digital representations of moving objects, and the detector array design reduces the amount of information the computer must process. His group is now working to track vehicles and people in real time and has produced a prototype that can track cars 15 m away.
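The counts above are consistent with a simple binary coding scheme: 7 detectors × 4 angles + 1 detector × 8 angles = 36 pipes. The sketch below assumes one hypothetical assignment that fits those numbers, built from the sign pattern of an 8 × 8 Sylvester-Hadamard matrix. The article does not say which angles each detector actually sees. Under this assignment every angle produces a distinct on/off pattern across the eight detectors. The angular bin of a single point source can therefore be read off from just eight numbers, with the all-angle detector supplying the source's overall brightness for normalization. Function names are illustrative.

```python
def pipe_matrix():
    """Rows = detectors, columns = 5-degree viewing angles; 1 means a pipe connects them.

    Hypothetical assignment from a Sylvester-Hadamard pattern: detector 0 sees all
    eight angles, and detectors 1-7 each see exactly four.
    """
    return [[1 if bin(d & a).count("1") % 2 == 0 else 0 for a in range(8)]
            for d in range(8)]


def detector_readings(matrix, angle, intensity=1.0):
    """Ideal, noise-free detector outputs for a single point source in one angular bin."""
    return [intensity * row[angle] for row in matrix]


def locate_source(matrix, readings):
    """Return the angular bin whose detector pattern best matches the normalized readings."""
    brightness = readings[0]              # the all-angle detector sees the whole source
    if brightness <= 0:
        return None
    pattern = [r / brightness for r in readings]
    errors = [sum((p - row[a]) ** 2 for p, row in zip(pattern, matrix)) for a in range(8)]
    return min(range(8), key=lambda a: errors[a])


M = pipe_matrix()
print(sum(map(sum, M)))                                           # 36 pipes in total
print(locate_source(M, detector_readings(M, 5, intensity=3.2)))   # bin 5
```

Tracking then amounts to repeating this decoding frame by frame. With 5° bins the result is coarse, in line with the "basic digital representations" Brady describes. With several sources present at once, one would instead solve the 8 × 8 linear system, which is invertible for this particular assignment.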

REFERENCE

- P. Potuluri, U. Gopinathan, J. Adleman, D. Brady, Optics Express 11, 965 (April 21, 2003).