Algorithm detects nonwatermarked digital forgeries

In 1919, a photograph was taken of Vladimir Lenin and Leon Trotsky standing in a crowd in Moscow’s Red Square. When the same photograph was reprinted by the Soviet government half a century later, Trotsky, who had in the interim been exiled by Josef Stalin and murdered in Mexico City, was nowhere in sight.

It used to be that image forgers, such as the one who removed Trotsky, relied on airbrushing. Nowadays, with the rise of digital technology, anyone with a computer can add people and objects to photographs or remove them. Given the power that pictures of war and strife hold, and the now-widespread use of digital images, the ability to detect digital forgeries is becoming more important than ever.

Embedding a digital “watermark” (a pattern, undetectable by the human eye, that helps reveal alterations) in an image is one way to make tampering harder to get away with. But most cameras do not have the ability to watermark. A group of researchers led by Hany Farid, associate professor of computer science at Dartmouth College (Hanover, NH), is developing several approaches to detecting digital tampering that do not depend on the use of watermarks [1].

Detecting resampling

One of the approaches relies on the fact that many alterations of digital photos are performed by copying segments from other photos or from different areas of the same photo, resampling (resizing and/or rotating) the segments so that they fit properly, then pasting them in, perhaps also blending the edges or making other final corrections. But while resizing and rotating can leave the pasted-in segment looking genuine to the eye, both operations introduce measurable statistical changes.

Merely rotating or resizing an image will cause most of its pixels to take up positions that no longer fit the correct pixel lattice. Resampling fits the image back to the pixel lattice by creating entirely new pixels, each a blend of pixels from the original image. The operation correlates each pixel with its neighbors; however, the amount of correlation is not uniform, but varies periodically across the lattice. The Dartmouth researchers apply an expectation/maximization (EM) algorithm that reveals resampled areas by determining the amount of correlation each pixel has with its neighbors (see figure).

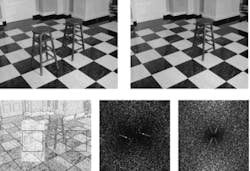

A digital photo is taken of two stools in a room (top left); the photo is digitally altered so that one of the stools is removed (top right). An expectation/maximization algorithm produces a probability map of the image (bottom left). A Fourier transform of the altered region in the map reveals a well-defined periodic component (bottom center), while a Fourier transform of an unaltered region shows no such component (bottom right). The presence of the Fourier-transform spikes indicates that the associated region was resampled.
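A probability map of this kind can be approximated in one dimension with a short sketch. The Python below is an illustrative simplification, not the Dartmouth group's implementation. It assumes each sample is either a linear combination of its two immediate neighbors plus Gaussian noise, or an uncorrelated sample spread uniformly over a 0-255 range, and it alternates between fitting the neighbor weights and estimating each sample's probability of belonging to the correlated group. The function names, the two-neighbor window, the iteration count, and the noise floor `min_sigma` are all assumptions made for the example.

```python
import math
import random


def stretch_by_two(samples):
    """Double the length of a 1-D signal with linear interpolation."""
    out = []
    for left, right in zip(samples, samples[1:]):
        out.append(left)                 # even position: original sample
        out.append((left + right) / 2)   # odd position: average of its neighbors
    out.append(samples[-1])
    return out


def em_probability_map(y, iterations=50, value_range=256.0, min_sigma=0.5):
    """Per-sample probability of being a linear blend of the two neighbors.

    Hypothetical simplified model: y[i] ~ a*y[i-1] + b*y[i+1] + Gaussian noise
    for correlated samples, uniform density for everything else.
    """
    interior = range(1, len(y) - 1)
    prob = [1.0] * len(y)                # start by treating every sample as correlated
    prob[0] = prob[-1] = 0.0             # endpoints lack a neighbor and are never used
    outlier_density = 1.0 / value_range
    a = b = 0.0
    for _ in range(iterations):
        # M-step: weighted least squares for a and b (2x2 normal equations).
        suu = suv = svv = suy = svy = 0.0
        for i in interior:
            w, u, v = prob[i], y[i - 1], y[i + 1]
            suu += w * u * u
            suv += w * u * v
            svv += w * v * v
            suy += w * u * y[i]
            svy += w * v * y[i]
        det = suu * svv - suv * suv
        a = (suy * svv - suv * svy) / det
        b = (suu * svy - suv * suy) / det

        # Re-estimate the noise level from the weighted residuals.
        residuals = [0.0] * len(y)
        for i in interior:
            residuals[i] = y[i] - a * y[i - 1] - b * y[i + 1]
        weighted_sq = sum(prob[i] * residuals[i] ** 2 for i in interior)
        total_weight = sum(prob[i] for i in interior)
        sigma = max(min_sigma, math.sqrt(weighted_sq / total_weight))

        # E-step: posterior probability that each sample is correlated.
        norm = sigma * math.sqrt(2 * math.pi)
        for i in interior:
            likelihood = math.exp(-residuals[i] ** 2 / (2 * sigma ** 2)) / norm
            prob[i] = likelihood / (likelihood + outlier_density)
    return a, b, prob


random.seed(1)
original = [random.uniform(0, 255) for _ in range(64)]
stretched = stretch_by_two(original)
a, b, prob = em_probability_map(stretched)

odd_probs = prob[1:-1:2]    # interpolated samples
even_probs = prob[2:-1:2]   # samples carried over from the original
odd_mean = sum(odd_probs) / len(odd_probs)
even_mean = sum(even_probs) / len(even_probs)
print(f"a={a:.3f} b={b:.3f} odd={odd_mean:.2f} even={even_mean:.2f}")
```

In this toy case the map alternates between high and low probability from one sample to the next, which is the kind of periodic structure a Fourier transform picks up. A real image needs a 2-D neighborhood and more careful initialization than this sketch uses.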

In a simple example, an image is stretched by a factor of two in one dimension; in this case, all the even pixels in that direction (with “even” picked arbitrarily here) wind up being pixels from the original image, while the odd pixels are all interpolations of their two even neighbors. For this example, all the even pixels show low correlation, while all the odd pixels are pure averages of their two neighbors, and thus highly correlated.
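The stretch-by-two case is easy to check numerically. The following Python sketch assumes a 1-D row of random pixel values and plain linear interpolation; the helper names are hypothetical. It measures how far each sample lies from the average of its two neighbors, a distance that should be zero at every interpolated (odd) position and generally nonzero at the original (even) positions.

```python
import random


def stretch_by_two(samples):
    """Double the length of a 1-D signal with linear interpolation."""
    out = []
    for left, right in zip(samples, samples[1:]):
        out.append(left)                 # even position: original sample
        out.append((left + right) / 2)   # odd position: average of its neighbors
    out.append(samples[-1])
    return out


def neighbor_residuals(signal):
    """Distance of each interior sample from the mean of its two neighbors.

    Entry k of the result describes signal[k + 1].
    """
    return [abs(signal[i] - (signal[i - 1] + signal[i + 1]) / 2)
            for i in range(1, len(signal) - 1)]


random.seed(0)
original = [random.uniform(0, 255) for _ in range(16)]
stretched = stretch_by_two(original)
residuals = neighbor_residuals(stretched)

odd_residuals = residuals[0::2]    # stretched positions 1, 3, 5, ...
even_residuals = residuals[1::2]   # stretched positions 2, 4, 6, ...

print("largest odd-position residual: ", max(odd_residuals))
print("largest even-position residual:", max(even_residuals))
```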

In reality, rotations and resizings are not such a perfect fit. But the EM algorithm shows that there are periodic variations in correlation for resampled areas under any typical rotation or resizing. A 2-D Fourier transform of the algorithm’s correlation map reveals periodic components that not only show the presence of tampering, but also quantify the amount of resizing in each dimension and the amount of rotation (although with some ambiguities; for example, resizings of 5/4 and 3/4 produce the same periodic patterns).
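The Fourier step can be illustrated with a 1-D stand-in. The Python sketch below is a simplification under stated assumptions: it replaces the EM correlation map with a cruder proxy (each sample's distance from the average of its two neighbors), uses a signal stretched by a factor of two, and computes a plain discrete Fourier transform by hand. All function names are hypothetical. For the stretched signal, the strongest nonzero frequency corresponds to a period of two samples, matching the alternation between original and interpolated pixels.

```python
import cmath
import math
import random


def stretch_by_two(samples):
    """Double the length of a 1-D signal with linear interpolation."""
    out = []
    for left, right in zip(samples, samples[1:]):
        out.append(left)
        out.append((left + right) / 2)
    out.append(samples[-1])
    return out


def neighbor_residuals(signal):
    """Crude correlation proxy: distance from the mean of the two neighbors."""
    return [abs(signal[i] - (signal[i - 1] + signal[i + 1]) / 2)
            for i in range(1, len(signal) - 1)]


def dft_magnitudes(values):
    """Magnitudes of DFT bins 0..n/2 after removing the mean."""
    n = len(values)
    mean = sum(values) / n
    centered = [v - mean for v in values]
    return [abs(sum(c * cmath.exp(-2j * math.pi * k * t / n)
                    for t, c in enumerate(centered)))
            for k in range(n // 2 + 1)]


def strongest_period(residual_map):
    """Period, in samples, of the strongest nonzero-frequency component."""
    spectrum = dft_magnitudes(residual_map)
    peak_bin = max(range(1, len(spectrum)), key=lambda k: spectrum[k])
    return len(residual_map) / peak_bin, spectrum[peak_bin]


random.seed(0)
original = [random.uniform(0, 255) for _ in range(33)]
resampled = stretch_by_two(original)

# Trim to an even length so a period of two falls exactly on a DFT bin.
residual_map = neighbor_residuals(resampled)[:62]
period, peak = strongest_period(residual_map)
print("strongest period in resampled signal:", period)
```

Running the same measurement on the untouched `original` signal gives a point of comparison, in the same spirit as the altered and unaltered regions in the figure. For image data, the equivalent computation would be a 2-D transform over a region of the map.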

Another approach developed by the Dartmouth group involves determining the signal-to-noise ratio at many points across an image; portions pasted from a separate image usually have a different signal-to-noise ratio, says Farid. Other approaches that reveal possible tampering include detecting luminance nonlinearities or double JPEG compression of an image.

“We’re not relying on a ‘silver bullet,’ ” says Farid. “Instead, we’re developing a series of tools, each of which can be foiled by experts, but which the average user will have a hard time with. Also, using these tools simultaneously makes tampering harder to get away with.”

REFERENCE

1. A. C. Popescu and H. Farid, Statistical Tools for Digital Forensics, 6th Intl. Workshop on Information Hiding, Toronto, Canada (2004).