Airborne LiDAR system advances 3D mapping, terrain imaging

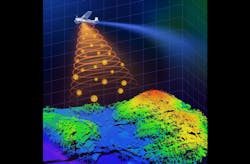

Researchers at the University of Science and Technology of China (USTC; Hefei, China) developed a single-photon light detection and ranging (LiDAR) system that can 3D map objects and terrain with high accuracy, even in tough environments like dense vegetation (see video).

The system works by firing bursts of laser pulses toward the ground. The pulses bounce off objects on the ground and are then captured by single-photon avalanche diode arrays. Because these detectors are sensitive to individual photons, they detect the reflected laser pulses more efficiently, which in turn allows a lower-power laser to be used.

“By measuring how long it takes for the light to travel to the ground and back, we can create detailed 3D images of the terrain below,” says researcher Feihu Xu, a professor at USTC.
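The time-of-flight relationship Xu describes can be sketched in a few lines. This is a minimal illustration, not the team's processing code: the function names are hypothetical, and it assumes the pulse travels at the vacuum speed of light, ignoring atmospheric effects.

```python
# Vacuum speed of light in meters per second (exact by definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_from_round_trip(round_trip_s: float) -> float:
    """Distance to the target, given the pulse's round-trip time in seconds.

    The pulse covers the distance twice (out and back), hence the division by 2.
    Assumption: propagation at the vacuum speed of light.
    """
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def round_trip_from_range(range_m: float) -> float:
    """Round-trip time in seconds for a target `range_m` meters away."""
    return 2.0 * range_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    one_mile_m = 1609.344  # illustrative distance only
    t = round_trip_from_range(one_mile_m)
    print(f"round trip: {t * 1e6:.2f} microseconds")
    print(f"recovered range: {range_from_round_trip(t):.3f} m")
```

For a target one mile away, the round trip takes roughly 10.7 microseconds, which gives a sense of the timing precision a ranging system needs to resolve centimeter-scale height differences.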

But the team faced challenges, such as finding a way to shrink the entire system and reduce its energy consumption so that it could run on a resource-limited platform.

“We had to find solutions to maintain high imaging performance on resource-limited platforms,” Xu explains. “It meant we had to restrict the laser power and optical aperture, which could potentially compromise imaging quality.”

Perfecting the design

A crucial element of the design for overcoming these challenges is a set of specially designed scanning mirrors, which capture sub-pixel information about ground targets through continuous scanning.

In their work, detailed in Optica, the team began with a prototype of the airborne single-photon LiDAR system and conducted pre-flight ground tests. The tests were aimed at verifying that the system can achieve super-resolution imaging through a sub-pixel scanning procedure and a computational algorithm that extracts the sub-pixel information from small numbers of raw photon detections. The system was then integrated with the other devices needed in the airborne environment.
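To see why scanning in sub-pixel steps carries extra information, consider a toy 1D model. This is an illustrative sketch only, not the team's algorithm: the function names `coarse_scan` and `interleave_scans` are hypothetical, each detector pixel is assumed to simply sum the light from `pixel_width` neighboring fine cells, and noise is ignored.

```python
import numpy as np


def coarse_scan(scene, pixel_width, shift):
    """Sample `scene` with detector pixels that are `pixel_width` fine cells wide.

    `shift` is the sub-pixel offset of the first pixel, in fine cells.
    Each output value is the sum of the fine cells under one pixel.
    """
    scene = np.asarray(scene, dtype=float)
    usable = (len(scene) - shift) // pixel_width * pixel_width
    window = scene[shift:shift + usable]
    return window.reshape(-1, pixel_width).sum(axis=1)


def interleave_scans(scene, pixel_width):
    """Combine scans taken at every sub-pixel shift onto the fine grid.

    The result is sampled once per fine cell, but each sample is still
    blurred over one pixel width (a sliding-window sum of the scene).
    """
    scans = [coarse_scan(scene, pixel_width, s) for s in range(pixel_width)]
    n = min(len(s) for s in scans)  # keep only positions covered by every shift
    fine = np.empty(n * pixel_width)
    for s, scan in enumerate(scans):
        fine[s::pixel_width] = scan[:n]
    return fine


if __name__ == "__main__":
    scene = np.zeros(12)
    scene[4] = 1.0  # a single point target on the fine grid
    print("one coarse scan: ", coarse_scan(scene, 3, 0))
    print("interleaved scans:", interleave_scans(scene, 3))
```

In this model a single coarse scan only tells you which pixel the target fell in, while the interleaved scans show the pixel footprint sliding across it one fine cell at a time. The interleaved signal is still blurred by the pixel footprint, which is why a computational step is needed afterward to sharpen it.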

The researchers used small telescopes with an optical aperture of 47 mm as the receiving optics to reduce the system’s size. The system uses lower laser power and a smaller optical aperture than conventional systems, but Xu says that with the help of the computational algorithm, it still produces high-quality 3D images of the ground.

The team’s compact setup makes it simpler to operate and more versatile. It’s more efficient, as well, using the lowest laser power and the smallest optical aperture, while still maintaining good performance in terms of detection range and imaging resolution.

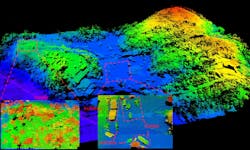

In a series of tests, the system’s LiDAR imaging achieved a resolution of 15 cm from about a mile away. Adding sub-pixel scanning and 3D deconvolution to the setup improved that resolution to 6 cm from the same distance.
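The article does not say which deconvolution algorithm the team uses. As a stand-in, the sketch below shows Richardson-Lucy deconvolution, a widely used iterative method for photon-count data, reduced to 1D for readability. The function name, the Gaussian blur kernel, and the iteration count are all assumptions made for illustration, and the example is noise-free.

```python
import numpy as np


def richardson_lucy_1d(observed, psf, iterations=50):
    """Richardson-Lucy deconvolution of a 1D non-negative signal.

    `observed` is the blurred measurement and `psf` is the blur kernel
    (odd length, non-negative). Each iteration re-blurs the current
    estimate, compares it with the measurement, and applies a
    multiplicative correction, so the estimate stays non-negative.
    """
    observed = np.asarray(observed, dtype=float)
    psf = np.asarray(psf, dtype=float)
    psf = psf / psf.sum()          # normalize so the kernel preserves total counts
    psf_flipped = psf[::-1]        # adjoint of the blur operator
    estimate = np.full(observed.shape, observed.mean())
    for _ in range(iterations):
        reblurred = np.convolve(estimate, psf, mode="same")
        ratio = observed / np.maximum(reblurred, 1e-12)  # guard against division by zero
        estimate *= np.convolve(ratio, psf_flipped, mode="same")
    return estimate


if __name__ == "__main__":
    # Hypothetical scene: two point targets two cells apart.
    scene = np.zeros(21)
    scene[9] = scene[11] = 10.0

    # Assumed Gaussian blur kernel, standard deviation of one cell.
    x = np.arange(-3, 4)
    psf = np.exp(-x**2 / 2.0)
    psf /= psf.sum()

    blurred = np.convolve(scene, psf, mode="same")
    restored = richardson_lucy_1d(blurred, psf, iterations=200)
    print("blurred: ", np.round(blurred[6:15], 2))
    print("restored:", np.round(restored[6:15], 2))
```

In the blurred signal the two targets merge into a single bump; the iterations concentrate the counts back toward where they came from. A real system would work in three dimensions and with noisy photon counts, where the number of iterations and any regularization become important design choices.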

Other experiments involved positioning the system on a small airplane for several weeks. This demonstrated its effectiveness in real-world settings by producing more detailed images of ground terrain and objects than have previously been possible.

Steps toward the future

The USTC researchers are now working to further enhance their new system’s performance, which will include making it more stable, durable, and cost-effective. These improvements will be necessary before the technology can be commercialized, Xu says.

“In the future, our technique could help deploy single-photon LiDAR on low-cost platforms such as drones, for applications including assessing forest health, monitoring terrain changes following natural disasters, and tracking urban development,” Xu says, all of which “have direct implications for environmental conservation, disaster relief, and urban planning.”

The team’s ultimate goal is to someday install the system on a spaceborne platform such as a satellite. Integration of the technology onto satellites could potentially revolutionize the way information about our planet is obtained. From monitoring climate change to managing natural resources, Xu says the new system’s high-quality 3D imaging capabilities could provide invaluable insights into Earth’s dynamics.

FURTHER READING

Y. Hong et al., Optica, 11, 5, 612–618 (2024); https://doi.org/10.1364/optica.518999.

About the Author

Justine Murphy

Multimedia Director, Digital Infrastructure

Justine Murphy is the multimedia director for Endeavor Business Media's Digital Infrastructure Group. She is a multiple award-winning writer and editor with more than 20 years of experience in newspaper publishing as well as public relations, marketing, and communications. For nearly 10 years, she has covered all facets of the optics and photonics industry as an editor, writer, web news anchor, and podcast host for an internationally reaching magazine publishing company. Her work has earned accolades from the New England Press Association as well as the SIIA/Jesse H. Neal Awards. She received a B.A. from the Massachusetts College of Liberal Arts.