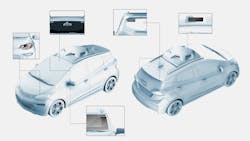

Light detection and ranging (lidar) has garnered a lot of attention recently with system manufacturers applying the technology to a growing number of applications, including land surveying, farming, and mining. However, the most significant buzz is around autonomous vehicles, and for good reason—the combination of a laser, a scanner, and a specialized GPS receiver to rapidly measure positions of different objects makes the technology an ideal fit (see Fig. 1).

Cars adorned with lidar systems driving through the streets of major cities are a look into the future. But it’s obvious we’re not quite there yet—the existing systems are quite bulky and being able to deliver a sleeker package remains an ongoing challenge.

What’s in the way?

While autonomous vehicle technology continues to evolve, its development, implementation, and production still face obstacles. Cost, manufacturability, reliability, and functionality remain the most prominent, according to Hod Finkelstein, chief R&D officer at AEye (Dublin, CA), a developer of sensing and perception systems (embedded with AI and adaptive lidar) for autonomous vehicles (see Fig. 2).

“[All] are really critical elements,” he says. “The first category [cost, manufacturability, and reliability], in my opinion, is more critical today. When you manufacture an autonomous vehicle, you can think about the shuttle that is driving students around the university campus—it’s driving them—or you can think really of the high-volume application, which is the cars that we're all driving.”

Finkelstein adds that there is “the challenge of gearing toward high-volume, reliable manufacturing that really can be integrated into a car and not just produce pretty PowerPoint slides and demonstrations.” This ties into challenges at the manufacturing phase. Some lidar companies go through tier-one auto suppliers, which deliver components and systems directly to the original equipment (auto) manufacturers.

Unfortunately, few companies within the lidar space possess these strategic tier-one partnerships. “And it's only through this type of partnership that you can actually hope to get to high-volume automotive production in terms of the functionality of the system,” says Finkelstein.

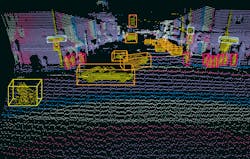

The functionality of autonomous vehicles must include seeing to a very far range—lidar can aid this. “If you're driving 55 miles an hour, you’ll have enough time to stop,” Finkelstein says. “You also need to make sure that you know if there is a guy driving next to you, and he’s trying to cut you off, then you will be aware. You need to make sure that if you are driving on the streets and there is the ball dribbling in front of you, you will stop in time before the kid runs after it.”
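The link between speed and required sensing range can be made concrete with a back-of-the-envelope stopping-distance estimate. The sketch below is illustrative only: the function name, the 1.5 s detection-to-brake latency, and the 7 m/s² deceleration are assumptions chosen for the example, not figures from Finkelstein or AEye.

```python
# Rough stopping-distance estimate: distance traveled during the
# detection-to-brake latency plus the braking distance v^2 / (2a).
# All default values are illustrative assumptions, not sourced figures.

MPH_TO_MPS = 0.44704  # exact conversion from miles per hour to meters per second


def stopping_distance_m(speed_mph, latency_s=1.5, decel_mps2=7.0):
    """Return the distance in meters covered from detection to standstill.

    speed_mph   -- vehicle speed in miles per hour
    latency_s   -- assumed time between detecting an object and braking
    decel_mps2  -- assumed constant braking deceleration (dry pavement)
    """
    v = speed_mph * MPH_TO_MPS                 # speed in m/s
    latency_distance = v * latency_s           # traveled before brakes engage
    braking_distance = v ** 2 / (2.0 * decel_mps2)
    return latency_distance + braking_distance


if __name__ == "__main__":
    for mph in (25, 55, 75):
        print(f"{mph} mph: about {stopping_distance_m(mph):.0f} m to stop")
```

Under these assumed values, a car traveling 55 mph needs roughly 80 m to stop, so the sensor has to detect an obstacle at least that far ahead. A wet road or slower processing (a lower deceleration or a longer latency) pushes the required range up quickly, since the braking term grows with the square of speed.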

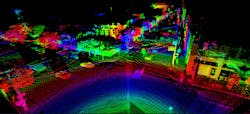

Determining the range has been challenging as well. Some developers believe that 200 m is good, according to Dr. Paul F. McManaman, author of several lidar-focused books and papers, but others are seeking longer ranges. Companies are developing systems with different ranges as well as various testing points when determining aspects such as pedestrian traffic and road debris (see Fig. 3).

What’s needed?

It is time for a universal set of standards for lidar systems implemented on autonomous vehicles—and McManaman hopes to develop them. McManaman is a fellow of SPIE (and its former president), IEEE, and Optica, as well as a former chief scientist for the U.S. Air Force Research Lab, where he developed numerous laser detection and ranging (ladar) standards.

With the current lack of universal standards, McManaman refers to auto lidar measurement as the Wild West. For testing lidar systems, McManaman says companies are coming up with different measurement models. “The most stressing case is the little kid who just learned to walk who wanders out between the cars and suddenly, they’re there. What do you do?”

Consider, for instance, the difference between a fast-traveling motorcycle and a child near the road. “A motorcycle is coming really fast, with what’s called an unprotected left on a two-lane road; there’s no turn signal. There’s a lot of metal in a motorcycle—you’ll use radar for that,” McManaman says. “But with a kid, there's no metal in the kid, so radar won’t work in a system like that; light sensors could.”

If an auto company wants to compare performance, there ought to be a standard set of measurements, explains McManaman. “It’s difficult if one does a set of 16 measurements, another does a set at 23, measuring different things. You want to have a benchmark.”

Placement of corner cube reflectors, set in various places, would help study what light and sensors are necessary for an autonomous vehicle lidar system. According to McManaman, larger corner cubes are ideal—rather than smaller ones that only simulate specific things such as stop signs—because they would allow capture of a whole light beam.

“I want to make sure that I measure the field of view of the different light and force [those testing the system] to be at a minimum field of view while they do the measurements,” he says.

Ultimately, seeing a full field of view will be necessary for any autonomous vehicle lidar system.

At this year’s SPIE Defense + Commercial Sensing tradeshow and conference, McManaman plans to delve into lidar test and measurement, kicking off a multiyear initiative with the help of SPIE. He has pulled together a community from across the country, including people from government, industry, auto manufacturers, and universities, as well as others interested in lidar and its use in autonomous vehicles.

Throughout the McManaman-led lidar testing and study, participant feedback will prove critical. Following initial feedback, “a number of months later we’ll talk to people in optical engineering, talk about the system performance. Once we get feedback, [we] can change measurements, do it again, get more feedback, change measurements again.” He anticipates having a set of standards in place for all autonomous vehicle lidar systems within the next few years.

What’s next?

The introduction and adoption of technologies from adjacent spaces is encouraging, explains Finkelstein, citing AEye’s founder and CTO, Luis Dussan. Dussan came from the military and has drawn from a problem existing with some missile systems: how to search in a very large space for a target, then acquire its range and position with very high accuracy and at a high refresh rate with fast updates. “He adopted technologies, for example, from optical communications and from the semiconductor state, and together with a lot of embedded computing, we were able to integrate that into a very small form factor, low cost, and reliable [lidar] system,” says Finkelstein.

The current high cost of these lidar systems for autonomous vehicles is a critical factor, with some of the leading lidar manufacturers offering components and systems that cost thousands of dollars. Finkelstein is hopeful there will be “an order of magnitude cost reduction” at some point. Now, “it’s really about coming up with architectures and integrating technologies that can ride on the much higher volumes of other markets that facilitate lower costs,” he says.

But there is more to autonomous vehicles’ lidar development than manufacturing costs and price points.

“The richness of the phenomenology and lidar is off the charts,” McManaman says. “Some people do say, ‘Well, why do we need lidar? I do pretty good with my eye.’ My question to them is, ‘Would you rather have 10 times fewer auto deaths in this country?’ [With lidar], we could save 90% of the lives we currently lose.”

A key advantage of lidar technology is that it can take in lots of different modalities of data—more than humans can—and rapidly; it can make decisions faster.

Of course, lidar isn’t the be-all and end-all for autonomous vehicles. “But it’s one of the sensors you need in your portfolio,” McManaman says. “And you would really like to use all of the sensing modalities you can in order to get the best sight picture.”

About the Author

Justine Murphy

Multimedia Director, Digital Infrastructure

Justine Murphy is the multimedia director for Endeavor Business Media's Digital Infrastructure Group. She is a multiple award-winning writer and editor with more than 20 years of experience in newspaper publishing as well as public relations, marketing, and communications. For nearly 10 years, she has covered all facets of the optics and photonics industry as an editor, writer, web news anchor, and podcast host for an internationally reaching magazine publishing company. Her work has earned accolades from the New England Press Association as well as the SIIA/Jesse H. Neal Awards. She received a B.A. from the Massachusetts College of Liberal Arts.