Challenges of full autonomy for sensing in autonomous vehicles

Initially only available as options on high-end cars, driver assistance technology has gradually gained acceptance in the mainstream. Adaptive cruise control came in the 1990s, followed by blind spot monitoring, lane control, and automatic braking based on cameras and radar. Collision-avoidance systems that brake cars to prevent rear-end collisions will become mandatory in new cars in the European Union in May 2022 and are becoming standard on many new models in the U.S. Safety analysts predict automatic braking could reduce crash deaths up to 20%.

Integrating and enhancing those features seemed an obvious path to fully automated driving.

Yet an August 2021 poll found only 23% of adults would ride in a fully autonomous car, up from 19% three years earlier but still disconcerting. Highly publicized fatal accidents have eroded public acceptance. Uber bailed out and sold its robo-taxi development program in December 2020, and Waymo stopped calling its vehicles “self-driving cars” in early 2021. Even the good news that Tesla sold over 900,000 cars equipped with Autopilot in 2021 was tempered by the fact that they are not fully autonomous, and by a National Highway Traffic Safety Administration investigation, begun in August 2021, of 11 accidents in which Teslas in Autopilot mode killed one first responder and injured 17 more.

It’s time to upgrade the eyes and brains of self-driving cars.

Sensors and artificial intelligence

The eyes are a network of sensors looking in all directions around the cars, while the brains are artificial intelligence (AI) systems running on powerful computers linked to archives of digital maps and other data. The software analyzes sensor data and other information to track pedestrians and other vehicles, predict where they are going, and calculate the path the car should take to drive safely.

Both collecting and processing the data are complex real-time operations. Even the transit time of light is significant; a LiDAR or radar pulse takes about a microsecond to travel from the source to a target 150 m away and back. The primary sensors are cameras, microwave radar, and LiDAR, but many cars also use ultrasound for automated parking, and collect GPS signals to locate the vehicle on digital maps.
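The transit-time figure can be checked with a few lines of arithmetic. The sketch below is only an illustration, not any vendor's ranging code; the function names are hypothetical, and it assumes the pulse travels at the vacuum speed of light.

```python
# Speed of light in vacuum, m/s (exact by SI definition).
C_M_PER_S = 299_792_458.0


def round_trip_time_s(distance_m: float) -> float:
    """Time for a pulse to reach a target distance_m away and return."""
    return 2.0 * distance_m / C_M_PER_S


def distance_from_round_trip_m(round_trip_s: float) -> float:
    """Target distance implied by a measured round-trip time."""
    return C_M_PER_S * round_trip_s / 2.0


if __name__ == "__main__":
    t = round_trip_time_s(150.0)
    print(f"Target at 150 m: {t * 1e6:.3f} microseconds round trip")
```

The same relation run in reverse shows why timing matters: each nanosecond of timing error corresponds to roughly 15 cm of range error.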

The overall system is complex to design, integrate, and optimize. In 2020, engineers at the robotics firm PerceptIn (Santa Clara, CA) reported that the company had spent roughly half of its R&D budget for the past three years on building and optimizing the computing system. “We find that autonomous driving incorporates a myriad of different tasks across computing and sensor domains with new design constraints. While accelerating individual algorithms is extremely valuable, what eventually mattered is the systematic understanding and optimization of the end-to-end system.”

Cameras for driver assistance and autonomy

Camera sensors are well developed and inexpensive, and hundreds of millions have been sold for advanced driver assistance systems (ADAS) and automated driving (AD) systems. While all use silicon detectors, the details differ between types of systems and car makers. ADAS cameras use small processors mounted behind the rear-view mirror, while AD cameras are mounted around the outside of the car, with data links to a central computer inside the vehicle.

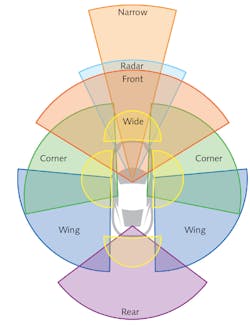

Figure 1 shows typical placement of cameras in the two types of systems. The orange areas show the wide main field of view and the narrow long field of view used in ADAS cameras to look for hazards in front of the moving car. Self-driving cars also have additional cameras on the sides and rear for full 360° coverage around the car, with the fields of view for short- and long-distance cameras shown. The field of view for the rear-view camera is in purple.

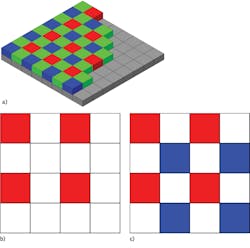

The specialization of cameras goes well beyond the field of view and range of the camera, according to Andy Hanvey of camera chip supplier Omnivision (Santa Clara, CA). Three classes of cameras are used in cars: machine vision chips for ADAS and AD; viewing chips for surround-view and rear-view cameras; and interior chips to monitor the driver. Each type is optimized for specific functions, and the detector array usually carries a filter array other than the usual Bayer RGB pattern for color photography (see Fig. 2a).

Machine-vision chips provide the AI system with data used to track the positions and motions of other cars and objects nearby. Their four-element filters are optimized for grayscale processing, and the images are not displayed to the driver. A common choice is one red element and three clear elements, called RCCC (see Fig. 2b). RCCC images look monochrome to the eye, and the sensors can’t record color images. The three clear elements give the sensor the best sensitivity, while the red element identifies red light from brakes or traffic signals.

Another option is RCCB, with one red filter, two clear filters, and one blue filter (see Fig. 2c). This is attractive when the camera output is fed both to the machine vision processor and to a driver display showing rear or side views of the car’s surroundings, where color is needed. A similar alternative is the RYYCy filter, with red, yellow, yellow, and cyan elements, which also can be used for color reproduction or recording in vehicle DVRs, available in some high-end cars.
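One way to picture these filter arrays is as a 2 × 2 tile repeated across the sensor. The sketch below encodes the four mosaics named in the text; the element counts come from the descriptions above, but the position of each element within the tile is an assumption made for illustration, and the function names are hypothetical.

```python
from collections import Counter

# Each mosaic is a 2x2 tile repeated across the sensor.
# R = red, G = green, B = blue, C = clear, Y = yellow, Cy = cyan.
# Element counts follow the article; positions within the tile are assumed.
MOSAICS = {
    "Bayer RGGB": (("R", "G"), ("G", "B")),
    "RCCC": (("R", "C"), ("C", "C")),
    "RCCB": (("R", "C"), ("C", "B")),
    "RYYCy": (("R", "Y"), ("Y", "Cy")),
}


def filter_at(mosaic: str, row: int, col: int) -> str:
    """Filter element covering the pixel at (row, col)."""
    tile = MOSAICS[mosaic]
    return tile[row % 2][col % 2]


def filter_fractions(mosaic: str) -> dict:
    """Fraction of pixels covered by each filter element."""
    counts = Counter(f for tile_row in MOSAICS[mosaic] for f in tile_row)
    return {name: n / 4 for name, n in counts.items()}


if __name__ == "__main__":
    for name in MOSAICS:
        print(name, filter_fractions(name))
```

Seen this way, the tradeoff is plain: RCCC leaves three-quarters of the pixels unfiltered for sensitivity, while RCCB and RYYCy give up some of that to recover color.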

Other important features for machine vision include the field of view, resolution, and dynamic range. Full autonomy pushes demand to higher levels, including pixel counts of 8 to 15 million, fields of view of 140° to 160°, dynamic ranges of 120 to 140 dB, and good low-light performance for night driving. Pixel-count requirements are lower for rear- and side-view images displayed for the driver.

The third class of cameras monitors driver alertness and attention, and the rest of the interior for security. These applications may use another filter configuration, RGB-IR. The near-IR portion of the silicon range can monitor light reflected from the driver’s face, which is not distracting because the driver can’t see it. The visible light can be used for security or other imaging.

Distracted or inattentive driving has long been recognized as a safety problem and was evident in early fatal Tesla accidents. Tesla has made driver monitors standard features, as have General Motors with its Super Cruise line and Ford with its BlueCruise cars. The European Union is going further—in 2024, it will require all new vehicles sold to have a driver monitoring system that watches the driver for signs of sleepiness or distraction.

Weather limitations

Cameras depend on visible light, so they are vulnerable to the same environmental conditions that impair human vision, from solar glare that dazzles the eye to fog or precipitation that absorbs light and reduces the signal. Digital cameras used in autos have dynamic ranges of 120 dB (a bit depth of 20 in CCDs) or more, similar to the nominal 120 dB range of the human eye spanning both night and day vision. However, their peak color sensitivity differs; the human eye is most sensitive to green, but silicon color sensitivity is more even, so RGB photographic cameras use two green elements to each red or blue element to replicate human vision.
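The link between 120 dB and a bit depth of 20 follows from the decibel definition. The sketch below assumes the 20·log10 convention commonly used for image sensors and an ideal linear encoding. Real automotive sensors may reach their quoted range in other ways, such as combining exposures, so treat it as a rough check only. The function names are hypothetical.

```python
import math


def dynamic_range_db(bit_depth: int) -> float:
    """Dynamic range in dB of an ideal linear sensor with this bit depth."""
    return 20.0 * math.log10(2 ** bit_depth)


def bits_needed(range_db: float) -> int:
    """Smallest whole number of bits whose ideal range covers range_db."""
    db_per_bit = 20.0 * math.log10(2)  # about 6.02 dB per bit
    return math.ceil(range_db / db_per_bit)


if __name__ == "__main__":
    print(f"20 bits -> {dynamic_range_db(20):.1f} dB")
    print(f"120 dB needs {bits_needed(120.0)} bits")
    print(f"140 dB needs {bits_needed(140.0)} bits")
```

Under these assumptions each added bit is worth about 6 dB, so the 140 dB upper end quoted for full autonomy would correspond to about 24 bits.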

Ambient light levels can differ by more than 120 dB, which can pose problems for camera sensors. Engineers for auto supplier Valeo (Paris, France) have developed a dataset for classifying weather and light conditions, but say much more work is needed.

Shadows can change with the weather, time of day, and the seasons, making them tricky for both humans and cameras. Figure 3 shows how shadows can conceal a raised curb from view by reducing contrast between curb and asphalt at certain times of day and viewing angles. The shadows could cause drivers to hit the curb and damage tires during the few hours they covered the curb.

Stereo camera distance measurements

Stereo cameras can measure distances to objects by taking images from two separate points and observing how the position of the object in the picture shifts between the two views. For a given shift, the range to the object is proportional to the distance between the two points where the photos were taken. For digital cameras, any error in alignment of the rows of pixels in the right and left cameras degrades distance estimates.
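The geometry can be written down compactly for the textbook case of two identical, perfectly rectified pinhole cameras, where range equals focal length times baseline divided by disparity. The sketch below illustrates that standard relation, not NODAR's method; the focal length and disparity values are hypothetical.

```python
def stereo_range_m(focal_length_px: float, baseline_m: float,
                   disparity_px: float) -> float:
    """Range to an object from its pixel shift between two rectified views.

    focal_length_px: focal length expressed in pixels (assumed equal
        for both cameras).
    baseline_m: distance between the two camera centers, in meters.
    disparity_px: horizontal shift of the object between the images.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite range")
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical numbers: 2000-pixel focal length, 20 cm baseline.
    for disparity in (80.0, 16.0, 8.0):
        rng = stereo_range_m(2000.0, 0.2, disparity)
        print(f"disparity {disparity:5.1f} px -> range {rng:6.1f} m")
```

Because disparity shrinks as range grows, distant objects shift by only a few pixels, which is why small alignment errors matter so much at long range.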

“Even a 1/100th of a degree shift in the relative orientation of the left and right cameras could completely ruin the output depth map,” says Leaf Jiang, founder of startup NODAR Inc. (Somerville, MA). For automotive use, that alignment must remain stable for the life of the car. The longest spacing at which that stability is possible with state-of-the-art mechanical engineering is about 20 cm, which limits range measurement to about 50 m.

To extend the spacing between cameras and thus the range of stereo cameras, Jiang says NODAR developed a two-step software process for stabilizing the camera results frame by frame. First, they calibrate or rectify the images to a fixed frame of reference, then they solve the stereo correspondence problem for each pair of pixels. This allows stereo cameras to be up to 2 m apart (the width of a car), yielding precise range measurements out to 500 m, which is 10 times the range for conventional stereo cameras. The cameras don’t have to be bolted onto a single frame; they just need to be mounted with overlapping fields of view, typically at the front of the car’s right and left sides.
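A first-order error estimate suggests why a wider spacing extends useful range. For ideal rectified pinhole cameras, range Z = f·B/d, so a small disparity error Δd produces a range error of about Z²·Δd/(f·B). The sketch below applies that textbook approximation with hypothetical values for focal length and matching error; it does not model NODAR's software.

```python
def range_error_m(range_m: float, focal_length_px: float,
                  baseline_m: float, disparity_error_px: float) -> float:
    """First-order range error caused by a small disparity error.

    Differentiating Z = f * B / d with respect to d gives
    |dZ| = Z**2 / (f * B) * |dd|.
    """
    return range_m ** 2 * disparity_error_px / (focal_length_px * baseline_m)


FOCAL_PX = 2000.0         # hypothetical focal length, in pixels
DISPARITY_ERR_PX = 0.25   # hypothetical matching error, in pixels

if __name__ == "__main__":
    for baseline in (0.2, 2.0):
        err = range_error_m(100.0, FOCAL_PX, baseline, DISPARITY_ERR_PX)
        print(f"baseline {baseline:.1f} m: about {err:.3f} m error at 100 m")
```

Under these assumptions the error at a given distance falls in proportion to the baseline, so moving from 20 cm to 2 m cuts it tenfold, in line with the tenfold range gain described above.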

A single processing system does both imaging and ranging. Color cameras can generate color-coded point clouds. The system densely samples the field of view, so it can spot and avoid small objects such as loose mufflers, bricks, or potholes that could interfere with the vehicle. The long distance would be most important looking forward and backward, Jiang says.

Microwave radar

The low cost of microwave radars makes it easy to deploy several around the car to measure distance, and they can see through weather much better than visible light. High-frequency radars in the 77 GHz band can measure long distances for driving; shorter-range 24 GHz radar can aid in parking or monitoring blind spots. However, radars have problems identifying and tracking pedestrians and determining heights, so they can be confused by bridges and signs above the road or trailers with open space below them. Those limitations have made additional sensors a must in ADAS and AD.

Mobileye (Jerusalem, Israel), founded to develop computer vision systems, plans to give radar a bigger role to take advantage of its low cost. According to Mobileye founder and CEO Amnon Shashua, current “radars are useless for stand-alone sensing [because they] can’t deal with tight spacing.” To overcome this, the company is developing software-defined radars configured to have higher resolution. “Radar with the right algorithm can do very high resolution” over distances to 50 m with deep learning, he says. Mobileye says its developmental radars can nearly match the performance of LiDAR.

Outlook

Advanced sensing systems require advanced processors designed for vehicle applications. Nvidia (Santa Clara, CA) recently announced NVIDIA Drive, designed to run Level 4 autonomous cars with 12 exterior cameras, three interior cameras, nine radars, and two LiDARs. The specialized processors and software are the essential brains that process the signals gathered by the sensors that serve as the eyes of autonomous cars.

While assisted driving and sensors are doing well, autonomous driving is struggling because AI is having trouble understanding the complexities of driving. AI has beaten chess masters and won at other games with complex, consistent, and well-defined rules that test human intelligence. But AI can fail in driving because the “rules of the road” are neither clearly defined nor well enforced, and AI doesn’t deal well with unexpected situations, from poorly marked lanes to emergency vehicles stopped in the road with lights flashing. Nothing says bad driving like crashing into a stopped fire truck, and autonomous cars have done that more than once. It’s no wonder that the public is wary of riding in cars with a record of such embarrassing accidents. One of the big challenges facing the industry is convincing the public they can do better.

About the Author

Jeff Hecht

Contributing Editor

Jeff Hecht is a regular contributing editor to Laser Focus World and has been covering the laser industry for 35 years. A prolific book author, Jeff's published works include “Understanding Fiber Optics,” “Understanding Lasers,” “The Laser Guidebook,” and “Beam Weapons: The Next Arms Race.” He also has written books on the histories of lasers and fiber optics, including “City of Light: The Story of Fiber Optics,” and “Beam: The Race to Make the Laser.” Find out more at jeffhecht.com.