Teaching computers to recognize faces

Government and industry want to use facial recognition, but are the algorithms ready for the applications? Developers, aided by government programs, are producing sophisticated approaches in image processing for facial recognition.

Human brains are optimized to recognize and distinguish between faces. We can identify people we know, even with pliable features, changing facial hair, glasses, or makeup, even under different illumination or from different poses, and even after not seeing a person for years. Computers, however, can sort large data sets faster than humans.

Organizations involved with government antiterrorism and corporate security want to recognize people using biometrics of some sort. So why use faces? Because humans recognize each other by face, faces are always visible, and a picture of the face can be acquired without intruding on the person’s privacy. In addition, facial recognition is one of the few biometrics that can easily be checked by a human, and faces are already used for many security applications, including passports and driver’s licenses.

Facial recognition is already commercialized. In 2002, the facial-recognition market generated $21.5 million, according to Frost & Sullivan’s World Face Recognition Biometrics Markets published in 2003. But is it ready for large, critical applications such as automated passport verification?

The government-sponsored Face Recognition Vendor Test (FRVT), run by the National Institute of Standards and Technology (NIST; Gaithersburg, MD), is encouraging improvement in facial recognition through impartial testing and a Grand Challenge.

What is the task?

Facial recognition typically involves several tasks. Verification checks that a sample matches a reference, such as whether a traveler’s face matches his passport photo. Identification compares a sample to a number of references to look for a match. The process of matching a face against a “watch list” contains elements of verification and identification: first the image of the unknown person must be checked against the watch list, and if there is a match, then the system must provide the identity.
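The three tasks differ mainly in how many references are consulted and whether a threshold gates the answer. The following Python sketch illustrates that difference; the cosine-similarity score, the three-number feature vectors, the names `alice` and `bob`, and the 0.8 threshold are all assumptions made for illustration, not details of any system in the tests.

```python
import math

def similarity(a, b):
    """Cosine similarity between two feature vectors (an assumed score)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def verify(sample, reference, threshold):
    """Verification: does the sample match one claimed reference?"""
    return similarity(sample, reference) >= threshold

def identify(sample, gallery):
    """Identification: return the best-matching identity and its score."""
    scored = ((name, similarity(sample, ref)) for name, ref in gallery.items())
    return max(scored, key=lambda pair: pair[1])

def watch_list(sample, gallery, threshold):
    """Watch list: first decide whether anyone matches, then report who."""
    name, score = identify(sample, gallery)
    return name if score >= threshold else None

# Hypothetical gallery and probes; real systems use far longer feature vectors.
gallery = {"alice": [1.0, 0.0, 0.2], "bob": [0.0, 1.0, 0.1]}
probe = [0.9, 0.1, 0.2]
stranger = [0.0, 0.0, 1.0]

print(verify(probe, gallery["alice"], 0.8))
print(identify(probe, gallery)[0])
print(watch_list(stranger, gallery, 0.8))
```

Here the watch-list function returns no identity for the stranger because the best score falls below the threshold, which is the "is this person on the list at all?" step that plain identification lacks.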

How well a program works depends on whether it can find matches between samples and references, and on how it fails. Some percentage of imposters will be incorrectly matched to valid users; these false positives are a major security concern. Conversely, the rejection of valid users, or false negatives, can cause great frustration for the people turned away.
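Both kinds of failure can be counted directly once a system produces a match score for each comparison. The sketch below assumes that higher scores mean a better match and uses made-up score lists; it shows how the false-positive and false-negative rates move in opposite directions as the acceptance threshold changes.

```python
def error_rates(genuine_scores, impostor_scores, threshold):
    """Return (false-positive rate, false-negative rate) at one threshold.

    Assumes a comparison is accepted when its score is >= threshold.
    """
    false_accepts = sum(s >= threshold for s in impostor_scores)
    false_rejects = sum(s < threshold for s in genuine_scores)
    return (false_accepts / len(impostor_scores),
            false_rejects / len(genuine_scores))

# Illustrative scores only: valid users compared with their own references,
# and imposters compared with someone else's reference.
genuine = [0.91, 0.85, 0.78, 0.95, 0.60]
impostor = [0.30, 0.45, 0.82, 0.20, 0.55, 0.10, 0.40, 0.35]

far, frr = error_rates(genuine, impostor, threshold=0.8)
strict_far, strict_frr = error_rates(genuine, impostor, threshold=0.9)
print(far, frr)                 # looser threshold: some imposters get in
print(strict_far, strict_frr)   # stricter threshold: more valid users rejected
```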

NIST’s Grand Challenge

NIST is running a series of facial-recognition tests, supported by many government agencies. The first test was in 2000, the most recent was held in 2002, and the next test will be held in August and September 2005.

The FRVT 2002 assessed the capability of mature, automated face-recognition systems to meet requirements for large-scale real-world applications using a database of more than 37,000 faces. While only commercial vendors participated in the 2002 test, NIST has encouraged academic researchers to submit systems for the 2005 test, as well as encouraging vendors from 2002 to return.

According to P. Jonathon Phillips, director of the program, developers claim new face-recognition techniques developed since FRVT 2002 are capable of an order-of-magnitude increase in performance over those in FRVT 2002. Phillips proposed to put these claims to the test in the Face Recognition Grand Challenge, which is intended to spur development of algorithms that are markedly better than the best performers in the 2002 test. The specific goal is to verify 98% of the faces in the test but keep the false-positive verification rate down to 0.1%.
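A goal stated as "98% verified at a 0.1% false-positive rate" fixes the false-positive rate first and then asks how many genuine comparisons still pass. The sketch below shows one way such a check might be scored; the synthetic score lists and the helper names `threshold_for_far` and `rate_above` are hypothetical, and this is not the scoring procedure NIST uses.

```python
import math

def threshold_for_far(impostor_scores, target_far):
    """Pick a threshold that admits at most target_far of the impostors."""
    ranked = sorted(impostor_scores, reverse=True)
    allowed = math.floor(target_far * len(ranked) + 1e-9)
    # Only scores strictly above the returned value are accepted.
    return ranked[min(allowed, len(ranked) - 1)]

def rate_above(scores, threshold):
    """Fraction of scores strictly above the threshold."""
    return sum(s > threshold for s in scores) / len(scores)

# Synthetic, evenly spaced scores standing in for real comparison results.
impostor = [i / 2000 for i in range(1000)]       # 0.0 up to 0.4995
genuine = [0.45 + i / 200 for i in range(100)]   # 0.45 up to 0.945

threshold = threshold_for_far(impostor, target_far=0.001)
false_accept_rate = rate_above(impostor, threshold)
verification_rate = rate_above(genuine, threshold)
meets_goal = verification_rate >= 0.98 and false_accept_rate <= 0.001
print(threshold, false_accept_rate, verification_rate, meets_goal)
```

With these made-up numbers the false-positive rate lands on the 0.1% target, but only 90% of genuine comparisons pass, so this hypothetical system would fall short of the 98% goal.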

“The most prominent of these new techniques,” Phillips says, “are recognition from high-resolution still images, multisample still images, three-dimensional (3-D) face scans, and sophisticated preprocessing techniques.”

The FRVT 2005 will assess these, as well as provide a direct comparison of face recognition between humans and computers. It turns out, says Phillips, that while humans are very good at comparing people we know, humans are not so good at comparing faces we don’t know.

Results of the test will be available in early 2006.

Correlating features

Facial recognition is an intriguing and complicated pattern-recognition problem. All facial-recognition systems look for strong correlations between images that indicate that two (or more) images are of the same face. The specifics of how they do so are many and varied. While few commercial vendors share specifics of their algorithms, a typical system obtains the sample image, finds a face, preprocesses it, and then compares processed data in some way to reference data.

Preprocessing can include correcting for pose and illumination, aligning the face imagery, equalizing the pixel values, and normalizing the contrast and brightness, as well as throwing away data. One image can have several thousand pixels, each with intensity and color information. Humans don’t need that much information to identify faces, and a study linked in the FRVT 2000 report suggests that images can be compressed to tenths of their original data without degrading performance of computer-recognition algorithms.
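Two of the preprocessing steps named above, equalizing pixel values and normalizing contrast and brightness, are simple enough to sketch with NumPy. The version below assumes an 8-bit grayscale image that has already been cropped to the face; the random low-contrast array merely stands in for a real image, and vendor pipelines may differ considerably.

```python
import numpy as np

def equalize_histogram(image):
    """Spread 8-bit gray levels so the cumulative histogram is roughly linear."""
    hist = np.bincount(image.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]                # count at the darkest level present
    scale = max(cdf[-1] - cdf_min, 1)
    lut = np.clip(np.round((cdf - cdf_min) / scale * 255), 0, 255)
    return lut.astype(np.uint8)[image]       # look up each pixel's new level

def normalize(image):
    """Shift to zero mean and scale to unit variance."""
    pixels = image.astype(np.float64)
    std = pixels.std()
    return (pixels - pixels.mean()) / (std if std > 0 else 1.0)

rng = np.random.default_rng(0)
# Stand-in for a dim, low-contrast face crop: gray levels only from 40 to 89.
dark_face = rng.integers(40, 90, size=(64, 64), dtype=np.uint8)

equalized = equalize_histogram(dark_face)
normalized = normalize(equalized)
print(dark_face.min(), dark_face.max(), equalized.min(), equalized.max())
```

After equalization the gray levels span the full 0 to 255 range, and after normalization two images of the same face taken at different brightness settings become more directly comparable.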

Some vendors advertise how small a face data file can be: less than 100 kbyte in some instances. However, extreme compression may not work for the new algorithms, which include high-resolution images. And given computing and networking advances during the past decade, databases of large image files (that once might have choked a computer system) are not too big to handle.

First-generation methods

First-generation algorithms that proved useful for large databases include Eigenfaces, Fisherfaces, Bayesian intrapersonal/extrapersonal classifiers, and elastic bunch graphing.

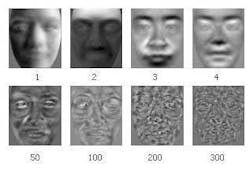

Eigenfaces, also known as principal component analysis (PCA), was developed at the Massachusetts Institute of Technology (MIT; Cambridge, MA) in the early 1990s. An Eigenface representation is generated from the principal components of the covariance matrix of a training set of facial images. This method converts the facial data into Eigenvectors projected into Eigenspace (a subspace), which allows considerable data compression because surprisingly few Eigenvector terms are needed to give a fair likeness of most faces. The method catches the imagination because the vectors form images that look like strange, bland human faces (see Fig. 1). The projections into Eigenspace are compared and the nearest neighbors are assumed to be matches.
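The Eigenface steps described above (principal components of the training set, projection into the subspace, nearest-neighbor comparison) can be sketched in a few lines of NumPy. This is a minimal illustration on synthetic data, not the original MIT implementation: the random 32 × 32 "faces", the noise level, and the function names are assumptions.

```python
import numpy as np

def train_eigenfaces(faces, n_components):
    """faces: (n_images, n_pixels). Returns the mean face and top eigenfaces."""
    mean_face = faces.mean(axis=0)
    centered = faces - mean_face
    # Rows of vt are eigenvectors of the training set's covariance matrix,
    # ordered from largest to smallest variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return mean_face, vt[:n_components]

def project(face, mean_face, eigenfaces):
    """Describe a face by a handful of weights in Eigenspace."""
    return eigenfaces @ (face - mean_face)

def nearest_neighbor(probe_weights, gallery_weights):
    distances = np.linalg.norm(gallery_weights - probe_weights, axis=1)
    return int(np.argmin(distances))

rng = np.random.default_rng(0)
subjects = rng.normal(size=(5, 32 * 32))     # five synthetic "faces"
gallery = subjects + 0.05 * rng.normal(size=subjects.shape)

mean_face, eigenfaces = train_eigenfaces(gallery, n_components=4)
gallery_weights = np.array([project(f, mean_face, eigenfaces) for f in gallery])

probe = subjects[3] + 0.05 * rng.normal(size=32 * 32)   # new image of subject 3
match = nearest_neighbor(project(probe, mean_face, eigenfaces), gallery_weights)
print(match, gallery_weights.shape)
```

Each 1,024-pixel image is reduced to four weights here, which is the data compression the method is known for; reshaping a row of `eigenfaces` to 32 × 32 gives the ghostly face-like images.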

The Fisherfaces algorithm, also known as linear discriminant analysis (LDA), was developed at the University of Maryland (College Park, MD). This method is much like PCA, but with changes that make differences between faces more evident.
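The change that makes differences between faces more evident is that LDA uses identity labels: it looks for directions along which images of different people separate while images of the same person stay together. The two-dimensional toy data below is an assumption chosen to make the contrast visible; the large shared variation along the first axis stands in for something like lighting, and the small offset along the second axis stands in for identity.

```python
import numpy as np

def lda_directions(features, labels, n_components):
    """Directions maximizing between-class over within-class scatter."""
    overall_mean = features.mean(axis=0)
    dim = features.shape[1]
    within = np.zeros((dim, dim))
    between = np.zeros((dim, dim))
    for c in np.unique(labels):
        group = features[labels == c]
        mean_c = group.mean(axis=0)
        within += (group - mean_c).T @ (group - mean_c)
        diff = (mean_c - overall_mean)[:, None]
        between += len(group) * (diff @ diff.T)
    eigvals, eigvecs = np.linalg.eig(np.linalg.pinv(within) @ between)
    order = np.argsort(eigvals.real)[::-1][:n_components]
    return eigvecs.real[:, order].T

rng = np.random.default_rng(0)
labels = np.repeat([0, 1], 100)                       # two hypothetical subjects
spread = rng.normal(size=(200, 2)) * [5.0, 0.3]       # big shared variation on axis 0
offsets = np.where(labels[:, None] == 0, [0.0, -1.0], [0.0, 1.0])
features = spread + offsets                           # identity lives on axis 1

pca_axis = np.linalg.svd(features - features.mean(axis=0))[2][0]
lda_axis = lda_directions(features, labels, 1)[0]
print(np.round(np.abs(pca_axis), 2), np.round(np.abs(lda_axis), 2))
```

PCA picks the axis with the most overall variance, which here carries no identity information, while LDA picks the axis that separates the two subjects.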

Instead of looking for the nearest neighbor in a subspace (like PCA and LDA), the Bayesian intrapersonal/extrapersonal classifier looks at the difference between two face images. The difference images can fall into two classes: they are either derived from two images of the same subject or derived from images of different subjects. Each of the classes forms a distribution that is roughly Gaussian. Distributions can overlap without confusion.
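One way to picture this classifier is to fit a Gaussian to each class of difference vectors and then ask which class better explains a new difference. The sketch below makes several simplifying assumptions that are not taken from the published method: eight-number feature vectors instead of images, diagonal covariances, equal prior probabilities, and synthetic data.

```python
import numpy as np

def fit_gaussian(differences):
    """Diagonal Gaussian (mean, variance) fitted to difference vectors."""
    return differences.mean(axis=0), differences.var(axis=0) + 1e-6

def log_likelihood(diff, params):
    mean, var = params
    return -0.5 * np.sum(np.log(2 * np.pi * var) + (diff - mean) ** 2 / var)

def same_person_probability(diff, intra, extra, prior_same=0.5):
    """Posterior probability that a difference is intrapersonal (Bayes' rule)."""
    log_same = log_likelihood(diff, intra) + np.log(prior_same)
    log_other = log_likelihood(diff, extra) + np.log(1 - prior_same)
    return float(np.exp(log_same - np.logaddexp(log_same, log_other)))

rng = np.random.default_rng(0)
subjects = rng.normal(size=(50, 8))          # 50 synthetic identities

def photo(i):
    """A new noisy 'image' of subject i."""
    return subjects[i] + 0.2 * rng.normal(size=8)

# Training differences: same subject twice, and each subject against another.
intra = fit_gaussian(np.array([photo(i) - photo(i) for i in range(50)]))
extra = fit_gaussian(np.array([photo(i) - photo((i + 1) % 50) for i in range(50)]))

p_same = same_person_probability(photo(7) - photo(7), intra, extra)
p_other = same_person_probability(photo(7) - photo(20), intra, extra)
print(round(p_same, 3), round(p_other, 3))
```

Because the decision rests on a probability rather than a hard boundary, the two distributions can overlap: a difference that is plausible under both classes simply yields a probability nearer 0.5.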

All of these algorithms take appearance-based holistic approaches, looking at the gestalt of a face. This group of methods doesn’t tend to handle occlusion gracefully. It does, however, perform well when there are variations in expression and the presence of glasses.

Finally, elastic bunch graph matching, which is based on an algorithm developed at the University of Bochum (Germany) and University of Southern California (Los Angeles, CA), recognizes novel faces by first localizing a set of landmark features and then measuring similarity between these features. Similarity between novel images is expressed as a function of the similarity between corresponding facial landmarks. Compared to holistic approaches, feature-based methods are less sensitive to variations in illumination and viewpoint and to inaccuracy in face localization.
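The idea of scoring two faces by comparing them landmark by landmark can be sketched as follows. In the published method each landmark is described by a "jet" of Gabor filter responses and the landmark positions are found by an elastic fitting step; neither is reproduced here. The three-number vectors, the landmark names, and the simple averaging rule are stand-ins chosen for illustration.

```python
import numpy as np

def jet_similarity(jet_a, jet_b):
    """Normalized dot product between two landmark descriptors."""
    return float(jet_a @ jet_b / (np.linalg.norm(jet_a) * np.linalg.norm(jet_b)))

def graph_similarity(face_a, face_b):
    """Average the per-landmark similarities over landmarks both faces share."""
    shared = sorted(face_a.keys() & face_b.keys())
    return sum(jet_similarity(face_a[k], face_b[k]) for k in shared) / len(shared)

def make_face(left_eye, right_eye, nose_tip):
    return {"left_eye": np.array(left_eye),
            "right_eye": np.array(right_eye),
            "nose_tip": np.array(nose_tip)}

# Hypothetical descriptors; real jets hold dozens of filter responses.
reference = make_face([1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0])
same_person = make_face([0.9, 0.1, 0.0], [0.1, 0.9, 0.0], [0.0, 0.1, 0.9])
other_person = make_face([0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [1.0, 0.0, 0.0])

score_same = graph_similarity(reference, same_person)
score_other = graph_similarity(reference, other_person)
print(round(score_same, 3), round(score_other, 3))
```

Because each landmark is compared locally, a small shift of the whole face in the frame changes the score far less than it would in a pixel-by-pixel holistic comparison.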

New methods

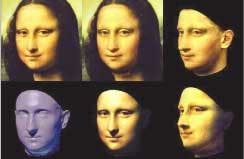

In 1999, Volker Blanz and Thomas Vetter presented a paper at SIGGRAPH (International Conference on Computer Graphics and Interactive Techniques; Los Angeles, CA) that described a way to create a “3-D morphable model” of a face from a single 2-D image (see photo). This method is useful for computer graphics, but has also found use in facial recognition, partly because it solves problems regarding pose and illumination (see Fig. 2). The morphable models first showed up in systems at FRVT 2002.

FIGURE 2. 3-D morphable models correct for pose variation in face recognition, significantly boosting recognition. The nonfrontal face images (top) are input to 3-D morphable models, which transform them into the bottom frontal images. Face-recognition performance was computed from both the original and morphed images. For the best performers in FRVT 2002, the average identification rate for left- and right-rotated faces was 30%. For the faces after transformation to a frontal view, the average identification rate was 72%. For up- and down-rotated faces, the average identification rates before and after transformation were 40% and 70%, respectively.

“From a 2-D image, a morphable model estimates the shape of a face,” Phillips explains. “We know that rotating a 3-D shape to a frontal view improves performance. From a practical point of view, it is very effective.”
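Once a 3-D shape has been estimated, turning it to face the camera is a plain rotation. The sketch below shows only that geometric step on three hypothetical landmark points; fitting the morphable model to a photograph, which is the hard part, is not shown, and the 30° yaw angle is assumed to be known.

```python
import numpy as np

def yaw_rotation(angle_deg):
    """Rotation matrix about the vertical (y) axis."""
    a = np.radians(angle_deg)
    return np.array([[np.cos(a), 0.0, np.sin(a)],
                     [0.0, 1.0, 0.0],
                     [-np.sin(a), 0.0, np.cos(a)]])

# Hypothetical 3-D landmarks (x, y, z) for a face looking at the camera.
frontal = np.array([[-3.0, 0.0, 0.0],    # left eye
                    [3.0, 0.0, 0.0],     # right eye
                    [0.0, -3.0, 2.0]])   # nose tip

turned = frontal @ yaw_rotation(30).T       # the head turned 30 degrees
recovered = turned @ yaw_rotation(-30).T    # rotate the estimated shape back

# In a 2-D image only x and y are visible, so the turned face looks narrower.
eye_gap_turned = abs(turned[1, 0] - turned[0, 0])
eye_gap_recovered = abs(recovered[1, 0] - recovered[0, 0])
print(round(eye_gap_turned, 3), round(eye_gap_recovered, 3))
```

The apparent distance between the eyes shrinks when the head turns and returns to its frontal value after the rotation is undone, which is why matching against frontal references works better on the corrected shape.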

The researchers haven’t tested to see whether the 3-D model is true to life. They are, however, looking into methods for capturing 3-D information directly. These include using a stripe of (incoherent) light across the face and stereo imaging. These methods provide both shape information and texture (the reflectance off parts of the face, which is the information available from 2-D images).

High-resolution images can also provide better facial recognition. Today’s consumer-grade cameras contain 5- to 6-megapixel imagers, which provide far more data than digital systems of the mid-1990s when many algorithms were developed. In the early tests, most of the faces had only 60 pixels between the centers of the eyes; today it’s not uncommon to have 250 pixels. The new data may provide better ways of recognizing faces.

Another possibility is to take multisamples, or multiple images, at the same time. An experiment in Australia’s Smartgate airport security program proposes using more cameras, rather than using either 3‑D imaging or high resolution. If this method works well, then it could be less expensive than the alternatives.

Systems to be tested in the FRVT later this year may meet the Grand Challenge using 3-D, high resolution, or multisampling. The new methods cope with illumination and pose difficulties, although recognizing faces outdoors is still a challenge. Other problems, such as recognizing a face from an image taken years before, have barely been addressed. Facial recognition needs to become more robust, but it is making steady progress with active research in the field and developments in standardization and testing.

FURTHER READING

W. Zhao et al., Face recognition: A literature survey, ACM Computing Surveys, 399 (December 2003).

Face Recognition: From Theory to Applications, ed. H. Wechsler et al., 3, Springer-Verlag (1998).

Handbook of Face Recognition, ed. Z. Li and A. K. Jain, Springer-Verlag (2004).

Face Recognition Vendor Test website at www.frvt.org and Evaluation of Face Recognition Algorithms website at www.cs.colostate.edu/evalfacerec