Multi-CPU architecture speeds ray tracing

Michael Gauvin and Donald Scott

Tracing rays is an inherently parallel activity because each ray is a statistically independent entity. In fact, for many years it has been common practice for optical engineers to divide a long ray trace involving a large number of rays into a set of smaller calculations involving fewer rays, and then to run the calculations on multiple computers. Splitting the ray trace and recombining results is time-consuming and error-prone, however, and is only feasible if several computers are accessible and available.

A potential solution is to merge several computers onto a single chip, putting the equivalent of multiple computers on the desktop. This is exactly what Intel (Santa Clara, CA) and AMD (Sunnyvale, CA) have done with their latest dual-core and quad-core CPUs.

The battle for speed

Almost 20 years ago, the first generations of Intel processors used a separate coprocessor chip to handle floating-point operations. In the 486 architecture, the mathematical coprocessor was integrated into the 486 chip. Now Intel and AMD chips use a multiple-core architecture in which a single processor chip can have two or more “execution cores,” or computational engines; each core acts as a CPU with its own resources and the ability to use any and all resources in the computer. Looking ahead, AMD and Intel are promising hundreds of processors on a single die, possibly speeding engineering tasks by a factor of 100 or more.

In recent months, prices have come down to the point that desktop computers with multiple CPUs, each of them multicore (two to four cores), are becoming more affordable. As multicore and multiple-CPU computers become commonplace, software developers, ever interested in exploiting the latest hardware technologies, are writing code to take advantage of this new capability.

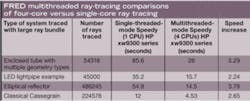

Because of this trend, some optical-engineering programs, such as FRED from Photon Engineering, now incorporate multithreaded algorithms. These make it possible to run a separate ray trace on each core and to accelerate ray tracing by a factor almost equal to the number of cores and CPUs in the computer, minus the overhead associated with creating and managing the threads. In four different actual systems, multithreaded algorithms sped up ray tracing by factors of 2.24 to 3.79 over single-threaded, single-core ray tracing (see table).


Two considerations

The basic idea behind multithreading is to allow multiple threads of execution to perform computations simultaneously and in a manner transparent to the user, provided they don’t conflict in accessing shared resources. The challenge for the programmer is to divide the calculation into minimally conflicting threads. Two considerations arise in the implementation, however.
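Before turning to those considerations, the basic division of work can be sketched in a few lines. The example below is only an illustration, not the algorithm used by any particular program: `trace_ray` is a hypothetical stand-in (an ideal thin lens acting on a ray parallel to the axis), and `split` and `trace_parallel` are names invented here. It divides the rays into one contiguous chunk per worker, traces each chunk in its own thread, and recombines the results in the original order. In standard CPython the interpreter lock keeps threads from running this arithmetic truly in parallel, so the sketch shows the structure rather than the speedup a compiled implementation would deliver.

```python
from concurrent.futures import ThreadPoolExecutor


def trace_ray(height_mm, focal_length_mm=100.0):
    """Toy trace (assumption): slope of an axis-parallel ray after an ideal thin lens."""
    return -height_mm / focal_length_mm


def trace_chunk(heights):
    """Trace every ray in one chunk; rays are independent of each other."""
    return [trace_ray(h) for h in heights]


def split(items, parts):
    """Divide items into `parts` contiguous chunks whose sizes differ by at most one."""
    size, extra = divmod(len(items), parts)
    chunks, start = [], 0
    for i in range(parts):
        stop = start + size + (1 if i < extra else 0)
        chunks.append(items[start:stop])
        start = stop
    return chunks


def trace_parallel(heights, workers=4):
    """Trace one chunk per worker thread and recombine results in input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(trace_chunk, split(heights, workers)))
    return [slope for chunk in results for slope in chunk]


heights = [0.1 * i for i in range(1000)]
serial = trace_chunk(heights)
parallel = trace_parallel(heights, workers=4)
```

Because `pool.map` returns results in the order the chunks were submitted, the recombined list matches the single-threaded result element for element, which removes the manual bookkeeping that makes splitting a trace across several computers error-prone.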

The first consideration is thread-local variables. Rays are propagated through the same geometry, so they share the system’s description, including geometry, materials, coatings, and so on. While the system physically doesn’t change during the ray trace, some of its internal quantities can change, raising the possibility of conflict. For example, the refractive index of a material computed for the last wavelength traced can be stored so that it need not be recalculated if the next ray has the same wavelength. To avoid conflict, each thread can store its own refractive-index value; in this case the refractive index is said to be “thread local.”

The second consideration, locking, involves situations in which a conflict between the threads can be harder to avoid. For example, new rays can be created during the trace when a ray-splitting operation takes place (as in the case of one ray intersecting a surface to produce a reflected and a refracted ray). These new rays are added to the ray buffer so they can eventually be traced, but they can be traced by a thread other than the one that created them. It is important to ensure that only one thread is adding to (or accessing) the ray buffer at a time. This can be accomplished by putting “locks” around the few critical sections of code where the ray buffer is being changed or accessed by a thread. An alternative approach might be for each thread to have its own “thread-local” ray buffer, but this can cause load-balancing problems.
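The locking idea can be sketched with Python's standard `threading.Lock`. Everything here is an assumption made for illustration: the `RayBuffer` class, the `worker` function, and the representation of a ray as a single integer (its split generation) are hypothetical, and the "trace" simply splits each ray in two until a maximum generation is reached. The buffer also counts rays that are currently being traced, so that an idle thread does not quit while another thread may still add rays.

```python
import threading
import time


class RayBuffer:
    """Shared ray buffer; every access happens inside one lock."""

    def __init__(self, rays):
        self._lock = threading.Lock()
        self._rays = list(rays)
        self._in_flight = 0  # rays taken by a thread but not yet finished

    def take(self):
        """Return (ray, done); ray is None when nothing is available right now."""
        with self._lock:
            if self._rays:
                self._in_flight += 1
                return self._rays.pop(), False
            return None, self._in_flight == 0

    def finish(self, children):
        """Add any rays created by a split and mark the parent ray as traced."""
        with self._lock:
            self._rays.extend(children)
            self._in_flight -= 1


def worker(buffer, max_splits, counts, index):
    traced = 0
    while True:
        ray, done = buffer.take()
        if ray is None:
            if done:
                break
            time.sleep(0)  # yield; another thread may still add rays
            continue
        traced += 1
        # Toy split: a reflected and a refracted ray of the next generation.
        children = [ray + 1, ray + 1] if ray < max_splits else []
        buffer.finish(children)
    counts[index] = traced  # each thread writes only its own slot


buffer = RayBuffer([0] * 10)  # ten source rays at generation 0
counts = [0] * 4
threads = [threading.Thread(target=worker, args=(buffer, 3, counts, i)) for i in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
total_traced = sum(counts)
```

Each source ray produces 1 + 2 + 4 + 8 = 15 rays over three splits, so the four threads together trace 150 rays regardless of which thread picks up which ray. The per-thread counts vary from run to run, which is the load balancing that a single shared buffer provides and that per-thread buffers would have to arrange separately.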

Applications

Tracing millions of rays in illumination systems and analyzing stray light for many off-axis angles are major applications in which multithreaded ray tracing can yield substantial dividends.

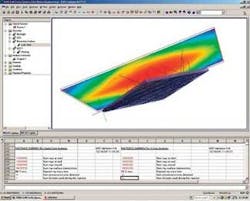

A user simulating an LED backlight for uniformity issues, for instance, can trace 1 million rays in 1.5 minutes on a single core (see Fig. 1). Simulating this same system using multithreaded algorithms and a quad-core computer accelerates this ray trace by a factor of 2.75, or 72% efficiency per core.
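As a quick check of what that speedup means in wall-clock terms, the arithmetic can be written out. The function name `parallel_time` is simply a label chosen here; the inputs are the single-core time and speedup factor quoted above.

```python
def parallel_time(serial_seconds, speedup):
    """Wall-clock time after dividing the single-core time by the speedup factor."""
    return serial_seconds / speedup


serial_seconds = 1.5 * 60  # 1.5 minutes on a single core
parallel_seconds = parallel_time(serial_seconds, 2.75)
print(f"{parallel_seconds:.1f} s")  # about 32.7 s
```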

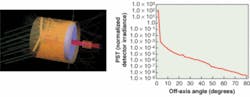

Stray-light studies, in which a source is placed around the entrance aperture of a telescope at multiple zenith and azimuth angles, are another primary application for multithreading. Computing a full point-source-transmittance (PST) curve, which characterizes the off-axis rejection properties of a system, can take days when 100,000 rays are traced at one-degree steps in zenith angle and at azimuth angles of 0°, 45°, 90°, 135°, and 180°. Doing this same calculation on a machine that has four cores reduces the time required, in the case of a classical Cassegrain, for instance, by a factor of 2.65.


MICHAEL GAUVIN is vice president of sales and marketing and DONALD SCOTT is senior software developer at Photon Engineering, 440 South Williams Blvd., Suite 106, Tucson, AZ 85711; www.photonengr.com.