Image processing for remote sensing needs more than software

During the past 30 years, image-processing software has blossomed into an essential tool in remote-sensing applications, and its uses continue to grow both in number and diversity. As far back as 15 years ago, the first commercial books appeared to help earth scientists keep up with the improvements that computers were bringing to the discipline of remote sensing.

Computer capabilities have continued to grow at an almost dizzying pace, however, and the author of one of those first publications to break the commercial ice has written another book. The new book, Remote sensing: models and methods for image processing (Academic Press, San Diego, CA, 1997), doesn't present a new method, says its author Robert A. Schowengerdt, an associate professor in the electrical and computer engineering department at the University of Arizona (Tucson, AZ). It simply recommends a way of approaching image processing that retains the connections between the sensing algorithm and the physics of the environment that is being sampled.

The growing availability of robust algorithms for remote-sensing applications makes it easy to do things without thinking, Schowengerdt says. Thus the focus of his book is to get the reader to think about algorithms and use them more intelligently and more effectively (see "Old technique improves new algorithms").

Remembering the good old days

Using computer algorithms intelligently was not even an option during the Apollo earth-orbiter missions of the 1960s. At that time, the imaging systems consisted of multiple Hasselblad cameras, each fitted with a spectral filter for a particular frequency band, says Schowengerdt, who worked as a graduate student on one of those missions. Multispectral images were obtained by superimposing the negatives optically to form a composite. Digital processing didn't arrive until the early 1970s with Landsat, which placed the first civilian multispectral scanner into earth orbit. Despite Landsat's global coverage and digital images, most of the people who were interested in the data still had to examine them in the form of NASA photographs.

By the late 1970s, a number of government and university sources were developing remote-sensing software. Much of the stimulus and funding came from the agricultural need to monitor crops and to predict yields as early as possible in the growing season. In the 1980s, mainframe computers gave way to minicomputers, and software from research sites such as the Jet Propulsion Laboratory (Pasadena, CA), US Geological Survey (Denver, CO), and Purdue University (West Lafayette, IN) became available in the public domain. Even with the switch to minicomputers, most of the programming was still done in Fortran, and most of the processing was still done in batch mode.

Affordable interactive processing was ushered in during the late 1980s with the arrival of PCs and Macintoshes. The early 1990s also brought Unix workstations and improvements in desktop systems. Subsequently, a lot of effort was invested in porting existing software libraries into languages and interfaces compatible with the new hardware.

Researchers at Purdue developed MultiSpec, a multispectral package for desktop use that focuses on mapping and classification. Schowengerdt's group at Arizona developed a complementary desktop package, MacSADIE, for image processing and enhancement. While these, along with similar programs and graphical user interfaces such as Tcl/Tk, are generally available on the Internet either for free or for a nominal fee, they are not competitive with serious commercial offerings for image processing, says Schowengerdt. Older, established general-purpose commercial software that has been available for image processing since the 1980s includes ERDAS IMAGINE (Atlanta, GA), ER Mapper (San Diego, CA), and PCI EASI/PACE (Toronto, Canada). Newer commercial software is also adding capabilities such as hyperspectral imaging and customizable modularity (see "Software to suit every taste").

Finding room for the new stuff

Hyperspectral image processing is possible with a public package called SIPS, created at the University of Colorado (Boulder, CO), or with a commercial package called ENVI, from Research Systems, which is also in Boulder. Hyperspectral imaging provides almost continuous spectral viewing based on a dispersive element that acts like a grating or prism, Schowengerdt says.

Two-dimensional spatial imaging is performed by taking a line of pixels along the ground instantaneously in all spectral bands. One axis represents the spatial pixels and the other represents the spectral dimension. As the sensor moves, it generates a lot of data—more than 100 megabytes per image. "This is one of the most-promising systems now because of the high spectral resolution," says Schowengerdt. Data-acquisition packages that are even more powerful have been developed for government use, and the difficult task for most users will be in sorting through and managing tremendous quantities of available data.
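The line-by-line acquisition described above can be sketched in a few lines of Python with NumPy. This is only an illustration: the function names (`stack_frames`, `cube_size_bytes`) are hypothetical, and the scene dimensions (512 scan lines, 614 pixels per line, 224 bands, 2 bytes per sample) are assumed values chosen to show how an image can exceed 100 megabytes; they are not taken from any sensor discussed in the article.

```python
import numpy as np

def cube_size_bytes(n_lines, n_pixels, n_bands, bytes_per_sample=2):
    """Storage needed for a hyperspectral cube, in bytes."""
    return n_lines * n_pixels * n_bands * bytes_per_sample

def stack_frames(frames):
    """Stack per-line frames into a cube.

    Each frame is one line of ground pixels recorded in all bands at once,
    with shape (n_pixels, n_bands). Stacking along a new first axis gives
    a cube of shape (n_lines, n_pixels, n_bands).
    """
    return np.stack(list(frames), axis=0)

# Tiny synthetic example: 3 scan lines, 8 pixels per line, 5 spectral bands.
rng = np.random.default_rng(0)
frames = [rng.integers(0, 4096, size=(8, 5), dtype=np.uint16) for _ in range(3)]
cube = stack_frames(frames)
print(cube.shape)  # (3, 8, 5)

# The full spectrum of one ground pixel is a slice along the band axis.
spectrum = cube[1, 4, :]

# Assumed full-scene dimensions (illustrative only).
size_mb = cube_size_bytes(512, 614, 224) / 1e6
print(f"{size_mb:.1f} MB")
```

Keeping the spectral axis last means that the spectrum of any pixel is contiguous in memory, which suits per-pixel spectral analysis; other layouts trade that for faster access to single-band images.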

Despite all of the improvements to date, as well as continued research in the area of neural networks, tools for processing the quantities of data produced by hyperspectral imaging systems are still pretty crude, Schowengerdt says. It's analogous to the situation for multispectral data-processing tools in the early 1980s. Progress in this area has been slow in the last few years, because so much energy has been diverted to the task of handling the increasing amounts of data that are already available.

Rummaging through libraries

Another area of software development is the growth of modularity. In his book, Schowengerdt describes software packages such as PV-WAVE from Visual Numerics (Boulder, CO), IDL from Research Systems, and MATLAB from The MathWorks (Natick, MA) as libraries that toolbox programs such as ENVI, in the case of IDL, or the Image Processing Toolbox, in the case of MATLAB, call upon for special image-processing applications.

The toolboxes have an advantage over the more-traditional programming methods provided by ERDAS and ER Mapper—they offer the ability to quickly build applications. The more-modular methods can be limited by the contents of the original library, but with programs like IDL and MATLAB, the customer has access to the source code and can write his or her own routines, making the software easy to upgrade, Schowengerdt says.

Source code for ERDAS and ER Mapper is proprietary, so the companies must provide assistance for users who want to write new routines. But these traditional programs can provide a more natural programming feel because they are not broken into modules. Ultimately, a major aspect of choosing a programming environment will depend upon how individual preferences and needs dictate the compromise that must be made between modularity and integration.

New approach to software

Over the long run, the commercial programs are likely to become more modular. Yet the need to maintain the natural feel and understanding in an algorithm-heavy environment was one of the motivating factors that led Schowengerdt to rewrite his original book completely rather than just revise it. The early books were written for electrical engineers, not for earth scientists, who generally didn't have the same mathematics and programming background. Schowengerdt's first book, Techniques for image processing and classification in remote sensing, published in 1983, was written to introduce earth scientists to the technical aspects of image processing.

Due in large part to the tremendous increase in algorithmic capabilities since the publication of that book, Schowengerdt shifted his perspective to counter the notion that technically oriented people might be required to rely too heavily on software. Just as earth scientists often don't have the mathematics background necessary to understand algorithms, engineers don't always have an appreciation of the physics, he says. "In writing this book, I tried to cross the boundary and provide something of value to both."

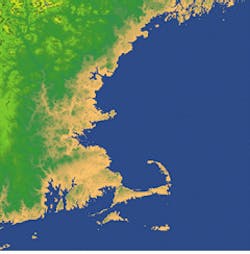

Image-processing software allows images such as this one of Cape Cod to be obtained for satellite control systems using CCD cameras instead of more expensive, specialized sensors.

Old technique improves new algorithms

A technique developed in the early 1980s to improve image processing through understanding of physical properties is finding application today in remote sensing. The technique, which turns on an equation for computing radiance at a sensor, suppresses signals from topographic shading on image pixels that act as noise when calculating band ratios of radiance.

The equation for radiance at a sensor is

$$L_\lambda(x,y) = A_\lambda\,\rho(x,y,\lambda)\cos[\theta(x,y)] + B_\lambda$$

where $L_\lambda$ = radiance at the sensor, $\lambda$ is the wavelength, x and y refer to the surface topography, $A_\lambda$ is a proportional constant, $\rho$ is the reflectance at the surface being measured, $\theta$ is the angle between the vector normal to the measured surface at each point and the vector of incident sunlight, and $B_\lambda$ is the atmospheric path radiance. The value for $B_\lambda$ differs from band to band and is greater toward blue, due to atmospheric scattering.

While it is possible to calculate band ratios between two different bands, $\lambda_1$ and $\lambda_2$, through simple signal-processing algorithms, the result will be imprecise, because differences in the $B_\lambda$ terms will prevent the cosine terms from canceling. An understanding of the physics, however, allows one to estimate path radiance for each wavelength and thereby subtract out the appropriate $B_\lambda$ term in each equation before taking the ratio.
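The effect of the path-radiance term can be seen in a small numerical sketch, written here in Python with NumPy. Everything in it is assumed for illustration: the function name `band_ratio`, the synthetic shading pattern, and the constants chosen for the gain, reflectance, and path radiance in each band. The path-radiance values are simply taken as known; how they are estimated in practice is outside the scope of this sketch.

```python
import numpy as np

def band_ratio(band1, band2, path1=0.0, path2=0.0, eps=1e-12):
    """Ratio of two radiance images after subtracting path radiance.

    path1 and path2 are the estimated path-radiance offsets for each band.
    With the defaults (0.0) this is the naive ratio. eps guards against
    division by zero in fully shaded pixels.
    """
    num = np.asarray(band1, dtype=float) - path1
    den = np.asarray(band2, dtype=float) - path2
    return num / np.maximum(den, eps)

# Synthetic 2 x 2 scene of uniform material under varying topographic shading.
cos_theta = np.array([[1.0, 0.8],
                      [0.5, 0.2]])

# Assumed per-band constants (hypothetical values).
A1, A2 = 100.0, 80.0      # proportionality constants
rho1, rho2 = 0.3, 0.6     # surface reflectance in each band
B1, B2 = 12.0, 4.0        # path radiance, larger in the bluer band

# Radiance at the sensor in each band, following the equation above.
L1 = A1 * rho1 * cos_theta + B1
L2 = A2 * rho2 * cos_theta + B2

naive = band_ratio(L1, L2)               # shading leaks into the ratio
corrected = band_ratio(L1, L2, B1, B2)   # cosine terms cancel

print(naive)
print(corrected)
```

In the corrected ratio every pixel takes the same value, (A1 · rho1) / (A2 · rho2), regardless of shading, whereas the naive ratio varies from pixel to pixel even though the surface material is identical everywhere.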

Software to suit every taste

Users of image-processing software for remote-sensing applications can be opinionated about their preferences for certain software packages. Both IDL and MATLAB are available at the Johns Hopkins University Applied Physics Laboratory (Baltimore, MD), said Nicholas Beser, a senior member of the professional staff. Some supervisors insist strongly on using one, while others insist strongly on using the other.

"I honestly believe we're looking at almost a religious issue," said Beser, who could point out significant advantages for both programs. Beser's own preference for MATLAB actually grew out of a period of a couple of months when he was homebound due to a spinal injury, and a PC-based version of MATLAB was the only program that he had available for continuing his work. "My only outlet in terms of doing work was a laptop that had MATLAB on it," he said. "So while I was at home, I did the beta testing of the Image Processing Toolbox."

Steve Mah, vice president of ITRES Research (Alberta, Canada), prefers IDL-based ENVI because of the "robust algorithms," as well as the hyperspectral imaging for mapping of forest resources for private companies and government agencies in British Columbia. For data fusion and GIS integration, however, Mah tends to use PCI EASI/PACE. While the PCI program is older and more established in some areas, the newer ENVI is developing rapidly and becoming quite powerful, he said.

Richard Paluszek, president of Princeton Satellite Systems (Princeton, NJ), said that a lot of the preference for software systems depends on an individual's preferred work style. Paluszek designs attitude-control systems for satellites and prefers working with the MATLAB toolboxes because he'd rather write code than use the block diagrams that come with other packages. The data structures provided with the newly released MATLAB 5 allow him to work with complicated collections of data and pass them to a function using only one parameter instead of several, he said.

About the Author

Hassaun A. Jones-Bey

Senior Editor and Freelance Writer

Hassaun A. Jones-Bey was a senior editor and then freelance writer for Laser Focus World.