OPTOELECTRONIC APPLICATIONS: DEFENSE & SECURITY - Intelligent video makes real-time surveillance a reality

For years, security guards in movies and on television have often been portrayed as bumbling fools or sleepy senior citizens. Truth is, sitting in front of a bank of video monitors for hours at a time is a mind-numbing job. It is rife with opportunities for “the bad guys” to get away with nefarious acts, and it leaves law enforcement with hours of tape to slog through after the fact as they search for clues to “who dunnit” and what “it” is.

But the marriage of analog and digital video with artificial intelligence is changing this scenario. From homeland security to home-based surveillance, the use of intelligent-video (IV) systems is proliferating worldwide. In addition to the increased emphasis on homeland security since 9/11, the IV surveillance trend is being fueled by innovations in machine vision, digital cameras, image sensors, computers, and data-processing software (see “Artificial intelligence + cameras = intelligent video”).

“(Traditional) closed-circuit television operators are overloaded with video content that cannot be effectively monitored,” said Simon Harris, senior analyst with IMS Research (Wellingborough, England), which is forecasting the world market for video-content analysis to grow from $68 million in 2004 to $839 million in 2009. “Experiments have shown that after 22 minutes, operators miss up to 95% of all scene activity. We need intelligent video to improve the effectiveness of surveillance systems and ease the burden on the operators.”

For example, in addition to monitoring the comings and goings at a particular site, IV systems can detect a threat and trigger an alarm or send a video clip of the incident to a responder, prompting them to take action. These systems can also be programmed to sound an alarm when a person or vehicle enters a restricted zone or when a briefcase or other object is left unattended. In fact, one of the biggest differences between a conventional closed-circuit TV (CCTV) system and an IV system is the ability to perform content analysis.
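The unattended-object rule described above can be thought of as a per-object timer: an object that stays put with no person nearby for longer than a set time raises an alarm. The sketch below is a minimal illustration under stated assumptions, not any vendor's method. It assumes an upstream tracker already reports positions of stationary objects and of people, and the class name `LeftObjectMonitor`, the 2-m proximity radius, and the 60-s threshold are all hypothetical.

```python
import math
from dataclasses import dataclass, field


@dataclass
class LeftObjectMonitor:
    """Hypothetical left-object rule: alarm when a stationary object has had
    no person within `owner_radius` metres for `max_unattended_s` seconds."""
    max_unattended_s: float = 60.0   # assumed alarm threshold, seconds
    owner_radius: float = 2.0        # assumed "attended" radius, metres
    _unattended_since: dict = field(default_factory=dict)

    def update(self, t, objects, people):
        """objects: {object_id: (x, y)} for stationary objects at time t.
        people: list of (x, y) positions of tracked persons.
        Returns the ids of objects that should raise an alarm."""
        alarms = []
        for oid, (ox, oy) in objects.items():
            attended = any(math.hypot(ox - px, oy - py) <= self.owner_radius
                           for px, py in people)
            if attended:
                # Someone is close by: reset this object's timer.
                self._unattended_since.pop(oid, None)
                continue
            # Start the timer the first time the object is seen alone.
            start = self._unattended_since.setdefault(oid, t)
            if t - start >= self.max_unattended_s:
                alarms.append(oid)
        # Forget objects no longer reported (picked up or moved away).
        for oid in list(self._unattended_since):
            if oid not in objects:
                del self._unattended_since[oid]
        return alarms
```

Calling `update` once per analyzed frame is enough: a bag whose owner stands beside it never alarms, while the same bag left alone for a full minute does.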

“You want video not just to respond or enable after-event analysis—you want to be able to prevent or deter a terrorist act because you detected individuals in a precursor surveillance mode or early stages of an operation,” said David Stone, retired U.S. Navy rear admiral and former assistant secretary of homeland security for the U.S. Transportation Security Administration. “Intelligent video is raising the bar on what security can do, plus reducing the need for personnel.”

Even with an analog IV system, the acquired data is considered “metadata”: it can be stripped of redundancies and irrelevant content. This ability is important because in video surveillance, valuable security information is usually dwarfed by background elements, whether static (such as buildings and roads) or dynamic (such as wind and waves). IV algorithms can filter out this background noise, allowing the software to focus on suspicious people or vehicles.
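Filtering out the background is commonly done with background subtraction. The sketch below uses a simple running-average model, one of the most basic such techniques and not necessarily what any product in this article uses. Pixels that differ from the learned background by more than a threshold are marked as foreground. The learning rate `alpha` and the threshold of 25 gray levels are assumed values, and the class name is hypothetical. A model this simple absorbs slow static changes such as daylight; dynamic clutter such as waves usually calls for richer per-pixel models (for example, Gaussian mixtures).

```python
from typing import List

Frame = List[List[float]]  # grayscale intensities, 0-255


class RunningAverageBackground:
    """Minimal background-subtraction sketch (running-average model).

    Pixels close to the learned background are treated as scene clutter;
    pixels differing by more than `threshold` are flagged as foreground
    for later analysis (e.g. person or vehicle classification)."""

    def __init__(self, first_frame: Frame, alpha: float = 0.05,
                 threshold: float = 25.0):
        self.alpha = alpha          # assumed learning rate
        self.threshold = threshold  # assumed intensity-difference threshold
        self.background = [row[:] for row in first_frame]

    def apply(self, frame: Frame) -> List[List[bool]]:
        """Return a foreground mask and update the background model."""
        mask = []
        for r, row in enumerate(frame):
            mask_row = []
            for c, value in enumerate(row):
                bg = self.background[r][c]
                is_foreground = abs(value - bg) > self.threshold
                mask_row.append(is_foreground)
                if not is_foreground:
                    # Blend only background pixels into the model, so slow
                    # lighting changes are absorbed while a person standing
                    # still is not immediately learned away.
                    self.background[r][c] = ((1 - self.alpha) * bg
                                             + self.alpha * value)
            mask.append(mask_row)
        return mask
```

Only the `True` pixels in the returned mask need to be passed on to the more expensive people- and vehicle-recognition stages.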

“The software can be tailored to trigger the pager or screen to immediately highlight an area that needs to be looked at or responded to, such as a certain movement, or more than one individual going through a door or facility after someone uses a biometric or code,” said Stone. “It has to be written to reflect the real-time queuing needs of the location so when it ‘sees’ a certain movement or activity, it can signal the operations center to put a person on it.”

Global applications

Today, 2-D and 3-D IV systems are being used by the military in Iraq and Afghanistan for perimeter surveillance, as well as at shipping ports, airports, rail yards, and subways; hospitals, hotels, theme parks, and sporting facilities; oil refineries; and corporate and residential buildings. Security personnel at airports in San Francisco, Salt Lake City, and San Diego, for example, have implemented a software package from Vidient Systems (Sunnyvale, CA) that enables their video surveillance systems to monitor what the cameras are seeing and flag suspicious events (see Fig. 1).

“What the system does first is look through the camera and draw a picture of the scene as it is ‘normal,’ given the time of day,” said Brooks McChesney, president and CEO of Vidient Systems. “It then can begin to understand how the scene changes over time, whether rain or snow or bright sunlight or night, and draws a map of the normal course of events and where objects should be. So, say you have a system focused on the Mona Lisa. You would want to know if someone moves that picture or is standing there longer than the allotted time or leaves a bag or is sneaking around or goes out the wrong way . . . our system can detect these things and distinguish the correct flow from incorrect.”
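McChesney's description of learning what is “normal” for a given time of day can be illustrated with a per-hour statistical baseline. The sketch below is a hypothetical illustration, not Vidient's algorithm. It keeps a running mean and variance (Welford's method) of an activity measure, such as the fraction of foreground pixels, separately for each hour. An observation more than `z_threshold` standard deviations from that hour's norm is flagged. The class name, the activity measure, and both thresholds are assumptions.

```python
import math
from collections import defaultdict


class HourlyActivityBaseline:
    """Hypothetical 'normal scene' model: running mean/variance of an
    activity measure for each hour of the day, used to flag outliers."""

    def __init__(self, z_threshold: float = 3.0, min_samples: int = 10):
        self.z_threshold = z_threshold  # assumed alarm threshold (std devs)
        self.min_samples = min_samples  # don't judge an hour until learned
        # Per-hour Welford accumulators: [count, mean, sum of sq. deviations]
        self.stats = defaultdict(lambda: [0, 0.0, 0.0])

    def learn(self, hour: int, activity: float) -> None:
        """Fold one observation into that hour's running statistics."""
        s = self.stats[hour]
        s[0] += 1
        delta = activity - s[1]
        s[1] += delta / s[0]
        s[2] += delta * (activity - s[1])

    def is_abnormal(self, hour: int, activity: float) -> bool:
        """True if activity is far outside what is normal for this hour."""
        n, mean, m2 = self.stats.get(hour, (0, 0.0, 0.0))
        if n < self.min_samples:
            return False
        std = math.sqrt(m2 / (n - 1))
        if std == 0:
            return activity != mean
        return abs(activity - mean) / std > self.z_threshold
```

Because each hour has its own statistics, a crowd that is routine at noon can still be flagged as abnormal at 3 a.m.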

Intelligent-video technology is also playing a role in Chicago’s “Operation Virtual Shield”; the city is laying hundreds of miles of optical fiber and placing cameras in multiple locations to feed video into a central review and processing facility for real-time surveillance. On an even grander scale, IV is at the heart of “Secure Border Initiative-Net,” a surveillance system being deployed along the U.S. borders. A key feature of Secure Border will be video cameras with infrared, low-light capabilities, and other “intelligent” features, such as detectors for heat, motion, and biological and chemical agents.

The specific security requirements determine what rule sets are programmed into an IV system. In airports and other public-transit hubs, for instance, the most common events an IV system looks for are “tailgating” (one person following another through a door) and suspicious objects left in secure areas. Vidient’s SmartCatch system enables a camera to detect whether two people pass through an open door.

“In airports the primary thing is access control,” McChesney said. “Maintenance people go around the airport and collect trash and then have to dump it in a centralized location. So being able to see two moving objects going through a door when only one human is allowed becomes a tricky thing. The ability to discern between a human and a trash can is important because in the security world false alarms are worse than no alarm at all.”
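The tailgating rule and the human-versus-trash-can distinction can be combined in a few lines. The sketch below is a hypothetical illustration, not the SmartCatch implementation. It assumes an access-control system reports grant times and an upstream classifier labels each object crossing the doorway. An alarm fires only when more than one object labeled `"person"` crosses within an assumed 5-s window after a single grant. The function and class names, the labels, and the window are assumptions. For simplicity, each grant is checked independently, so closely spaced grants can share crossings.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Crossing:
    t: float      # time the tracked object crossed the doorway, seconds
    label: str    # class from an upstream classifier, e.g. "person", "cart"


def tailgating_alarms(grants: List[float], crossings: List[Crossing],
                      window_s: float = 5.0) -> List[float]:
    """Return the times of access grants followed by more than one person
    crossing within `window_s` seconds. Non-human objects (carts, bins)
    are ignored, so a worker pushing a trash cart does not trigger a
    false alarm. The window length and labels are assumptions."""
    alarms = []
    for g in grants:
        people = [c for c in crossings
                  if c.label == "person" and g <= c.t <= g + window_s]
        if len(people) > 1:
            alarms.append(g)
    return alarms
```

For example, a single badge swipe followed by one person and one trash cart yields no alarm, whereas one swipe followed by two people half a second apart yields one.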

Upping the ante

Other types of installations require more-complex rule sets. For example, Spain’s National High-Speed Railway is using ObjectVideo VEW, an automated surveillance system from ObjectVideo (Reston, VA), to monitor video streams for suspicious activities, events, or behaviors and to trigger the appropriate response. The railway’s security personnel can set rules using virtual “areas of interest.”

“Imagine a standard closed-circuit television system but you’re adding a layer of intelligence somewhere in the system that is watching those video feeds for you,” said Alan Lipton, chief technology officer at ObjectVideo. “As a user, you specify what constitutes your security policy or a threat to your environment and then direct the system to sound an alarm if something important is detected.”
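A rule built on a virtual area of interest typically reduces to testing whether a detected object lies inside a polygon drawn on the camera image. The sketch below uses the standard ray-casting point-in-polygon test. It is a generic illustration, not ObjectVideo's API. The `ZoneRule` structure, the object-type labels, and the “track area” zone are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]


def point_in_polygon(p: Point, polygon: List[Point]) -> bool:
    """Ray casting: count edges crossed by a horizontal ray from p toward
    +x; an odd count means p is inside the polygon."""
    x, y = p
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):
            # x coordinate where this edge crosses the ray's height
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


@dataclass
class ZoneRule:
    name: str
    polygon: List[Point]            # area of interest in image coordinates
    object_types: Tuple[str, ...]   # object classes that violate the rule


def evaluate_rules(rules: List[ZoneRule],
                   detections: List[Tuple[str, Point]]) -> List[str]:
    """Return 'rule: type' alerts for detections that violate a rule."""
    alerts = []
    for rule in rules:
        for obj_type, position in detections:
            if (obj_type in rule.object_types
                    and point_in_polygon(position, rule.polygon)):
                alerts.append(f"{rule.name}: {obj_type}")
    return alerts
```

Keeping the rules as data, separate from the detection code, lets security staff redraw a zone or change which object types matter without touching the analytics themselves.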

Neptec (Houston, TX) is taking a different approach, leveraging its expertise in 3-D vision technologies in the space industry to enhance surveillance in security and industrial applications. Neptec uses laser-based sensors and cameras embedded with proprietary triangulation algorithms to make measurements of the shape and placement of an object in an environment (see Fig. 2). According to Iain Christie, vice president of business development at Neptec, this approach is not an alternative to IV but an adjunct, especially for security applications involving mobile robotic platforms for air and ground reconnaissance.

“Our approach is not about making pictures but increasing the information content of the data stream,” Christie said. “Because you are not dealing with light, intelligent 3-D defeats the problem of perspective. In conventional imaging, if you move the sensor, the scene looks totally different; none of the shapes have changed, but the picture has. Three-dimensional vision measures the shape every time, which overcomes this problem. It can also deal with depth perception and determine when something is behind something else and if the size has changed.”
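Neptec's algorithms are proprietary, but the basic geometry of laser triangulation is textbook material and shows why the result is a measured shape rather than a picture. In the simplest arrangement, assumed here, the laser beam runs parallel to the camera's optical axis, offset sideways by a baseline b. A spot at depth z then appears at an image offset x = f·b/z for focal length f (in pixels), so z = f·b/x. The function names and numbers below are illustrative only.

```python
import math


def triangulate_depth(baseline_m: float, focal_px: float,
                      offset_px: float) -> float:
    """Depth of a laser spot by simple triangulation.

    Assumed geometry: the laser beam is parallel to the camera's optical
    axis, displaced by `baseline_m`. A spot at depth z images at
    offset_px = focal_px * baseline_m / z, so z = focal_px * baseline_m / offset_px.
    """
    if offset_px <= 0:
        raise ValueError("spot offset must be positive")
    return focal_px * baseline_m / offset_px


def pixel_to_point(u_px: float, v_px: float, depth_m: float,
                   focal_px: float) -> tuple:
    """Back-project an image point (relative to the image centre) at a known
    depth into 3-D camera coordinates (pinhole camera model)."""
    return (u_px * depth_m / focal_px, v_px * depth_m / focal_px, depth_m)


def distance(p: tuple, q: tuple) -> float:
    """Euclidean distance between two 3-D points, in metres."""
    return math.dist(p, q)
```

Because each point comes back in metric 3-D coordinates, the distance between two points on an object stays the same wherever the sensor moves, which is the perspective independence Christie describes.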

Artificial intelligence + cameras = intelligent video

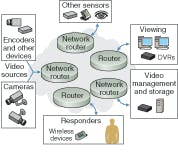

Intelligent-video (IV) systems come in a variety of configurations, from simple video motion detection to sophisticated object tracking and space recognition. At the hardware level, the components do not differ much from those in conventional video-surveillance systems: video cameras (analog or digital) for acquiring data, encoders and servers for data processing, routers for transport control, monitors for viewing, video recorders for storage, and some sort of software to manage the whole system (see figure).

A typical IV system first acquires raw pixel data from a camera or CCD imager. The camera converts this data into digital form for in-camera processing and into an analog signal for transmission to a capture card. There, the analog signal is digitized again so the computer can process it and apply the IV algorithms. By operating over existing Internet Protocol (IP) networks, each camera essentially becomes an intelligent Web image-acquisition device that can analyze and act upon the video data at any point in the network.

More recently, IV products that work with true digital CMOS IP cameras have been introduced, eliminating the analog-to-digital conversion step. For example, ObjectVideo has collaborated with Texas Instruments (Dallas, TX) to integrate its video-content analysis algorithms on TI’s DM64x digital-media processors, enabling analytical capabilities to reside directly on video cameras, DVRs, network encoders, and other video-management platforms. Because more intelligence is embedded in the cameras, they can be programmed to identify a target by type and then pan and zoom to provide more detailed and relevant information to those charged with reviewing and responding to the perceived events. Embedded analytics also reduce the need for a centralized server and other hardware and make better use of network bandwidth, because the camera can determine when an event of interest occurs and then transmit only the relevant data.
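The bandwidth saving from on-camera analytics comes from gating transmission on detected events. The sketch below is a hypothetical illustration of that idea, not the TI or ObjectVideo firmware. It assumes the on-camera analytics emit a per-frame event flag. Frames are sent only while an event is active, plus a few buffered frames before it (pre-roll) and after it (post-roll) so the responder sees context. The function name and the roll lengths are assumptions.

```python
from collections import deque
from typing import Iterable, List, Tuple


def event_gated_stream(frames: Iterable[Tuple[int, bool]],
                       pre_roll: int = 2, post_roll: int = 2) -> List[int]:
    """Return the indices of frames a smart camera would transmit.

    `frames` yields (frame_index, event_detected) pairs from on-camera
    analytics. Frames are sent while an event is active, plus up to
    `pre_roll` buffered frames before it and `post_roll` frames after it
    ends. All other frames stay on the camera."""
    buffer = deque(maxlen=pre_roll)  # most recent idle frames
    sent = []
    remaining_post = 0
    for idx, event in frames:
        if event:
            sent.extend(buffer)      # flush the lead-up frames first
            buffer.clear()
            sent.append(idx)
            remaining_post = post_roll
        elif remaining_post > 0:
            sent.append(idx)         # trailing context after the event
            remaining_post -= 1
        else:
            buffer.append(idx)       # idle: keep only a short history
    return sent
```

In a 10-frame clip with an event in frames 4 and 5, only frames 2 through 7 leave the camera; an uneventful stream sends nothing at all. The sketch ignores any periodic status or low-rate video a deployed system might also need to send.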

About the Author

Kathy Kincade

Contributing Editor

Kathy Kincade is the founding editor of BioOptics World and a veteran reporter on optical technologies for biomedicine. She also served as the editor-in-chief of DrBicuspid.com, a web portal for dental professionals.