Scalable quantum systems: Engineering modularity and connectivity

Quantum computing is poised to transform fields as diverse as materials science, pharmaceuticals, and secure communications. But the path to practical, commercially relevant quantum systems is defined by a central engineering challenge: scalability. The real value of quantum computing will be unlocked with large-scale, performant systems. For quantum technologies to move beyond small-scale demonstrations and into real-world deployment, architectures must be designed not only to scale up—by increasing the density and performance of quantum modules—but also to scale out to enable networking across distributed systems.

A commercial quantum challenge: Logical qubits at scale

Quantum computers use qubits as their basic unit of computation. There are multiple kinds of physical qubits, each with its own benefits and challenges, and to run algorithms they must be controlled and connected to one another. Because physical qubits are inherently fragile and error-prone, they must be combined into fault-tolerant structures known as logical qubits, which serve as the working computational units of a quantum computer.

The overhead of error correction is significant. Depending on the chosen code and the physical error rates, hundreds or even thousands of physical qubits may be required to create a single logical qubit. This means that a large fraction of the available qubits must be dedicated to error correction rather than to their primary role of executing quantum algorithms. Scalability is therefore a central challenge, both because error correction consumes so many resources and because most practical quantum algorithms demand millions of physical qubits. When so many qubits are tied up in error-correcting duties, reaching the scale needed for useful computation becomes even more difficult.
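To make the “hundreds or even thousands” figure concrete, the sketch below assumes the rotated surface code, a widely studied code chosen here purely for illustration (the text does not name a specific code). A distance-d patch uses d² data qubits plus d² − 1 measurement qubits, so the cost per logical qubit grows as 2d² − 1. The function name is hypothetical.

```python
def surface_code_physical_qubits(distance: int) -> int:
    """Physical qubits for one logical qubit in a rotated surface code patch.

    Illustrative assumption: the standard layout of distance**2 data qubits
    plus distance**2 - 1 ancilla qubits used for syndrome measurement.
    """
    if distance < 3 or distance % 2 == 0:
        raise ValueError("expected an odd code distance of at least 3")
    data_qubits = distance ** 2
    ancilla_qubits = distance ** 2 - 1
    return data_qubits + ancilla_qubits


if __name__ == "__main__":
    # Larger distances suppress logical errors further but cost more qubits.
    for d in (11, 17, 25):
        print(f"d={d}: {surface_code_physical_qubits(d)} physical qubits per logical qubit")
```

Distances in this range give roughly 240 to 1,250 physical qubits per logical qubit; the distance actually needed depends on the physical error rate and the target logical error rate.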

Commercially relevant quantum applications, such as drug design, materials science, and portfolio optimization, will require systems with at least 400 to 2,000 application-grade logical qubits, depending on the application. That number is likely to grow as use cases evolve and quantum capabilities are applied to new problems. Reaching this scale is not simply a matter of adding more physical qubits. It demands efficient error correction, high-fidelity qubits, and architectures that can grow in both density and connectivity.
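A back-of-the-envelope budget shows how logical-qubit targets turn into very large physical-qubit counts. The sketch below multiplies the 400 to 2,000 logical qubits cited above by two hypothetical overheads at the low and high ends of the “hundreds or even thousands” range. It deliberately ignores extra qubits for routing, magic-state preparation, and other fault-tolerant machinery, so real budgets would be larger.

```python
def physical_qubit_budget(logical_qubits: int, physical_per_logical: int) -> int:
    """Rough physical-qubit count: logical qubits times per-logical overhead.

    Intentionally simplified: ignores routing, magic-state factories,
    and spare qubits.
    """
    if logical_qubits < 0 or physical_per_logical < 1:
        raise ValueError("invalid qubit counts")
    return logical_qubits * physical_per_logical


if __name__ == "__main__":
    # Hypothetical overheads at the low and high ends of "hundreds to thousands".
    for overhead in (100, 1_000):
        for logical in (400, 2_000):
            total = physical_qubit_budget(logical, overhead)
            print(f"{logical:>5} logical x {overhead:>5} = {total:>10,} physical")
```

At 1,000 physical qubits per logical qubit, 2,000 logical qubits already require two million physical qubits, which is why lowering the per-logical overhead matters as much as adding qubits.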

A scalability imperative for quantum engineering

The quantum computing community recognizes that increasing the number of qubits within a single device is necessary but not sufficient for practical utility. Monolithic scaling runs into physical and fabrication limits, including thermal management, error rates, and integration complexity, so systems must also scale out by linking modules together. Optical interconnects are essential for this because quantum information cannot be transmitted through copper wires at room temperature, and telecom fiber offers the simplest way to link quantum modules.

This dual requirement—scaling up and scaling out—is driving a wave of innovation in quantum photonics, where the interplay between qubit platforms and photonic integration is central to system design.

A modular approach for quantum system engineering

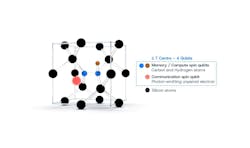

Photonic Inc.’s Entanglement First architecture takes a modular approach to quantum system engineering. At its core is a unique qubit modality, the silicon T center. This hybrid approach combines the strengths of two platforms: the stability and scalability of silicon spin qubits, and the connectivity and room-temperature transmission of telecom photons. It also aligns with existing optoelectronic infrastructure, which enables integration with classical networks and data center environments.

Each T center within Photonic’s architecture is directly connected to the optical network, which provides any-to-any connectivity between physical qubits. Photons act as “quantum messengers” between qubits: they are used to generate entanglement (the quantum phenomenon in which two particles share a linked state regardless of distance) between T centers that are spatially separated. This differs notably from purely photonic qubit approaches, in which the photon is the “quantum message” and the quantum information is encoded directly in the photon itself.
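The kind of correlation that entanglement establishes between two qubits can be illustrated with a textbook two-qubit state-vector calculation. The sketch below, using only the Python standard library, prepares the Bell state (|00⟩ + |11⟩)/√2 with a Hadamard and a CNOT gate. It models only the resulting state, not the photon-mediated process that creates entanglement between T centers, and all function names are hypothetical.

```python
import math

# Amplitudes are ordered |00>, |01>, |10>, |11>, where the left bit is qubit 0.


def hadamard_on_qubit0(state):
    """Apply H to qubit 0: |0x> -> (|0x> + |1x>)/sqrt2, |1x> -> (|0x> - |1x>)/sqrt2."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]


def cnot_qubit0_controls_qubit1(state):
    """Flip qubit 1 when qubit 0 is |1>, i.e. swap the |10> and |11> amplitudes."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]


def outcome_probabilities(state):
    """Born-rule probabilities for measuring both qubits in the computational basis."""
    return {format(i, "02b"): abs(a) ** 2 for i, a in enumerate(state)}


if __name__ == "__main__":
    ground = [1.0, 0.0, 0.0, 0.0]  # both qubits start in |0>
    bell = cnot_qubit0_controls_qubit1(hadamard_on_qubit0(ground))
    probs = outcome_probabilities(bell)
    # Only "00" and "11" have nonzero probability: the outcomes always agree,
    # even though each qubit on its own is a 50/50 coin flip.
    print({k: round(v, 3) for k, v in probs.items()})
```

Nothing in the calculation depends on where the two qubits physically sit, which is the sense in which entanglement links them “regardless of distance.”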

High-density and high-connectivity design

The integration of photonic cavities, waveguides, and superconducting nanowire single-photon detectors (SNSPDs) onto silicon photonic integrated circuits (PICs) is central to the architecture’s performance. T centers are hosted within photonic cavities, which enhance photon emission rates, improve photon collection efficiency, and reduce crosstalk, while waveguides and switches dynamically route entangled photons on-chip. SNSPDs, known for their high efficiency and low dark count rates, ensure accurate detection and heralding of entanglement events. Off-chip routing is achieved by coupling on-chip waveguides to optical fiber, which carries photons through switches between modules.
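Why emission, collection, and detection efficiency matter so much can be seen from a simple rate model. The sketch below assumes a two-photon heralding scheme of the Barrett-Kok type, in which a photon from each emitter must be detected and the per-attempt success probability is η²/2, where η is the end-to-end efficiency of one photon’s path. The scheme, the stage breakdown, the function names, and all numbers are illustrative assumptions, not Photonic’s protocol or measured performance.

```python
from math import prod


def link_efficiency(stage_efficiencies):
    """Probability that one emitted photon survives every stage and is detected."""
    for eta in stage_efficiencies:
        if not 0.0 <= eta <= 1.0:
            raise ValueError("each stage efficiency must lie in [0, 1]")
    return prod(stage_efficiencies)


def two_photon_herald_probability(eta: float) -> float:
    """Per-attempt success probability of a Barrett-Kok-style scheme: eta**2 / 2."""
    return eta ** 2 / 2


def heralded_pair_rate(attempt_rate_hz: float, eta: float) -> float:
    """Mean heralded entangled pairs per second, treating attempts as independent."""
    return attempt_rate_hz * two_photon_herald_probability(eta)


if __name__ == "__main__":
    # Hypothetical stage efficiencies: emission into the cavity mode,
    # cavity-to-waveguide collection, routing and fiber coupling, SNSPD detection.
    stages = [0.9, 0.8, 0.7, 0.9]
    eta = link_efficiency(stages)
    p = two_photon_herald_probability(eta)
    print(f"eta = {eta:.3f}, success per attempt = {p:.3f}, mean attempts = {1 / p:.1f}")
    print(f"at 1 MHz attempt rate: {heralded_pair_rate(1e6, eta):,.0f} pairs/s")
```

Because success scales with η² under this assumption, a loss anywhere along the photon path is paid once for each emitter, which is the motivation for cavities that boost emission and collection and for high-efficiency SNSPDs.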

This high-connectivity design supports both on- and off-chip quantum operations, which allows modules to be flexibly interconnected. The modularity enabled by the architecture simplifies scaling—rather than building ever-larger monolithic quantum processors, multiple modules can be linked across telecom fibers to support both quantum computing and quantum networking applications.

Error correction and fault tolerance: Leveraging connectivity

Quantum systems are inherently sensitive to noise and operational imperfections. Achieving fault tolerance requires fast and efficient error-correcting codes, yet traditional codes require large numbers of physical qubits per logical qubit. Our architecture enables a significantly more efficient class of error correction known as quantum low-density parity-check (QLDPC) codes, which require far fewer physical qubits than traditional approaches for the same level of error correction.
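The overhead gap can be quantified with a code’s [[n, k, d]] parameters: n data qubits protecting k logical qubits at distance d, plus any check qubits used to measure syndromes. The sketch below compares one published QLDPC example, the [[144, 12, 12]] bivariate bicycle (“gross”) code with 144 data and 144 check qubits, against rotated surface-code patches. This code is used only as an illustration and is not necessarily the code Photonic uses; the distance-13 surface-code baseline is an assumption, chosen as the smallest odd distance of at least 12.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CodeFootprint:
    name: str
    logical_qubits: int   # k
    distance: int         # d
    physical_qubits: int  # data qubits plus check (ancilla) qubits

    @property
    def physical_per_logical(self) -> float:
        return self.physical_qubits / self.logical_qubits


def rotated_surface_code(distance: int, logical_qubits: int) -> CodeFootprint:
    """One patch of distance**2 data + distance**2 - 1 ancilla qubits per logical qubit."""
    per_patch = 2 * distance ** 2 - 1
    return CodeFootprint(f"surface code, d={distance}", logical_qubits, distance,
                         logical_qubits * per_patch)


# Published QLDPC example used only for illustration: the [[144, 12, 12]]
# bivariate bicycle ("gross") code with 144 data and 144 check qubits.
GROSS_CODE = CodeFootprint("[[144,12,12]] bivariate bicycle", 12, 12, 144 + 144)

if __name__ == "__main__":
    # Baseline assumption: 12 surface-code patches at d=13, the smallest odd distance >= 12.
    for code in (GROSS_CODE, rotated_surface_code(13, 12)):
        print(f"{code.name}: {code.physical_qubits} physical qubits, "
              f"{code.physical_per_logical:.0f} per logical qubit")
```

Under these assumptions the QLDPC example needs 24 physical qubits per logical qubit versus 337 for the surface code. The comparison covers memory only; performing logical operations adds overhead in both cases.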

This efficiency is possible only because of the architecture’s any-to-any connectivity, which lifts the nearest-neighbor constraint that other codes are built around: QLDPC codes need interactions between qubits that are not physically adjacent. By distributing and consuming entanglement between qubits, the system supports the advanced error correction methods essential for reliable, large-scale quantum computation.
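One way to see why connectivity matters is to count the routing work a long-range check would need on hardware limited to nearest-neighbor gates. The sketch below uses a deliberately simple model, an assumption rather than a description of any specific compiler: qubits sit on a 2D grid, bringing two qubits at Manhattan distance D next to each other costs D − 1 SWAP gates, and on an any-to-any network each interacting pair instead consumes one shared Bell pair. The qubit layout, check supports, and function names are hypothetical.

```python
from typing import Dict, List, Tuple

Coord = Tuple[int, int]
Edge = Tuple[int, int]


def grid_layout(rows: int, cols: int) -> Dict[int, Coord]:
    """Place qubits 0..rows*cols-1 on a grid in row-major order."""
    return {q: (q // cols, q % cols) for q in range(rows * cols)}


def manhattan(a: Coord, b: Coord) -> int:
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def nearest_neighbor_swaps(edges: List[Edge], layout: Dict[int, Coord]) -> int:
    """SWAPs needed to make every interacting pair adjacent, one pair at a time.

    Simplified model: ignores parallel scheduling, congestion, and the SWAPs
    needed to move qubits back afterwards.
    """
    return sum(max(manhattan(layout[a], layout[b]) - 1, 0) for a, b in edges)


def any_to_any_links(edges: List[Edge]) -> int:
    """With a switched photonic network, each interacting pair uses one Bell pair."""
    return len(edges)


if __name__ == "__main__":
    layout = grid_layout(4, 4)
    ancilla = 5  # hypothetical check qubit at grid position (1, 1)
    local_check = [(ancilla, q) for q in (1, 4, 6, 9)]                # all adjacent
    long_range_check = [(ancilla, q) for q in (0, 3, 7, 10, 12, 15)]  # spread out
    for name, check in (("local", local_check), ("long-range", long_range_check)):
        print(f"{name}: {nearest_neighbor_swaps(check, layout)} SWAPs on a grid, "
              f"{any_to_any_links(check)} Bell pairs with any-to-any links")
```

Under this model the local check needs no SWAPs while the hypothetical long-range check needs 10, whereas with any-to-any links both simply consume one Bell pair per interaction.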

Modularity, connectivity, and error correction

As quantum technologies continue to evolve, engineering choices around modularity, connectivity, and error correction will be critical to realizing their full potential.

The journey to commercial-scale quantum systems is defined by the ability to scale, both up and out. By treating photons as the backbone of quantum communication, and by focusing on high connectivity, ease of manufacturing, and data center compatibility, modular architectures like ours are helping to bridge the gap between small-scale systems and full-scale commercial deployment. Photonic’s modular architecture also meets the requirements for quantum repeaters, which enable long-distance communication of quantum information and scalable quantum networks.
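The case for quantum repeaters follows from fiber attenuation: the fraction of photons surviving a fiber of length L with loss α (in dB/km) is 10^(−αL/10), which falls exponentially with distance. The sketch below compares direct transmission with the transmission over each of ten equal segments, roughly what a chain of repeaters has to bridge. The 0.35 dB/km loss and the segment count are assumed, hypothetical values, and the model ignores the memory, swapping, and detection losses a real repeater must also manage.

```python
def fiber_transmission(length_km: float, loss_db_per_km: float) -> float:
    """Fraction of photons that survive a fiber of the given length."""
    if length_km < 0 or loss_db_per_km < 0:
        raise ValueError("length and loss must be non-negative")
    return 10 ** (-loss_db_per_km * length_km / 10)


ASSUMED_LOSS_DB_PER_KM = 0.35  # hypothetical value for standard single-mode fiber
SEGMENTS = 10                  # hypothetical number of repeater segments

if __name__ == "__main__":
    for length_km in (10, 100, 500):
        direct = fiber_transmission(length_km, ASSUMED_LOSS_DB_PER_KM)
        per_segment = fiber_transmission(length_km / SEGMENTS, ASSUMED_LOSS_DB_PER_KM)
        print(f"{length_km:>4} km: direct {direct:.1e}, per segment {per_segment:.1e}")
```

Over 500 km the direct link loses 175 dB under this assumption, so essentially no photons arrive, while each 50 km segment still transmits on the order of 2% of photons. A repeater holds entanglement on each segment in quantum memories, such as spin qubits, and joins the segments by entanglement swapping.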

About the Author

Cicely Rathmell

Cicely Rathmell, MSc, is the director of product marketing for Photonic Inc., and based in Dunedin, FL. She previously served as vice president of marketing for Wasatch Photonics from 2016 through 2025.