Acousto-optic beam-steering chip unleashes LiDAR in tiny footprint

A new type of light detection and ranging (LiDAR) system invented by Mo Li, a professor of electrical and computer engineering and physics and head of the Laboratory of Photonic Systems at the University of Washington (Seattle, WA), and his team is poised to shake up what’s possible within the LiDAR realm.

The team built a laser beam-steering device with no moving parts and put it on a chip, which makes it 1000X smaller than other LiDAR devices currently available. Putting it on a chip also makes the device compact and sturdy, as well as relatively easy and inexpensive to fabricate.

“We started the project with support from the National Science Foundation’s Convergence Accelerator program,” says Li. “Our goal was to address quantum computing scalability by innovating a chip-scale, multibeam optical control system that empowers cold-atom quantum computing with thousands of qubits. Midway, we realized the integrated beam-steering device we developed is ideal for nonmechanical scanning for LiDAR. This realization inspired all of our following work with LiDAR, which took us merely nine months to complete.”

Design work

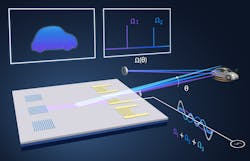

The team’s LiDAR device combines optics and acoustics for beam steering: it guides a scanning laser beam by sending sound-wave pulses at a few gigahertz, far above the audible range, across the surface of the chip.

In terms of quantum physics, the sound waves’ particles (phonons) collide with the laser beam’s particles (photons) on the chip’s surface and combine their energy.

“Manipulating the interplay between sound and light on a tiny chip is like orchestrating a light show,” says Bingzhao Li, a postdoctoral scholar. “By tuning the tone of the sound, we can direct light in any desired direction. We extended the utility of the phenomenon to create a LiDAR—a critical technology to enable autonomous vehicles and one that can potentially impact our daily lives.”

On the transmitter side, “we generate acoustic waves traveling on the surface of a chip to scatter light into space,” Mo Li explains. “Since the scattering angle depends on the acoustic wave frequency, we can steer the scattered beam simply by changing the driving frequency used to excite the acoustic waves.”
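The frequency-to-angle relation Li describes can be sketched with a simple phase-matching model. The snippet below is a rough illustration, not the team's design: it assumes guided light with effective index `N_EFF` is scattered by a counter-propagating surface acoustic wave, which gives sin θ = n_eff − λf/v. The wavelength, effective index, and acoustic velocity are placeholder values chosen for illustration, and the function name is hypothetical.

```python
import math

# Illustrative assumptions, not values reported in the article.
WAVELENGTH_M = 1.55e-6          # free-space optical wavelength
N_EFF = 1.8                     # effective index of the guided optical mode
ACOUSTIC_VELOCITY_M_S = 3500.0  # surface acoustic wave speed on the chip


def steering_angle_deg(drive_freq_hz: float) -> float:
    """Emission angle (from the chip normal) for an acoustic drive frequency.

    Assumed phase matching: k0*sin(theta) = beta - K, where
    beta = N_EFF*k0 (guided light) and K = 2*pi*f/v (acoustic wave).
    Dividing by k0 gives sin(theta) = N_EFF - wavelength*f/v.
    """
    sin_theta = N_EFF - WAVELENGTH_M * drive_freq_hz / ACOUSTIC_VELOCITY_M_S
    if abs(sin_theta) > 1.0:
        raise ValueError("no free-space beam at this frequency in this model")
    return math.degrees(math.asin(sin_theta))


if __name__ == "__main__":
    # Sweep the drive frequency and watch the beam angle change.
    for f_ghz in (2.0, 2.5, 3.0):
        print(f"{f_ghz:.1f} GHz -> {steering_angle_deg(f_ghz * 1e9):.1f} deg")
```

In this toy model, raising the drive frequency shortens the acoustic wavelength and pulls the beam toward the chip normal. A real device would have its own dispersion and a calibrated frequency-angle curve.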

To render the images, the team taps Brillouin scattering. The laser beam’s photons are steered at different angles and labeled with unique frequency changes, which means only one receiver is needed to decode information sent back to the device from the scanning laser beam.

“The frequency of the light scattered by acoustic waves is shifted up exactly by the frequency of the acoustic wave, so the scattered light in different angles has slightly different frequencies within the gigahertz range,” Mo Li says. “When we measure the reflected light for LiDAR imaging, the receiver only needs to determine the reflected light frequency and calculate the angle of its source. In this way, a single coherent receiver can construct the image of the reflecting object. This frequency domain method dramatically reduces the complexity of the receiver in a LiDAR device.”
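The decoding step described here, reading the angle off the measured frequency shift, might look something like the sketch below. It is only an illustration under stated assumptions: the `CALIBRATION` table of frequency shifts and beam angles is entirely hypothetical, the function names are invented, and the range for each return is assumed to come from a separate ranging measurement that is not modeled.

```python
from bisect import bisect_right
import math

# Hypothetical calibration: (optical frequency shift in GHz, beam angle in degrees).
# Real values would come from measuring the chip; these are placeholders.
CALIBRATION = [(1.6, -9.0), (1.7, -3.0), (1.8, 3.0), (1.9, 9.0)]


def angle_from_shift(shift_ghz: float) -> float:
    """Linearly interpolate the beam angle for a measured frequency shift."""
    freqs = [f for f, _ in CALIBRATION]
    if not freqs[0] <= shift_ghz <= freqs[-1]:
        raise ValueError("shift outside calibrated range")
    # Index of the upper calibration point bracketing the measurement.
    i = min(bisect_right(freqs, shift_ghz), len(freqs) - 1)
    (f0, a0), (f1, a1) = CALIBRATION[i - 1], CALIBRATION[i]
    return a0 + (a1 - a0) * (shift_ghz - f0) / (f1 - f0)


def to_points(returns):
    """Convert (shift_ghz, range_m) returns into (x, z) points in the scan plane."""
    points = []
    for shift_ghz, range_m in returns:
        theta = math.radians(angle_from_shift(shift_ghz))
        points.append((range_m * math.sin(theta), range_m * math.cos(theta)))
    return points


if __name__ == "__main__":
    # Three returns seen by one receiver, told apart only by frequency shift.
    for x, z in to_points([(1.65, 5.0), (1.75, 10.0), (1.85, 7.5)]):
        print(f"x = {x:+.2f} m, z = {z:.2f} m")
```

Because each angle carries its own frequency label, a single detector output can be sorted into directions after the fact, which is the point Li makes about receiver simplicity.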

And the team’s receiver “only needs a single imaging pixel, rather than a full camera, to image objects far away,” points out Qixuan Lin, a graduate student who did a lot of the team’s experimental work.

“It’s truly impressive how acousto-optic beam-steering technology perfectly caters to the requirements of solid-state LiDAR,” she adds. “And thanks to the physics of light-sound interaction, we can ‘see’ the world by reconstructing a 3D point cloud in the frequency domain. I was amazed to see that a fingernail-sized chip can easily image objects across the lab.”

No moving parts is a big LiDAR advance

Scanning laser light without moving parts is critical to the robustness and longevity of a LiDAR system and to lowering its cost, so the team’s work to eliminate those parts is a big advance for LiDAR.

“Our acoustic wave generated on the surface of an optical waveguide chip scatters light to different angles by varying the frequency of the acoustic wave,” says Mo Li. “And the transducer we used to excite the acoustic waves, which is also the technology behind wireless signal filtering for mobile phones, is fully integrated on-chip—absolutely no mechanical, moving parts are involved.”

One of the biggest challenges for the team was that they conceived the idea and verified the theory during the COVID-19 lockdown, when the lab was closed. “Frankly, after that, there haven’t been major challenges achieving our proof of concept,” adds Mo Li. “There are bigger challenges moving forward, including increasing the performance, lowering the cost, and full integration of the system.”

As a machine perception technology, LiDAR has the advantage of direct, long-range 3D imaging plus velocity measurement, compared to cameras and radars. LiDAR shows tremendous potential in applications such as autonomous vehicles (industrial/military/consumer), robotics, privacy-sensitive security and surveillance, logistics, and many more.

Interestingly, the U.S. Defense Advanced Research Projects Agency (DARPA) also funded the team to develop a similar device for free-space optical communications, which would let satellites transmit data to one another using laser beams.

“But the adoption and proliferation of LiDAR critically depends on how low its cost can be driven,” says Mo Li. “If LiDAR can become very affordable, many more application scenarios will emerge.”

Startup ahead?

What’s next for the team? They’re currently working to improve the performance of their LiDAR device by more than doubling its scanning distance from 115 meters to 300 meters. This is key because safety regulations for autonomous vehicles require the beam to reach between 200 and 300 meters, the distance a vehicle traveling faster than 60 mph needs to safely turn or stop to avoid crashing.

“Our technology makes a truly solid-state and fully integrated LiDAR system possible within the near future, which is extremely important to make LiDAR affordable, robust, and ultimately ubiquitous,” says Mo Li.

They’re working with the University of Washington’s tech transfer office, CoMotion, to develop a commercialization plan and are considering launching a startup.

“Our LiDAR technology is dramatically different than existing ones and has numerous advantages, so I’m optimistic it’s ready to take off for commercialization,” says Bingzhao Li. “There’s a lot of enthusiasm about our technology, and we’ve been approached by many interested parties. We’re raising resources to support our venture and expect to build a viable prototype within a year that’s ready for road testing, which will be our first milestone.”

FURTHER READING

B. Li, Q. Lin, and M. Li, Nature (2023); https://doi.org/10.1038/s41586-023-06201-6.

See new.nsf.gov/funding/initiatives/convergence-accelerator.

About the Author

Sally Cole Johnson

Editor in Chief

Sally Cole Johnson, Laser Focus World’s editor in chief, is a science and technology journalist who specializes in physics and semiconductors.