Fiddler crabs’ compound eyes inspire amphibious panoramic camera

Fiddler crabs’ stalked, periscope-like compound eyes give them exceptional panoramic vision, allowing them to see objects both on land and underwater. This inspired a team of researchers from Korea and the U.S. to create a microlens with a flat surface and to design an artificial vision system capable of panoramic, omnidirectional imaging in both aquatic and terrestrial environments (see video below).1

Bio-inspired artificial vision systems have already advanced significantly, but until now none was suited to imaging in both aquatic and terrestrial environments. They were also limited to a hemispherical (180-degree) field of view (FOV), which isn’t enough for full panoramic vision or for monitoring changing external environments.

The team therefore set out to develop a 360-degree FOV camera (see Fig. 1) that can image both on land and underwater like a fiddler crab, which owes its special vision to flat optics and a 360-degree observable area.

“A fiddler crab lens has a flat cornea and graded index for amphibious imaging,” explains Young Min Song, a professor in the School of Electrical Engineering and Computer Science at the Gwangju Institute of Science and Technology in Korea. “If you use a conventional lens with curvature for imaging, its focal point changes when you dip it into water. But if you use a lens with a flat surface, you see a clear image regardless of ambient conditions.”
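Song’s point about the focal shift can be checked with the paraxial power of a single spherical refracting surface, P = (n_lens − n_medium)/R, whose focal length measured inside the lens is n_lens/P. The Python sketch below is illustrative only: it assumes a lens index of 1.50, a water index of 1.333, and a hypothetical 20 µm radius of curvature, none of which come from the paper. It compares a curved front surface with a flat one (R → ∞) in air and in water.

```python
import math

# Assumed indices for illustration; not values from the paper.
N_AIR = 1.000
N_WATER = 1.333
N_LENS = 1.50

def surface_power(n_lens: float, n_medium: float, radius_m: float) -> float:
    """Paraxial power (dioptres) of one spherical surface; radius_m may be math.inf."""
    if math.isinf(radius_m):
        return 0.0  # flat interface: the surface itself does no focusing
    return (n_lens - n_medium) / radius_m

def focal_length_inside(n_lens: float, n_medium: float, radius_m: float) -> float:
    """Image-side focal length (m) measured inside the lens: n_lens / P."""
    p = surface_power(n_lens, n_medium, radius_m)
    return math.inf if p == 0.0 else n_lens / p

R = 20e-6  # hypothetical 20 um radius of curvature for the curved case

for name, n_med in (("air", N_AIR), ("water", N_WATER)):
    f_curved = focal_length_inside(N_LENS, n_med, R)
    p_flat = surface_power(N_LENS, n_med, math.inf)
    print(f"{name:5s}: curved-surface focal length = {f_curved * 1e6:6.1f} um, "
          f"flat-surface power = {p_flat} D")
```

With these assumed values, the curved surface’s focal length roughly triples when the lens moves from air into water, while the flat surface contributes no power in either medium, so any focusing has to come from inside the lens.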

Creating a microlens with flat optics

Since no microlens with a flat surface existed for their vision system, the researchers created one by imitating a fiddler crab’s sophisticated lens.

Frédo Durand, a professor of electrical engineering and computer science at the Massachusetts Institute of Technology’s Computer Science and Artificial Intelligence Laboratory (CSAIL), is fascinated by the diversity of optical designs within the natural world. “Amphibian creatures are particularly exciting because they need to operate in two environments with vastly different optical characteristics,” he says. “It’s humbling to learn from nature how to address these challenges.”

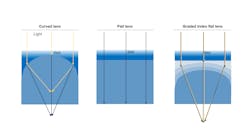

Most lenses do their heavy lifting at the air–glass interface, where light rays bend according to Snell’s law. “But this doesn’t work when you need to deal with both air and water because the indices of refraction are so different and the bending wouldn’t be the same,” Durand says. “In contrast, our optical design bends light inside the volume, not at the surface.”
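Bending light inside the volume can be illustrated with a textbook gradient-index (GRIN) model in which the index falls off parabolically with radius, n(r) = n0(1 − (g·r)²/2). This is an assumed profile chosen for illustration, not the measured profile of the team’s lens, and the values of N0 and G below are hypothetical. In the paraxial limit a ray obeys r'' = −g²·r, so a ray entering a flat face parallel to the axis crosses the axis at the quarter pitch z = π/(2g), independent of its entry height and of the medium outside.

```python
import math

# Hypothetical GRIN parameters (not from the paper); lengths in micrometres.
N0 = 1.55          # on-axis refractive index of the lens material
G = 0.02           # gradient constant (1/um): n(r) = N0 * (1 - (G * r)**2 / 2)
N_AIR, N_WATER = 1.000, 1.333

def entry_slope(n_medium: float, incidence_deg: float) -> float:
    """Ray slope dr/dz just inside a FLAT entrance face, from Snell's law."""
    sin_inside = n_medium * math.sin(math.radians(incidence_deg)) / N0
    return math.tan(math.asin(sin_inside))

def first_axis_crossing(r0: float, slope: float, g: float = G,
                        dz: float = 0.01, z_max: float = 1000.0) -> float:
    """Integrate the paraxial GRIN ray equation r'' = -g**2 * r with a
    velocity-Verlet (leapfrog) step; return the depth z where the ray first
    crosses the optical axis, or math.inf if it never does within z_max."""
    z, r, v = 0.0, r0, slope
    while z < z_max:
        v_half = v - 0.5 * dz * g * g * r        # half-step kick
        r_next = r + dz * v_half                 # drift
        v = v_half - 0.5 * dz * g * g * r_next   # second half-step kick
        if r != 0.0 and r * r_next <= 0.0:
            # Linear interpolation between the two samples bracketing r = 0.
            return z + dz * r / (r - r_next)
        z, r = z + dz, r_next
    return math.inf

for name, n_med in (("air", N_AIR), ("water", N_WATER)):
    s = entry_slope(n_med, 0.0)  # collimated light arriving along the axis
    depths = [first_axis_crossing(r0, s) for r0 in (1.0, 3.0, 5.0)]
    print(name, [round(d, 2) for d in depths])
print("quarter pitch pi/(2g):", round(math.pi / (2 * G), 2))
```

Collimated light arriving along the axis meets the flat face at normal incidence, so Snell’s law leaves it unbent in both air and water and both media give the same focal depth. For oblique rays the flat face still refracts (entry_slope differs between air and water), so this on-axis case is a simplification.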

To build their lens, the team applied multiple coatings of polymeric materials with different refractive indices, optimizing the process conditions by trial and error until they obtained a completely flat surface with a graded index (see Fig. 2). “The coolest part is that our lens has similar characteristics to the natural crab eye lens,” says Song.

Each microlens in the fiddler crab’s eye is about 20 µm in diameter. To match this scale and to confirm that the approach generalizes, the team fabricated microlens arrays with three different diameters: 17 µm, 80 µm, and 400 µm.

“These arrays all have very uniform geometry in terms of diameter, radius of curvature, height, etc.,” says Song. “It means our multiple spin-coating process can be used for various shapes of microlens arrays.”

Crab-eye camera

After creating their microlens, the team built a camera from an array of flat microlenses with a graded refractive index profile. They integrated the array with a flexible, comb-shaped silicon photodiode array and mounted it onto a spherical structure only 2 cm in diameter, keeping the device compact and portable.

“Our initial thought for realizing 360-degree FOV was the use of Kirigami—cutting and folding of membranes enables wrapping any type of 3D structure,” Song says. “For example, we could obtain very tiny 3D ball-shaped structures with a high-density microlens array from the geometry we used.”

But to manufacture a camera, they had to integrate photodiode arrays with the microlens arrays. “It’s difficult to fabricate small and high-density photodiodes at the laboratory level, and many electrical lines for data acquisition prevent the configuration of high-density integration,” says Song. “As an alternative approach, we used a comb-shaped geometry.”
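The geometry of a comb wrapped onto the 2 cm sphere can be sketched as follows. The layout is an assumption for illustration: each tooth is taken to run along a meridian spanning the reported 160-degree vertical FOV, teeth are evenly spaced in azimuth, and every photodiode is assumed to look along the sphere’s outward normal. The tooth and pixel counts are hypothetical, not the device’s actual numbers.

```python
import math

SPHERE_DIAMETER_CM = 2.0   # from the article
ELEV_SPAN_DEG = 160.0      # reported vertical FOV, assumed centred on the equator
N_TEETH = 16               # hypothetical number of comb teeth
PIXELS_PER_TOOTH = 9       # hypothetical photodiodes along each tooth

def pixel_directions(n_teeth: int = N_TEETH, n_pix: int = PIXELS_PER_TOOTH,
                     elev_span_deg: float = ELEV_SPAN_DEG):
    """Unit viewing vectors (x, y, z) for pixels on teeth laid along meridians."""
    half = math.radians(elev_span_deg) / 2
    dirs = []
    for t in range(n_teeth):
        az = 2 * math.pi * t / n_teeth               # tooth's azimuth
        for p in range(n_pix):
            el = -half + 2 * half * p / (n_pix - 1)  # pixel's elevation
            dirs.append((math.cos(el) * math.cos(az),
                         math.cos(el) * math.sin(az),
                         math.sin(el)))
    return dirs

r = SPHERE_DIAMETER_CM / 2
tooth_length_cm = r * math.radians(ELEV_SPAN_DEG)     # arc length of one tooth
equator_pitch_cm = 2 * math.pi * r / N_TEETH          # tooth spacing at the equator
# Latitude circles shrink as cos(elevation), so teeth crowd together toward the poles.
edge_pitch_cm = equator_pitch_cm * math.cos(math.radians(ELEV_SPAN_DEG / 2))

print(f"tooth length ~ {tooth_length_cm:.2f} cm")
print(f"tooth pitch: {equator_pitch_cm:.2f} cm at equator, {edge_pitch_cm:.3f} cm at the FOV edge")
print(len(pixel_directions()), "pixel viewing directions")
```

The shrinking pitch toward the poles hints at why a cut geometry suits a sphere: the gaps between teeth absorb the reduced circumference that a continuous flat sheet could not follow without wrinkling.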

How does data get processed? “Since our camera has photodiode arrays, our electrical metal lines connect photodiodes and the data acquisition board,” says Song. “Because we only have a small number of pixels compared to conventional cameras, we use simple line art patterns (square, circle, etc.).”
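To give a feel for why a small pixel count favors simple line-art targets, the sketch below renders a circle and a square outline on a coarse grid. The 9 × 9 grid is hypothetical and the rendering is purely illustrative; the article does not describe how the team reconstructs images from the photodiode signals.

```python
import math

N = 9  # hypothetical: a 9 x 9 block of photodiodes viewing one region

def circle_pattern(n: int, radius: float, half_width: float = 0.5):
    """Binary n x n image of a circle outline centred in the grid."""
    c = (n - 1) / 2
    return [[1 if abs(math.hypot(x - c, y - c) - radius) <= half_width else 0
             for x in range(n)] for y in range(n)]

def square_pattern(n: int, half_side: int):
    """Binary n x n image of an axis-aligned square outline centred in the grid."""
    c = (n - 1) // 2
    lo, hi = c - half_side, c + half_side
    return [[1 if lo <= x <= hi and lo <= y <= hi and (x in (lo, hi) or y in (lo, hi)) else 0
             for x in range(n)] for y in range(n)]

def show(img):
    """Print a binary image as ASCII art: '#' for lit pixels, '.' for dark ones."""
    for row in img:
        print("".join("#" if v else "." for v in row))

show(circle_pattern(N, 3.0))
print()
show(square_pattern(N, 3))
```

At this resolution both outlines stay recognizable, whereas finer detail such as text or texture would fall between pixels.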

Putting the crab-eye camera to the test

To put the system to the test, the team ran optical simulations and imaging demonstrations in air and water.

When they tested amphibious imaging by immersing the device halfway in water, they were thrilled to find the images clear and free of distortions. Using a laser illumination setup that projected multiple laser spots at different angular positions, they also confirmed that the camera’s panoramic visual field, 360 degrees horizontally and 160 degrees vertically, works in both air and water.
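The reported field of view can be put in perspective by computing its solid angle. Assuming the 160-degree vertical span is centred on the horizon (elevations from −80° to +80°), integrating cos(elevation) over the span gives Ω = Δazimuth × 2·sin(80°). The sketch compares this with a hemispherical (180-degree cone) FOV, which subtends 2π sr.

```python
import math

def fov_solid_angle(h_deg: float, v_deg: float) -> float:
    """Solid angle (sr) of a FOV spanning h_deg of azimuth and v_deg of elevation,
    assuming the vertical span is centred on the horizon."""
    half_v = math.radians(v_deg) / 2
    return math.radians(h_deg) * 2 * math.sin(half_v)

FULL_SPHERE = 4 * math.pi
crab_cam = fov_solid_angle(360, 160)
hemisphere = 2 * math.pi  # a 180-degree cone (hemisphere) subtends 2*pi sr

print(f"360 x 160 FOV: {crab_cam:.2f} sr = {crab_cam / FULL_SPHERE:.1%} of the sphere")
print(f"hemispherical FOV: {hemisphere:.2f} sr = {hemisphere / FULL_SPHERE:.1%} of the sphere")
```

Under this assumption the camera covers about 98.5% of the full sphere, roughly twice the coverage of a hemispherical design; only the polar caps beyond ±80° remain unseen.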

“We experimentally demonstrated our camera can see underwater,” says Song (see Fig. 3). “Optical simulation reveals that if you use the conventional microlens arrays (with curved surfaces), we cannot obtain a clear image inside the water or on land due to the focal point mismatch. On the other hand, our flat-surface microlens enables us to capture clear images on both sides.”

To the best of the team’s knowledge, this is the world’s first demonstration of a vision system that is both amphibious and panoramic. It may open the door to 360-degree omnidirectional cameras for virtual- or augmented-reality applications, small robots that need to operate both in and out of water, or all-weather vision for autonomous vehicles.

Timeline

The team’s crab-eye camera work is still at an early stage, and several challenges remain. One of the biggest is that flexible-electronics approaches such as Kirigami aren’t currently compatible with conventional CMOS technologies.

“But major electronics companies are very interested in curved image sensors, and researchers and engineers could find solutions to plug our new concepts into their camera systems in the near future,” says Song.

Other challenges include light adaptation, resolution, and image processing.

What’s next? The team plans to keep studying natural eyes to understand how animals see the world and to explore other types of vision.

“We’re learning from insects’ eyes to create lenses with a large field of view and high resolution in a compact form factor,” says Durand.

REFERENCE

1. M. Lee et al., Nat Electron, 5, 452–459 (2022); https://doi.org/10.1038/s41928-022-00789-9.

About the Author

Sally Cole Johnson

Editor in Chief

Sally Cole Johnson, Laser Focus World’s editor in chief, is a science and technology journalist who specializes in physics and semiconductors.