Photonics offers a solution to latency issues for AI

Existing technologies and computers aren’t able to keep pace with the sheer volume of data the world is generating. This inspired a group of researchers led by IBM Research Europe, in Switzerland, to look into alternative computing paradigms and completely rethink how computers should work.

To do this, IBM started working on the concept of in-memory computing.

How is in-memory computing different from standard computing? “In-memory computation occurs at locations where the data is stored,” explains Ghazi Sarwat Syed, a staff member at IBM Research Europe. “At no point does data move between memory and a logic unit, which creates bottlenecks. In-memory computing allows us to avoid bottlenecks by computing within the memory.”
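To make the idea concrete, here is a minimal sketch of the common textbook model of electronic in-memory computing, assuming an idealized resistive crossbar (an illustration, not a description of IBM’s chip). The matrix is stored as device conductances; applying voltages to the rows produces column currents equal to a matrix-vector product, so the multiply-accumulate happens where the data sits. The function name `crossbar_mvm` and the units are illustrative assumptions, and real devices add noise, drift, and wire resistance that this ignores.

```python
import numpy as np


def crossbar_mvm(conductances: np.ndarray, voltages: np.ndarray) -> np.ndarray:
    """Idealized analog crossbar (hypothetical helper for illustration).

    conductances[i, j] is the conductance of the device joining row i to
    column j; voltages[i] is the voltage applied to row i.
    Ohm's law: each device passes current G[i, j] * V[i].
    Kirchhoff's current law: those currents add up on each column wire,
    so the column currents equal G^T @ V, computed where G is stored.
    """
    return conductances.T @ voltages


if __name__ == "__main__":
    G = np.array([[1.0, 2.0], [3.0, 4.0]])  # conductances in microsiemens
    V = np.array([0.1, 0.2])                 # row voltages in volts
    print(crossbar_mvm(G, V))                # column currents in microamps
```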

Moving beyond electronics to photonics

The group has researched integrated in-memory computing technology for several years and, in 2021, demonstrated the world’s first fully integrated in-memory computing chip based on nonvolatile phase-change memory devices.

“In parallel with efforts within the electronic domain, with our academic collaborators at the University of Oxford, University of Manchester, and University of Exeter, we initiated an investigation into photonic in-memory computing to assess the potential for more efficient and faster computing,” says Abu Sebastian, distinguished research staff member and manager of the research program for IBM Research Europe.

“Our idea was to do what we were doing within the electronic domain, but with light instead of electricity,” says Syed. Electronics is notorious for latencies, because the physical losses and delays of electricity are bottlenecks to speed, and photons offer a way to overcome them.

Electronics relies on electrons, which are fundamental particles belonging to the class known as fermions. As electrons pass through a copper wire, they interact with each other and with the metal, so they scatter and generate heat, causing latencies, or lag times, for computing.

Photonics, by contrast, relies on photons, which belong to a different class of particles known as bosons. Photons at different frequencies don’t interact with each other, and they interact only marginally with most materials they pass through.

“Photonics doesn’t experience the latencies you get within the electronic domain,” says Syed. “And since the wavelengths aren’t interacting with each other, multiple wavelengths carrying lots of information can flow through a common channel at once.”

IBM researchers and their academic partners are embracing this same idea of sending photons at multiple wavelengths at the same time, “but to compute,” adds Syed. “Each wavelength will perform a set of computations, and with multiple wavelengths in the same time instant, you’re performing multiple computations at the same time. This is wavelength-division multiplexing, which isn’t possible in a straightforward way in electronics.”
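The parallelism Syed describes can be sketched as a batched matrix product. In this hypothetical model, one matrix is held in the hardware and each wavelength carries its own input vector; because the wavelengths don’t interact, every product comes out of the same pass. The function name and array shapes are assumptions for illustration, not the team’s implementation.

```python
import numpy as np


def wdm_matvec(weights: np.ndarray, inputs_per_wavelength: np.ndarray) -> np.ndarray:
    """Apply one stored matrix to many input vectors at once (hypothetical model).

    weights: (n_out, n_in) matrix held by the hardware.
    inputs_per_wavelength: (n_wavelengths, n_in); row k is the vector
        carried on wavelength k.
    Returns (n_wavelengths, n_out); row k equals weights @ inputs[k].
    """
    # One batched product stands in for all wavelengths travelling together.
    return inputs_per_wavelength @ weights.T


if __name__ == "__main__":
    W = np.array([[1.0, 0.0, 2.0], [0.0, 1.0, 1.0]])
    X = np.array([[1.0, 1.0, 1.0],   # vector on wavelength 0
                  [2.0, 0.0, 1.0]])  # vector on wavelength 1
    print(wdm_matvec(W, X))
```

A loop over the rows of `X` would give the same numbers; the point of the analogy is that, with light, no such loop is needed.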

Optical computing for AI

The second piece of the researchers’ work focuses on deep learning applications and involves inputs of light at different wavelengths.

One of the biggest challenges was figuring out how to generate light on-chip. While interest in optical computing got its start back in the 1960s, the field experienced a renewal with the advent of integrated photonics—essentially, the optical analog of the electronic silicon integrated circuit.

Working with silicon photonics involves silicon waveguides. “Silicon can’t generate light,” notes Syed. “It can sense but not efficiently generate it. So the first thing we did was to find a component to generate light on-chip, which is a silicon nitride (SiN) optical frequency comb.”

Another challenge was building a crossbar with photonic elements. Crossbars are common in the electronic domain, where memory devices sit at the junctions of a grid of row and column wires. “The advantage of a crossbar is it’s insanely dense,” Syed says.

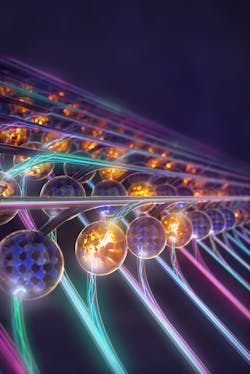

Their design implements the crossbar in the optical domain and combines it with optical frequency combs, which results in very low latencies for computations (see Fig. 1). The work was carried out with academic collaborators at the University of Oxford, University of Muenster, Swiss Federal Institute of Technology, and the University of Exeter.

“The throughput is light,” explains Syed, “and in between is a black box, which in our case is a photonic memory crossbar made of waveguides and all-optical components.”

This crossbar is special because a germanium-antimony-tellurium (GST) phase-change material sits at every junction and stores the value of one element of the optical matrix (see Fig. 2). “The storage is nonvolatile, meaning if I store this element within the phase-change material it will stay there,” notes Syed. “And it doesn’t need a constant supply of energy to maintain a certain element weight, it’s fixed.”

Multiple inputs enter the crossbar, and the result emerges at its outputs. “The beauty of our project is we achieve this by combining two different modules: one component is the photonic memory crossbar, which does the optical matrix part, and the optical combs are an on-chip source of light,” he says. “This is a big advance because a huge challenge for photonics is generating and computing with light on a chip.”
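A toy model of such a crossbar, assuming each phase-change cell acts as an ideal, programmable optical attenuator, might look like the following. The class name, the `levels` parameter, and the simple power-summing readout are hypothetical simplifications; losses, crosstalk, and device drift are ignored.

```python
import numpy as np


class PhotonicCrossbar:
    """Toy photonic crossbar with one phase-change cell per junction (hypothetical).

    Each cell stores one matrix element as an optical transmission in [0, 1].
    Real GST cells have device-specific ranges, losses, and drift.
    """

    def __init__(self, n_out: int, n_in: int, levels: int = 16):
        if levels < 2:
            raise ValueError("need at least two programmable levels")
        self.levels = levels  # hypothetical number of distinguishable states
        # Stored weights persist until reprogrammed, like a nonvolatile cell.
        self.transmission = np.zeros((n_out, n_in))

    def program(self, target: np.ndarray) -> None:
        """Write a matrix, snapping each entry to the nearest available level."""
        if target.shape != self.transmission.shape:
            raise ValueError("target shape must match the crossbar")
        step = 1.0 / (self.levels - 1)
        clipped = np.clip(target, 0.0, 1.0)  # transmission lies between 0 and 1
        self.transmission = np.round(clipped / step) * step

    def forward(self, input_powers: np.ndarray) -> np.ndarray:
        """Each output sums the input powers weighted by junction transmissions."""
        return self.transmission @ input_powers


if __name__ == "__main__":
    xbar = PhotonicCrossbar(2, 2, levels=5)
    xbar.program(np.array([[0.26, 1.2], [0.5, -0.1]]))
    print(xbar.transmission)
    print(xbar.forward(np.array([1.0, 2.0])))
```

One consequence of encoding weights as transmission is that they are nonnegative; this sketch simply clips negative targets to zero.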

A single optical comb provides around 200 individual wavelengths at the same time, which serve as the inputs to the matrix.
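For readers unfamiliar with combs: an optical frequency comb emits a set of evenly spaced spectral lines, conventionally written as

$$
f_n = f_0 + n\,\Delta f, \qquad n = 0, 1, \ldots, N - 1,
$$

where $f_0$ is an offset frequency and $\Delta f$ is the line spacing, which for a microresonator comb such as the SiN device is typically set by the resonator’s free spectral range. With $N \approx 200$ lines, one comb supplies about 200 independent input channels. The article does not give $f_0$ or $\Delta f$ for this device, so no numerical values are assumed here.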

Surprises along the way

The biggest surprise for the researchers was that things worked the way they’d imagined. “We were amazed by the throughput and the speed,” says Sebastian.

The group achieved a throughput of around 2 TOPS, or two trillion multiply-accumulate (MAC) operations per second. This result is striking because it was accomplished at a whopping 14 GHz input/output speed with a crossbar encoding only a tiny matrix, and the number of operations scales with the matrix size. “A laptop or your mobile phone works at 1 GHz, or 1 billion operations per second,” he adds. “We’re talking about trillions of operations per second without the chip ever becoming heated.”
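The headline numbers can be related with a short calculation: dividing 2 trillion MACs per second by the 14 GHz input/output rate gives roughly 143 MACs completed per cycle. The second function is a hypothetical scaling model, assuming every stored matrix element handles every wavelength once per cycle; it is not the team’s published accounting, but it illustrates why throughput grows with matrix size.

```python
def macs_per_cycle(macs_per_second: float, clock_hz: float) -> float:
    """MACs that must complete in each input/output cycle."""
    return macs_per_second / clock_hz


def crossbar_throughput(n_out: int, n_in: int, n_wavelengths: int, clock_hz: float) -> float:
    """Hypothetical model: each of the n_out * n_in stored elements performs
    one MAC per wavelength per cycle."""
    return n_out * n_in * n_wavelengths * clock_hz


if __name__ == "__main__":
    # Figures quoted in the article: about 2e12 MAC/s at a 14 GHz I/O rate.
    print(f"{macs_per_cycle(2e12, 14e9):.1f} MACs per cycle")  # prints 142.9 MACs per cycle
    # In this model, doubling the number of matrix rows doubles throughput.
    print(crossbar_throughput(8, 4, 1, 1e9) / crossbar_throughput(4, 4, 1, 1e9))  # prints 2.0
```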

And they reach this speed with a single crossbar. “Right now, it’s the speed of deep learning in AI,” Syed says. “You can buy application-specific accelerators to speed up some AI tasks, but they use tens of computational cores to achieve the same throughput.”

Autonomous applications ahead

Self-driving vehicles are one obvious application in need of fast speeds and low latencies for image recognition tasks. “If your car is looking at an image possibly in the road ahead of you, you want it to process it as fast as possible,” Sebastian points out. “You don’t want any latencies. And typically the images self-driving cars capture are large in size, so you’ll want a photonic computer to do this.”

Along the same lines, photonic computers could be used for machine translation or natural language processing. “Companies can make predictions about things like what movie you’ll want to watch next,” he adds, “and it can be done much faster with photonic computers than now.”

How long before we see photonics-based computers for AI? “We’re still in the evaluation phase of innovation,” Syed says.

IBM Research isn’t alone in this realm: many startups are exploring photonics-based computers for AI, and some already have off-the-shelf products. One French company, LightOn, integrated an optical computer into an AI supercomputer in December 2021.

What’s next?

IBM Research is also exploring other computational problems in the optical domain, such as finding the best timetables for airplanes or exploring how proteins fold and how their structures change.

“It takes a long time to compute these things today, even on supercomputers. Our hope is to assess such computational tasks using photonics,” says Syed. “Another direction we’re looking into is applications for computation of live data, which involves performing statistical operations for processing big data to see how we can best leverage photonics.”

About the Author

Sally Cole Johnson

Editor in Chief

Sally Cole Johnson, Laser Focus World’s editor in chief, is a science and technology journalist who specializes in physics and semiconductors.