‘DM-PCSEL’ light source permits miniaturization of 3D LiDAR systems

Smart mobility, or the autonomous motion of robots and vehicles, will be vital in the nascent era of Society 5.0, a future smart society envisioned by the government of Japan. Light detection and ranging (LiDAR) systems, sensors that serve as “eyes” by detecting the positions and distances of surrounding objects, will play a big role in achieving smart mobility.

LiDAR systems illuminate objects with laser beams, then calculate the distance to those objects by measuring the beams’ time of flight (ToF), which is the time it takes for light to travel to these objects, be reflected, and return to the system.
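The ToF principle comes down to one line of arithmetic: the light covers the path out and back, so the one-way distance is half the round-trip path, d = c·t/2. The minimal Python sketch below illustrates that relation; the function name is illustrative and is not taken from the researchers' software. It also shows why ToF ranging needs fine timing, since each nanosecond of round-trip time corresponds to about 15 cm of distance.

```python
# Speed of light in vacuum in metres per second (exact by SI definition).
# Using the vacuum value is a simplification; in air light is ~0.03% slower.
SPEED_OF_LIGHT = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """Return the one-way distance to an object from its round-trip time of flight.

    The light travels to the object and back, so the path length c * t
    is twice the distance being measured.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT * round_trip_s / 2.0


if __name__ == "__main__":
    # A 100 ns round trip corresponds to an object roughly 15 m away.
    print(f"{tof_distance_m(100e-9):.3f} m")
    # One nanosecond of timing error shifts the estimate by about 15 cm.
    print(f"{tof_distance_m(1e-9):.3f} m")
```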

“Currently, most LiDAR systems under development are mechanical beam-scanning types,” says Susumu Noda, a professor in the Department of Electronic Science and Engineering and the Photonics and Electronics Science and Engineering Center at Kyoto University. “To scan the beam, these systems rely on moving parts such as motors, which make the systems bulky, expensive, and unreliable. To overcome such issues, it’s very important to develop nonmechanical LiDAR systems.”

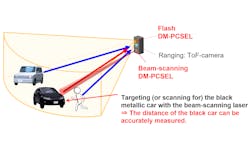

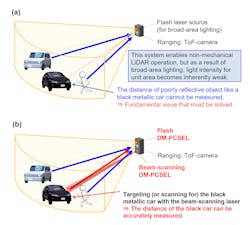

One type of nonmechanical LiDAR system, known as flash LiDAR (see Fig. 1a), simultaneously illuminates and evaluates the distances of all objects within the field of view with a broad and diffuse beam of light, allowing for a fully three-dimensional (3D) image to be obtained in a flash.

But flash LiDAR systems come with a serious limitation: they can’t measure distances to poorly reflective objects like black metallic cars, because very little light is reflected from these objects. And because external optical elements such as lenses and diffractive optical elements are required to form the flash beam, these systems must be very large, another critical limitation.

“These issues holding back individual mechanical beam-scanning LiDAR and flash LiDAR systems inspired our work to search for a solution,” says Noda.

DM-PCSELs

To address these critical LiDAR limitations, a team of researchers led by Noda at his Quantum Optoelectronics Lab developed a new type of light source, which they call a “dually modulated photonic crystal surface-emitting laser (DM-PCSEL),” for use in a compact 3D LiDAR system (see video).

“We can achieve not only flash illumination, but also electronically controlled two-dimensional (2D) beam-scanning illumination—scanning of a more concentrated laser beam—without relying on any of the bulky moving parts or bulky external optical elements,” Noda says.

The team put these DM-PCSELs into a 3D LiDAR system so they could measure the distances of many objects all at once by wide flash illumination, while also selectively illuminating poorly reflective objects with a more concentrated beam of light when they’re detected (see Fig. 1b).

“By using a combination of software and electronic control, we can automatically track the motion of poorly reflective objects by beam-scanning illumination,” says Noda. “Our DM-PCSEL-based 3D LiDAR system allows us to range not only highly reflective objects, but also poorly reflective ones simultaneously.”

In other words, the researchers have established a new concept of nonmechanical 3D LiDAR, which they believe will help enable smart mobility in the era of Society 5.0.

“Our work is being conducted under the Japanese national projects Core Research for Evolutional Science and Technology and the Cross-ministerial Strategic Innovation Promotion Program for photonics and quantum technology for Society 5.0,” says Noda.

Unifying flash and beam-scanning LiDAR systems

The team developed two types of DM-PCSELs: one emits a broad and diffuse beam of light for flash illumination, while the other emits more concentrated beams of light for selective beam-scanning illumination.

“We loaded both of our light sources into a single 3D LiDAR system so we could measure the distances of many objects all at once by wide flash illumination, while also selectively measuring the distance of poorly reflective objects detected in a camera image (see Fig. 1b),” explains Noda.

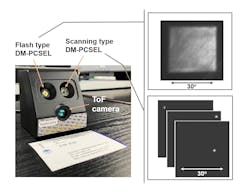

A photo of their 3D LiDAR system, which contains both the flash and beam-scanning DM-PCSELs (see Fig. 2), shows how the DM-PCSEL flash source illuminates a wide 30° × 30° field of view, while the DM-PCSEL beam-scanning source illuminates small spots using beams selected from a set of one hundred narrow laser beams (the spread angle of a single beam is <1°). ToF distance measurements are captured by a ToF camera installed near the bottom of the system. “These lasers, the ToF camera, and all associated components required to operate the system are assembled in a compact manner, resulting in a total system footprint smaller than a business card,” says Noda.
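To get a feel for why a narrow beam helps with dark objects, one can compare how thinly the same optical power is spread by the 30° × 30° flash versus a single scanning beam with a spread of about 1°. The Python sketch below does that comparison. It is a back-of-the-envelope estimate under assumptions that are not from the article: both sources emit equal optical power, the flash is perfectly uniform, each beam is treated as a square cone, and power is split evenly over however many beams are active. The function names are hypothetical.

```python
import math


def square_cone_solid_angle_sr(full_angle_deg: float) -> float:
    """Solid angle (steradians) of a pyramid with a square cross-section.

    Exact formula for a rectangular pyramid with full apex angles a and b:
    Omega = 4 * asin(sin(a / 2) * sin(b / 2)); here a == b.
    """
    half = math.radians(full_angle_deg) / 2.0
    return 4.0 * math.asin(math.sin(half) ** 2)


def irradiance_gain(flash_fov_deg: float, beam_deg: float, active_beams: int = 1) -> float:
    """How many times more irradiance a beam spot delivers than the flash, at equal range.

    ASSUMPTIONS (illustrative only): equal total optical power for both
    sources, uniform flash illumination, square beam cross-sections, and
    power divided evenly among the active beams.
    """
    if active_beams < 1:
        raise ValueError("at least one beam must be active")
    flash_sr = square_cone_solid_angle_sr(flash_fov_deg)
    beam_sr = square_cone_solid_angle_sr(beam_deg)
    # Irradiance ~ power / (solid angle * range^2); the range cancels in the ratio.
    return flash_sr / (beam_sr * active_beams)


if __name__ == "__main__":
    # 30 x 30 degree flash vs. one 1 degree beam. The real beams spread by
    # less than 1 degree, so under these assumptions this is a lower bound.
    print(f"{irradiance_gain(30.0, 1.0):.0f}x")  # about 880x
    print(f"{irradiance_gain(30.0, 1.0, active_beams=4):.0f}x")
```

The light a diffuse target sends back scales with both its reflectivity and the irradiance falling on it, so a concentration gain of several hundred times is the kind of margin that can compensate for a poorly reflective surface. The actual power budget of the DM-PCSEL system is not given in the article.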

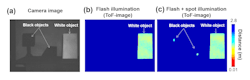

To get an idea of how their 3D LiDAR system works, imagine the following scenarios: “First, suppose a highly reflective white object and two poorly reflective black objects exist simultaneously within the camera’s field of view, as shown in the black and white image of Fig. 3a,” says Noda. “If we attempt to measure the distances of these objects using only the flash laser source, we can only measure the distance of the white object, as shown in the distance image of Fig. 3b; black objects can’t be measured due to the small amount of light reflected from these objects. But we can recognize the presence of these black objects by simply looking at the black and white image in Fig. 3a.”

So the team turns on the beam-scanning laser source to illuminate these objects with beams of light more concentrated than the flash illumination. The concentrated beams reflect enough light from these objects to measure their distances (see Fig. 3c and video below).
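The selection step in this scenario (spot the black objects in the black and white image, notice that the flash distance image has no valid values there, then aim concentrated beams at them) can be sketched in a few lines of Python. This is not the team's software. The 10 × 10 arrangement of the one hundred beams, the alignment of that grid with the camera pixels, the brightness threshold standing in for real object recognition, and the function name `beams_for_dark_unranged` are all hypothetical.

```python
from typing import List, Optional, Set, Tuple

# HYPOTHETICAL beam layout: the article only says there are one hundred
# selectable beams; here they are assumed to tile the camera view as 10 x 10.
BEAM_GRID = 10


def beams_for_dark_unranged(
    gray: List[List[float]],
    depth: List[List[Optional[float]]],
    dark_threshold: float = 0.2,
) -> Set[Tuple[int, int]]:
    """Pick beam cells covering pixels that look dark in the black and white
    image but got no valid distance from flash illumination (depth is None).

    A simple brightness threshold is a crude stand-in for object recognition.
    """
    rows, cols = len(gray), len(gray[0])
    cells: Set[Tuple[int, int]] = set()
    for r in range(rows):
        for c in range(cols):
            if gray[r][c] < dark_threshold and depth[r][c] is None:
                # Integer scaling maps the pixel onto the assumed beam grid.
                cells.add((r * BEAM_GRID // rows, c * BEAM_GRID // cols))
    return cells


if __name__ == "__main__":
    # Toy 20 x 20 scene: mid-grey background ranged at 10 m by the flash,
    # plus one black object (rows 4-7, cols 12-15) that returned no distance.
    gray = [[0.5] * 20 for _ in range(20)]
    depth: List[List[Optional[float]]] = [[10.0] * 20 for _ in range(20)]
    for r in range(4, 8):
        for c in range(12, 16):
            gray[r][c] = 0.05
            depth[r][c] = None
    print(sorted(beams_for_dark_unranged(gray, depth)))
    # → [(2, 6), (2, 7), (3, 6), (3, 7)]
```

Re-running a selection like this on every camera frame and switching on the chosen beams is one simple way to follow poorly reflective objects as they move; the article does not describe how the team's own recognition and control software works internally.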

“In a dynamic, real-world scenario, it’s quite likely these black objects have places to be and will be in motion,” says Noda. “To keep track of their motion in real time, we use a software program and electronic control we developed to allow us to automatically recognize the poorly reflective objects and measure their distances by selective illumination.” (See video below.)

The team says the coolest aspect of their work is that it’s unusual for a light source to attain the high-level functionalities of flash illumination and 2D beam-scanning illumination without the use of external optical elements or mechanical moving parts. These capabilities enabled them to unify the individual merits of both flash and beam-scanning LiDAR systems.

“While this wasn’t the focus of our present work, our DM-PCSELs are also capable of emitting various other beam patterns, such as multiple dots for face recognition and even detailed images of logos and artwork,” Noda adds.

As for applications, their LiDAR system can be applied to “smart mobility, such as the autonomous movement of robots, vehicles, and farming and construction machinery for Society 5.0,” says Noda. “With this system, robots, vehicles, and machinery will be able to reliably and safely navigate a dynamically changing environment without losing sight of poorly reflective objects, which is essential for safe autonomous driving.”

Next up, the team plans to use their DM-PCSEL-based LiDAR systems for practical applications such as the autonomous movement of robots and vehicles. All of the necessary components and control units are already compactly built into the system, and the necessary software is available.

“In the future, we’re considering replacing the ToF camera of our LiDAR system with a more optically sensitive single-photon avalanche photodiode array to measure objects across even longer distances,” says Noda. “We expect our DM-PCSEL technology to lead to the realization of on-chip, all-solid-state 3D LiDAR.”

FURTHER READING

M. De Zoysa et al., Optica, 10, 264–268 (2023); https://doi.org/10.1364/optica.472327.

About the Author

Sally Cole Johnson

Editor in Chief

Sally Cole Johnson, Laser Focus World’s editor in chief, is a science and technology journalist who specializes in physics and semiconductors.