Image-guided Surgery: Photoacoustics provides critical tissue differentiation at depth

Surgery is inherently risky for a number of reasons. Among these is collateral injury to blood vessels and nerves obscured by other tissues—which at its worst can result in paralysis or death.

To avoid such outcomes, surgeons have tried various intraoperative and preoperative imaging modalities, including endoscopy, computed tomography (CT), magnetic resonance imaging (MRI), and ultrasound. Each of these image-guided surgery methods has limitations and risks, however, which is why Muyinatu A. Lediju Bell of Johns Hopkins University has investigated photoacoustics for this purpose. Bell is an assistant professor of electrical and computer engineering, with a joint appointment in the biomedical engineering department, and director of the Photoacoustic and Ultrasound Systems Engineering (PULSE) lab.

Why photoacoustics?

Because light scatters strongly in tissue, purely optical approaches can image to a depth of only about 1 mm. By contrast, photoacoustic (PA) imaging (also known as optoacoustic imaging) uses light to produce acoustic responses from several centimeters deep in the surgical site.
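Because the signal that reaches the probe is sound, and sound scatters far less in tissue than light does, depth in PA imaging is read from acoustic travel time. The sketch below assumes the conventional soft-tissue speed of sound of 1540 m/s and a source directly in line with the receiver; the function names are hypothetical, and this is an illustration of the principle, not the PULSE lab's reconstruction code.

```python
# Conventional speed of sound assumed for soft tissue in ultrasound
# imaging; actual tissues vary by a few percent around this value.
SOUND_SPEED_M_PER_S = 1540.0


def source_depth_mm(arrival_time_us: float,
                    sound_speed_m_per_s: float = SOUND_SPEED_M_PER_S) -> float:
    """Distance from a photoacoustic source to the receiver, in millimetres.

    Light reaches the absorber effectively instantly, so the measured delay
    is a one-way acoustic trip (absorber to transducer).
    """
    if arrival_time_us < 0:
        raise ValueError("arrival time must be non-negative")
    # microseconds -> seconds, then metres -> millimetres
    return sound_speed_m_per_s * arrival_time_us * 1e-6 * 1e3


def pulse_echo_depth_mm(echo_time_us: float,
                        sound_speed_m_per_s: float = SOUND_SPEED_M_PER_S) -> float:
    """Same calculation for conventional pulse-echo ultrasound, which must
    halve a round-trip time."""
    return source_depth_mm(echo_time_us, sound_speed_m_per_s) / 2


if __name__ == "__main__":
    # A PA signal arriving 20 microseconds after the laser pulse
    print(f"{source_depth_mm(20.0):.1f} mm")  # 30.8 mm
```

Under these assumptions, a 20 µs delay places the absorber about 3 cm from the probe, the "several centimeters" regime that purely optical methods cannot reach.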

PA leverages the fact that tissue absorbing pulsed laser light undergoes rapid thermal expansion, which generates sound waves. Because blood vessels and nerves absorb more light at specific wavelengths than surrounding tissues do, PA provides high-contrast images of vessel and nerve locations. It can even distinguish vessels from nerves, because the two types of structures have different peak optical absorptions. By choosing the laser wavelength, it is therefore possible to visualize one or the other in a single image, and the technique may someday allow the two structures to be discerned in vivo.
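The wavelength-selection idea can be sketched with the standard relation p0 = Γ·μa·F: the initial pressure equals the Grüneisen parameter times the optical absorption coefficient times the local light fluence. If Γ and F are assumed equal at a target and its surroundings, contrast reduces to the ratio of absorption coefficients. The function names are hypothetical, and the spectra in the usage block are made-up placeholders, not measured absorption data.

```python
from typing import Dict


def initial_pressure_kpa(grueneisen: float, mu_a_per_cm: float,
                         fluence_mj_per_cm2: float) -> float:
    """Initial photoacoustic pressure p0 = Gamma * mu_a * F.

    With mu_a in 1/cm and fluence F in mJ/cm^2, the product is in mJ/cm^3,
    which equals kPa. Gamma (the Grueneisen parameter) is dimensionless.
    """
    return grueneisen * mu_a_per_cm * fluence_mj_per_cm2


def best_contrast_wavelength(target_mu_a: Dict[int, float],
                             background_mu_a: Dict[int, float]) -> int:
    """Wavelength (nm) that maximizes the target/background absorption ratio.

    Assumes Gamma and local fluence are the same for target and background,
    so the ratio of initial pressures equals the ratio of mu_a values.
    """
    shared = target_mu_a.keys() & background_mu_a.keys()
    if not shared:
        raise ValueError("spectra share no wavelengths")
    return max(shared, key=lambda wl: target_mu_a[wl] / background_mu_a[wl])


if __name__ == "__main__":
    # HYPOTHETICAL placeholder spectra (1/cm), not measured data.
    vessel = {700: 4.0, 1200: 1.0}
    nerve = {700: 0.5, 1200: 2.0}
    print(best_contrast_wavelength(vessel, nerve))  # 700: vessels stand out
    print(best_contrast_wavelength(nerve, vessel))  # 1200: nerves stand out
```

Swapping which structure is the "target" flips the chosen wavelength, which mirrors the article's point that one image can emphasize either vessels or nerves.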

The latest publication of the PULSE lab, done in conjunction with researchers at the University of Virginia and Smith College, reports the application of PA to real-time surgical guidance [1]. Because robotic surgeries are no more immune to inadequate views of subsurface anatomy than standard operations are, the team has investigated PA not only for manual surgeries but also for operations performed with Intuitive Surgical’s da Vinci Surgical System (and they say the approach would work with any robotic surgery setup).

PA works best for surgical guidance when the optical delivery system and the acoustic receiver are separated. The group’s prior neurosurgery research showed that surrounding surgical tools with optical fibers allowed PA to image blood vessels through bone, as long as a standard transcranial ultrasound probe was available to receive the signal. In this type of setup, a dedicated navigation system could potentially control the alignment of the optical and acoustic components. In the robotic setup, the researchers demonstrated full integration, displaying real-time PA images together with the endoscopic video in the da Vinci master console.

Operations and outcomes

Success with low-frequency ultrasound was a primary goal for the researchers because conventional PA tomography and microscopy systems use high-frequency transducers, which resolve fine detail but lose signal rapidly with depth. In fact, their work appears to be the first to demonstrate the feasibility of vessel visualization and automated target-separation measurement for surgical guidance when the light source is separated from an external, low-frequency, large-aperture transducer able to image deep into tissue. Although they chose an external probe with a bandwidth of 3 to 8 MHz, they say that higher frequencies will also work when the probe (linear, curvilinear, phased array, or other) is placed at the surgical site.
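Why low frequencies reach deeper can be estimated with a back-of-envelope calculation. This sketch assumes that acoustic attenuation in soft tissue grows linearly with frequency at a rule-of-thumb 0.5 dB per cm per MHz (real values vary by tissue type), and it ignores the optical losses that also limit PA depth. The names are hypothetical.

```python
# Assumed attenuation slope for soft tissue; real values vary by tissue
# type, and 0.5 dB/(cm*MHz) is a common textbook rule of thumb.
ALPHA_DB_PER_CM_PER_MHZ = 0.5


def one_way_attenuation_db(freq_mhz: float, depth_cm: float,
                           alpha: float = ALPHA_DB_PER_CM_PER_MHZ) -> float:
    """Acoustic loss for a PA signal traveling depth_cm to the probe.

    PA signals make a single trip from absorber to transducer, so no
    factor of two is applied. Attenuation is modeled as linear in frequency.
    """
    return alpha * freq_mhz * depth_cm


def surviving_amplitude(atten_db: float) -> float:
    """Fraction of the pressure amplitude left after atten_db of loss."""
    return 10 ** (-atten_db / 20)


if __name__ == "__main__":
    depth = 3.0  # cm
    # 3 and 8 MHz bracket the external probe's band; 20 MHz is a
    # high-frequency case for comparison.
    for f in (3.0, 8.0, 20.0):
        loss = one_way_attenuation_db(f, depth)
        print(f"{f:>5.1f} MHz: {loss:5.1f} dB, "
              f"amplitude x{surviving_amplitude(loss):.3f}")
```

At 3 cm, this model gives 4.5 dB of loss at 3 MHz, 12 dB at 8 MHz, and 30 dB at 20 MHz, consistent with using a low-frequency external probe for deep targets and reserving higher frequencies for probes placed close to the surgical site.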

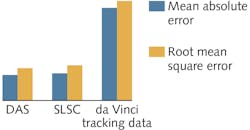

The researchers’ results indicate that PA achieves submillimeter mean absolute error (MAE) and root-mean-square error (RMSE) when measuring the separation between important "landmarks" in the surgical site, potentially allowing maximal surgical workspace with minimal damage. Further, PA-based measurements proved more reliable than those derived from tracking data obtained from the da Vinci kinematics (see figure). Although the PA-derived measurements require postprocessing and those obtained from tracking data do not, the gain in accuracy is compelling. That said, the researchers’ second set of experiments showed that postprocessing and separation calculations may not always be necessary.
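For reference, the two error metrics can be computed as shown below. The numbers in the usage block are hypothetical, chosen only to exercise the functions; they are not results from the study.

```python
import math
from typing import List, Sequence


def _errors(measured: Sequence[float], reference: Sequence[float]) -> List[float]:
    """Signed errors, measured minus reference."""
    if len(measured) != len(reference) or not measured:
        raise ValueError("need two non-empty sequences of equal length")
    return [m - r for m, r in zip(measured, reference)]


def mean_absolute_error(measured: Sequence[float],
                        reference: Sequence[float]) -> float:
    errs = _errors(measured, reference)
    return sum(abs(e) for e in errs) / len(errs)


def root_mean_square_error(measured: Sequence[float],
                           reference: Sequence[float]) -> float:
    errs = _errors(measured, reference)
    return math.sqrt(sum(e * e for e in errs) / len(errs))


if __name__ == "__main__":
    # HYPOTHETICAL separations in mm, not data from the study.
    truth = [10.0, 10.0, 10.0, 10.0]
    pa = [10.25, 9.75, 10.5, 10.0]
    print(mean_absolute_error(pa, truth))     # 0.25
    print(root_mean_square_error(pa, truth))  # about 0.306
```

Because squaring weights large errors more heavily, RMSE is always at least as large as MAE; reporting both shows whether a few outliers dominate the error.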

The researchers’ results are promising for real-time guidance in both robotic and nonrobotic surgery. Integrating PA into surgical workflows has several clinical implications, including reassurance for the surgeon about the extent of the available workspace and the ability to indicate where (and where not) to operate. Integrated into a robotic system, PA can provide quantitative information about the center of the safety zone, which helps the surgeon understand vessel locations in relation to a robot-held surgical tool. In a nonrobotic setup, an image-based coordinate system can similarly provide orientation, provided the tool tip is visible in the PA image.
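A minimal sketch of the geometry behind a safety-zone readout, under stated assumptions: the centers of two vessels have already been extracted from the PA image (the postprocessing step, not shown), and the zone's center is defined as their midpoint. The function names and coordinate convention are hypothetical rather than the PULSE lab's code, and a robotic setup would additionally need to map these image coordinates into the robot's frame.

```python
import math
from typing import Tuple

# (lateral_mm, axial_mm) in the PA image; a hypothetical convention.
Point = Tuple[float, float]


def vessel_separation_mm(a: Point, b: Point) -> float:
    """Straight-line distance between two vessel centers."""
    return math.dist(a, b)


def safety_zone_center(a: Point, b: Point) -> Point:
    """Midpoint between two vessel centers (an assumed definition)."""
    return ((a[0] + b[0]) / 2, (a[1] + b[1]) / 2)


def tool_offset_mm(tool_tip: Point, a: Point, b: Point) -> float:
    """Distance from a visible tool tip to the safety-zone center."""
    return math.dist(tool_tip, safety_zone_center(a, b))


if __name__ == "__main__":
    # HYPOTHETICAL vessel centers and tool tip, already located in a PA image.
    left, right = (-4.0, 30.0), (4.0, 30.0)
    tip = (1.0, 30.0)
    print(vessel_separation_mm(left, right))  # 8.0
    print(safety_zone_center(left, right))    # (0.0, 30.0)
    print(tool_offset_mm(tip, left, right))   # 1.0
```

Here the tool tip sits 1 mm from the center of an 8 mm-wide zone, the kind of quantitative cue the article describes for judging vessel positions relative to the tool.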

REFERENCE

1. N. Gandhi et al., J. Biomed. Opt., 22, 12, 121606 (2017).

About the Author

Barbara Gefvert

Editor-in-Chief, BioOptics World (2008-2020)

Barbara G. Gefvert has been a science and technology editor and writer since 1987 and has served as editor-in-chief of multiple publications, including Sensors magazine for nearly a decade.