Ophthalmology/Functional Imaging: OCT + AO for functional imaging

Retinal imaging has come a long way since Allvar Gullstrand’s 1911 Nobel Prize for, among other things, the fundus camera. Optical coherence tomography (OCT) and adaptive optics (AO) have made enormous contributions, and a recent development incorporates both methods, along with a scanning laser ophthalmoscope (SLO), into a single system.

Transition to functional imaging

A team led by Ravi Jonnal from the University of California, Davis (UC Davis) integrated an AO-enhanced SLO with high-scan-rate OCT to expand the range of applications for retinal imaging (see Fig. 1).2,3 SLO and OCT systems have complementary capabilities: SLO images the retinal surface at high resolution, while OCT images structures throughout the retinal volume, but at lower resolution. Mehdi Azimipour, a researcher at the UC Davis Eye Center (Sacramento, CA), says one of the first motivations for combining the systems was to gain confidence that the AO was indeed optimally correcting the eye’s aberrations. OCT image reconstruction is computationally intensive, so OCT image quality can’t be evaluated quickly; SLO, by contrast, provides real-time imaging.
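Why OCT lags behind SLO is easier to see with a minimal sketch of Fourier-domain OCT processing, in which each depth profile (A-scan) is recovered from a spectral interferogram rather than read out directly. The Python below assumes, for simplicity, a spectrum already sampled uniformly in wavenumber, a flat source spectrum, and no dispersion; the function names and the synthetic reflector positions are hypothetical. Real systems add resampling, dispersion compensation, and registration, and repeat the work for every A-scan in a volume.

```python
import numpy as np


def reconstruct_ascan(spectrum: np.ndarray, background: np.ndarray) -> np.ndarray:
    """Recover a depth profile (A-scan) from one spectral interferogram.

    Assumes `spectrum` is already sampled uniformly in wavenumber k.
    """
    fringes = spectrum - background               # remove the non-interferometric background
    fringes = fringes * np.hanning(fringes.size)  # window to reduce sidelobes in depth
    depth = np.abs(np.fft.fft(fringes))           # fringe frequency maps to reflector depth
    return depth[: fringes.size // 2]             # drop the mirror-image (negative) depths


def simulate_spectrum(n_k: int, depth_bins: list[int], reflectivities: list[float]) -> np.ndarray:
    """Hypothetical synthetic interferogram: each reflector adds one cosine fringe."""
    k = np.arange(n_k)
    spectrum = np.ones(n_k)                       # flat source spectrum (simplification)
    for z, r in zip(depth_bins, reflectivities):
        spectrum += r * np.cos(2 * np.pi * z * k / n_k)
    return spectrum


if __name__ == "__main__":
    n_k = 2048
    spectrum = simulate_spectrum(n_k, depth_bins=[200, 350], reflectivities=[0.5, 0.2])
    ascan = reconstruct_ascan(spectrum, background=np.ones(n_k))
    print(int(np.argmax(ascan)))  # depth bin of the strongest reflector: 200
```

Even this stripped-down version needs a full FFT per A-scan, which is why OCT image quality is usually judged after processing rather than live.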

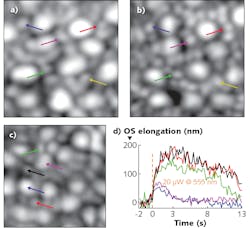

Combining the two modalities also extends the possibility of functional, rather than strictly anatomical, imaging of retinal structures. That’s because it now appears that light detection involves an “elongation” of the outer segment (OS) of individual photoreceptors. This was suspected some time ago, when researchers using flood-illumination imaging observed individual photoreceptors “blinking” in response to visible light. The blinking was attributed to interference between light reflected from the fixed and the elongating portions of each photoreceptor. It has since been verified with direct OCT measurements of OS elongation in cones.
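The interference explanation can be made quantitative with a two-reflector model, a simplification assumed here for illustration: light returning from the base and the tip of the OS differs in round-trip phase by Δφ = 4πnL/λ, so the detected intensity oscillates as the OS length L changes, and a measured phase change can be converted back into an elongation. The refractive index n = 1.4 and the 800 nm imaging wavelength in the example are illustrative assumptions, not values from the studies described.

```python
import math


def two_reflector_intensity(i1: float, i2: float, os_length_nm: float,
                            wavelength_nm: float, n: float) -> float:
    """Detected intensity from two reflectors separated by os_length_nm (two-beam interference)."""
    phase = 4 * math.pi * n * os_length_nm / wavelength_nm  # round-trip phase difference
    return i1 + i2 + 2 * math.sqrt(i1 * i2) * math.cos(phase)


def elongation_from_phase(delta_phase_rad: float, wavelength_nm: float, n: float) -> float:
    """OS length change (nm) implied by a measured change in the round-trip phase difference."""
    return wavelength_nm * delta_phase_rad / (4 * math.pi * n)


if __name__ == "__main__":
    # Illustrative assumptions: 800 nm imaging light, OS refractive index 1.4.
    one_cycle_nm = elongation_from_phase(2 * math.pi, wavelength_nm=800.0, n=1.4)
    print(f"{one_cycle_nm:.1f} nm of elongation per full bright-dark-bright cycle")
```

Under these assumptions, one full bright-dark-bright cycle corresponds to about 286 nm of OS elongation.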

Rods, however, are smaller, and OCT alone cannot always distinguish a single rod from a small clump, or even rods from cones. Registering the SLO and OCT images makes it possible to identify cones reliably, and therefore to separate cone signals from rod signals. Azimipour and his colleagues have already applied the new system to quantify differences between rod and cone responses to light; as expected, cones responded only at illumination levels above those needed to trigger a rod response.
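A common first step in registering two images of the same patch of retina is estimating their relative translation. The sketch below uses FFT-based phase correlation, a generic technique and not necessarily the one the UC Davis team used. It assumes the SLO image and an en face OCT projection have already been resampled to the same pixel grid and differ only by an integer shift; real registration generally also has to handle eye motion within a frame, rotation, and contrast differences between modalities. The function name is hypothetical.

```python
import numpy as np


def phase_correlation_shift(reference: np.ndarray, moving: np.ndarray) -> tuple[int, int]:
    """Estimate the integer (row, col) shift that maps `reference` onto `moving`."""
    f_ref = np.fft.fft2(reference)
    f_mov = np.fft.fft2(moving)
    cross_power = f_mov * np.conj(f_ref)
    cross_power /= np.abs(cross_power) + 1e-12         # keep only the phase difference
    correlation = np.abs(np.fft.ifft2(cross_power))    # sharp peak at the relative shift
    peak = np.unravel_index(np.argmax(correlation), correlation.shape)
    # Peaks past the midpoint wrap around and represent negative shifts.
    return tuple(int(p) if p <= s // 2 else int(p) - s for p, s in zip(peak, correlation.shape))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    slo_like = rng.random((64, 64))                    # stand-in for an SLO image
    oct_like = np.roll(slo_like, (5, -3), axis=(0, 1)) # same content, shifted
    print(phase_correlation_shift(slo_like, oct_like))  # (5, -3)
```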

Integrating SLO and OCT therefore enables functional imaging of both rod and cone photoreceptors. “Now we can attempt to link differences in functional response with distinct disease states,” says Azimipour. For example, he believes this opens the door to an early-stage functional marker for age-related macular degeneration, a disease in which early treatment can improve clinical outcomes.

Two for 2D

As the UC Davis work illustrates, AO SLO systems can serve as clinical imagers, but they can also be used for research into human vision. For example, such a system can project images directly onto a single photoreceptor or a group of photoreceptors. If the system delivers light stimuli at different wavelengths and everything else is identical, changes in visual response can be attributed to properties of human color vision.

But in the human eye, not everything else is identical. Because of chromatic aberration, the eye’s focus at one wavelength, say 630 nm, differs from its focus at another, say 470 nm. In itself, that’s not an insurmountable problem, because the system can refocus as the color shifts, but it does prevent optimal focusing at two wavelengths simultaneously. The traditional compensation method offsets the focus of one color relative to the other by an amount derived from the population-average chromatic aberration. Because that offset is an average, the method cannot provide precise, individualized control over the projected illumination pattern.
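For a sense of scale, the population-average approach can be sketched with a reduced-eye chromatic model. The formula D(λ) = p − q/(λ − c) and the constants below are those commonly quoted for the “chromatic eye” model of Thibos and colleagues, with λ in nm and D the refractive error in diopters relative to about 589 nm; they are brought in as an assumption, not taken from the work described. Any individual eye can depart from this average, which is the limitation of the traditional method.

```python
# Assumed constants for the Thibos et al. "chromatic eye" model (wavelength in nm).
# Verify against the original publication before relying on them.
P_DIOPTERS = 1.68524
Q_DIOPTER_NM = 633.46
C_NM = 214.102


def chromatic_refraction(wavelength_nm: float) -> float:
    """Population-average refractive error (D) at a wavelength, relative to ~589 nm."""
    return P_DIOPTERS - Q_DIOPTER_NM / (wavelength_nm - C_NM)


def population_lca(long_nm: float, short_nm: float) -> float:
    """Average longitudinal chromatic aberration (D) between two wavelengths."""
    return chromatic_refraction(long_nm) - chromatic_refraction(short_nm)


if __name__ == "__main__":
    print(f"{population_lca(630.0, 470.0):.2f} D")  # 0.95 D for the 630/470 nm pair
```

In the traditional approach, a fixed offset of roughly this size would be applied to every subject, regardless of that subject’s own LCA.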

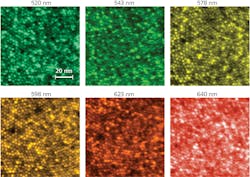

That’s the problem researchers at the University of Washington (Seattle, WA) sought to solve with their recently announced SLO modification. Their innovation incorporates a longitudinal chromatic aberration (LCA) compensator into an SLO to capture dual-wavelength 2D retinal images simultaneously.

The team, led by Professor Ramkumar Sabesan, introduced a Badal compensator into the optical train.1 A traditional Badal optometer consists of a set of mirrors between two adjustable converging lenses. Adjusting the mirror and lens positions lets the operator introduce a defined relative defocus between the input and output apertures.
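The appeal of the Badal principle is that the added defocus is linear in the mechanical adjustment. A minimal thin-lens sketch, assuming collimated light entering a two-lens relay, the eye’s pupil at the back focal plane of the second lens (focal length f2), and a folded path in which moving the mirror pair by d adds 2d between the lenses: Newton’s lens equation then gives a vergence change of exactly 2d/f2² at the pupil. The geometry, function names, and the 250 mm focal length in the example are illustrative assumptions, not the published design.

```python
def badal_vergence_change(mirror_shift_m: float, f2_m: float) -> float:
    """Vergence change (D) at the back focal plane of the second lens.

    Newton's equation x * x' = f**2 makes this exactly linear in the extra path.
    Assumes thin lenses and a fold where a mirror shift d adds 2*d of path.
    Positive values mean the beam converges (extra path between the lenses).
    """
    extra_path_m = 2.0 * mirror_shift_m
    return extra_path_m / f2_m**2


def mirror_shift_for_vergence(vergence_d: float, f2_m: float) -> float:
    """Mirror travel (m) needed for a desired vergence change (D)."""
    return vergence_d * f2_m**2 / 2.0


if __name__ == "__main__":
    f2 = 0.250  # hypothetical focal length of the second relay lens, in meters
    travel_mm = 1000 * mirror_shift_for_vergence(1.0, f2)
    print(f"{travel_mm:.2f} mm of mirror travel per diopter")  # 31.25 mm
```

The linearity is what lets a stage position be read directly as a defocus in diopters.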

By inserting long-pass filters, Sabesan’s team converted a Badal optometer into a dichroic Badal compensator with two partially independent optical paths, each adjusted for a distinct wavelength. The long-pass filters transmit the 900 nm wavefront-sensor illumination along the longer path, while reflecting visible light along a shorter one.

In one set of tests, for example, the system successively delivered different illumination wavelengths to the retina while an operator adjusted the optical path length to optimize image quality at each wavelength for each individual. This “objective” longitudinal chromatic aberration can be computed directly from the difference between the positions of best focus at each wavelength (see Fig. 2). In parallel, the subjects were asked to judge the focus subjectively at the different wavelengths. The objective and subjective LCA agreed remarkably well.
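Computing the objective LCA is then a subtraction once each best-focus setting is expressed in diopters. The sketch below assumes a folded Badal geometry in which a mirror shift d adds 2d of path, giving 2d/f2² diopters at the pupil, and compares objective and subjective values with a Bland-Altman bias and 95% limits of agreement. That agreement statistic, the function names, and the example readings are hypothetical illustrations, not the authors’ analysis or data.

```python
import statistics


def setting_to_diopters(mirror_shift_m: float, f2_m: float) -> float:
    """Assumed folded-Badal conversion: a mirror shift d adds 2*d of path -> 2*d / f2**2 D."""
    return 2.0 * mirror_shift_m / f2_m**2


def objective_lca(best_focus_a_m: float, best_focus_b_m: float, f2_m: float) -> float:
    """LCA (D) between two wavelengths from their best-focus mirror settings.

    The sign depends on which wavelength is passed first and on the stage's direction convention.
    """
    return setting_to_diopters(best_focus_a_m, f2_m) - setting_to_diopters(best_focus_b_m, f2_m)


def agreement(objective: list[float], subjective: list[float]) -> tuple[float, float, float]:
    """Bland-Altman bias and 95% limits of agreement between paired LCA measurements."""
    diffs = [o - s for o, s in zip(objective, subjective)]
    bias = statistics.mean(diffs)
    half_width = 1.96 * statistics.stdev(diffs)  # needs at least two subjects
    return bias, bias - half_width, bias + half_width


if __name__ == "__main__":
    # Hypothetical mirror readings (m) with f2 = 0.25 m, for illustration only.
    print(f"{objective_lca(0.030, 0.0, 0.25):.2f} D")  # 0.96 D
```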

“The ability to focus two wavelengths simultaneously on the retina,” says Sabesan, “allows us to study the neural substrates underlying human color vision by circumventing the chromatic imperfection native to each eye.”

REFERENCES

1. X. Jiang et al., Optica, 6, 8, 981–990 (2019).

2. M. Azimipour et al., Opt. Lett., 44, 17, 4219–4222 (2019).

3. M. Azimipour et al., bioRxiv (2019); https://doi.org/10.1101/760306.

About the Author

Richard Gaughan

Contributing Writer, BioOptics World

Richard Gaughan is the owner of Mountain Optical Systems and a contributing writer for BioOptics World.