PHOTONIC FRONTIERS: GESTURE RECOGNITION: Lasers bring gesture recognition to the home

New laser and optical systems are making gesture recognition a consumer technology. In late 2010, Microsoft (Redmond, WA) brought gesture recognition to video games when it introduced the Kinect motion controller for its Xbox gaming system. Gesture-recognition controls for televisions and set-top boxes using different laser technology debuted in January 2011 at the Consumer Electronics Show in Las Vegas.

Efforts to develop gesture-recognition techniques for human communication with computers go back to the 1990s, but applications have been slowed by the need for expensive optical and electronic equipment and sophisticated computer algorithms. Now improvements in sensors, optical systems, and computer technology have brought gesture recognition to the mass market. Priced around $150, Kinect was a hot seller during the holiday season. It also sparked enthusiasts to launch the OpenKinect project (http://openkinect.org) to develop open-source software to adapt Kinect. Meanwhile, other companies are introducing gesture recognition for control of home televisions and entertainment centers.

Basics and background

Gesture recognition is a complex task, requiring optics to record motion and pattern-recognition software to identify parts of the body, trace their motion, and separate the meaningful gestures from the background environment. Some development has focused on recognizing specific gestures such as sign language. Motion capture has become important in animating computer-generated characters in video games and movies. Typically many cameras track dozens of reflective markers worn by actors, then computers translate the data into motion of stick-figure skeletons and build three-dimensional characters around them. The results are impressive, but the process is very expensive.

Costs are coming down, however. At the MIT Computer Science and Artificial Intelligence Lab (Cambridge, MA), Robert Wang used a $60 webcam to record gestures made by people wearing thin fabric gloves, then translated the gestures for 3D manipulation of computer models.

For consumer gesture recognition, developers are turning to cameras that provide depth information. Stereo cameras viewing a scene from two different angles can reconstruct three-dimensional data, but they require bright light and high contrast between objects in the near and far field. Developers instead are focusing on time-of-flight cameras and structured light, which record 3D profiles of people and objects with light from low-power near-infrared laser diodes, says Sinclair Vass, senior director of marketing and operations at JDSU (Milpitas, CA). Filters block ambient light, so sensors pick up only the laser line, giving a cleaner signal. The two approaches differ considerably in detail.

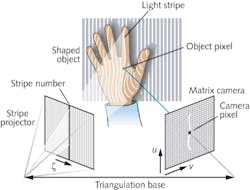

Structured light

One approach, called “structured light,” illuminates a scene with a pattern such as an array of lines, and views the results at an angle (see Fig. 1). If the pattern is projected onto a flat wall, the camera sees straight lines, but if it illuminates a more complex scene, such as a person standing in front of the wall, it sees a more complex profile. Digital processing can analyze profiles across the field to map the topography of the person’s face and body.
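The geometry behind that mapping can be sketched with simple triangulation. The Python below is a minimal illustration under a pinhole-camera assumption, not PrimeSense's or any other vendor's algorithm. It converts the sideways shift of one projected line, measured against where the line falls on the flat back wall, into a depth for each image row. The function name, the sign convention (a positive shift means the surface is closer than the wall), and all of the numbers are hypothetical.

```python
def depth_profile(observed_cols, wall_col, wall_depth_m, focal_px, baseline_m):
    """Estimate depth (m) per image row from the shift of one projected line.

    Assumes an idealized pinhole camera and a projector offset sideways by
    baseline_m. For a surface at depth z, the line shifts by
        shift = focal_px * baseline_m * (1/z - 1/wall_depth_m)
    pixels relative to its position on the reference wall.
    """
    scale = focal_px * baseline_m
    depths = []
    for col in observed_cols:
        shift = col - wall_col  # hypothetical convention: positive = closer
        inverse_depth = 1.0 / wall_depth_m + shift / scale
        if inverse_depth <= 0:
            raise ValueError("shift is not consistent with a finite depth")
        depths.append(1.0 / inverse_depth)
    return depths


# Illustrative values only: wall 3 m away, focal length 600 px, baseline 8 cm.
# Rows 0 and 3 see the bare wall; rows 1 and 2 cross a person nearer the camera.
profile = depth_profile([320, 364, 364, 320], wall_col=320,
                        wall_depth_m=3.0, focal_px=600, baseline_m=0.08)
print([round(z, 2) for z in profile])
```

Rows where the line stays at its wall position come back at the wall distance, while rows where it is displaced come back closer. Repeating this across many lines and rows is what builds up the topographic map.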

Traditionally, structured light projects rectangular grids or arrays of lines, but powerful lasers are needed to provide a high signal-to-noise ratio. To get good performance in an eye-safe system, PrimeSense (Tel Aviv, Israel) uses a proprietary technique it calls light coding in the optics it supplies for Microsoft’s Kinect system. “Our code is very rich in information, with almost zero repetition across the scene. It’s a code, not a grid, and this is what gives us reliability and replicability,” says Adi Berenson, vice president of business development and marketing at PrimeSense.

The illumination laser emits in the 800- to 900-nm range, invisible to the eye but in a range where silicon CMOS detectors have high quantum efficiency. A separate camera records color images. The optics record 640 × 480 pixel images and measure depths from 0.8 to 3.5 m. Berenson says the resolution is about 16 times finer than competing time-of-flight systems and the hardware costs only a few tens of dollars. Microsoft software running on the Xbox interprets the raw gesture data and gets the game to respond, typically by having a character replicate the user’s movements on the screen (see Fig. 2). Users report typical response times of several seconds.

PrimeSense has made open-source drivers available through the Open Natural Interaction group (http://openni.org) and has big plans for the system. “Our future is natural interaction everywhere. TVs and set-top boxes are a straightforward next step,” says Berenson. Later he envisions applications in mobile devices, domestic robots, automobiles, and industry.

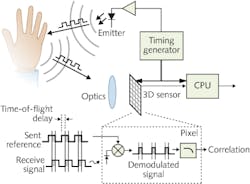

Time-of-flight cameras

A time-of-flight camera works somewhat like a laser radar, with an IR laser firing short pulses and the camera timing the return time from pixels across its field of view. Several companies have developed commercial versions, including 3DV Systems (Tel Aviv, Israel) and Canesta (Sunnyvale, CA), both recently acquired by Microsoft; PMD Technologies (Siegen, Germany); and Optrima, the hardware division of Softkinetic-Optrima (Brussels, Belgium).

“We can work with any 3D camera; as long as we get a clean, good-quality depth map, we’re happy,” says Tombroff. Raw images are filtered before software classifies or segments the scene. The software also identifies and removes nonhuman objects, such as plants, chairs, and tables, so it can focus on the main person or persons making gestures. From the positions of the people in the field of view and the depth information, the software calculates locations of arms, legs, shoulders, and hands, then applies that information through a series of frames to recognize gestures with hands and feet, as well as motion such as dancing. The system also reconstructs a stick-figure skeleton, which can be used to animate an avatar on the screen.
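To make the two steps in this section concrete, ranging by pulse timing and then isolating people by depth, here is a minimal Python sketch. It assumes direct round-trip timing at each pixel, as described above, and uses a simple depth window as a stand-in for the far more sophisticated scene segmentation that real software performs. The function names and sample values are hypothetical, and nothing here represents the actual code of any company named in this article.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def tof_distance(round_trip_s):
    """Distance (m) to a surface from a pulse's round-trip time (s)."""
    # The pulse travels out and back, so the one-way distance is half.
    return C * round_trip_s / 2.0


def depth_map(round_trip_times):
    """Convert a 2D grid of per-pixel round-trip times into depths (m)."""
    return [[tof_distance(t) for t in row] for row in round_trip_times]


def foreground_mask(depths, near_m, far_m):
    """Mark pixels whose depth falls inside [near_m, far_m].

    A crude stand-in for segmentation: anything outside the window
    (for example, a back wall) is treated as background.
    """
    return [[near_m <= d <= far_m for d in row] for row in depths]


# Illustrative scene: a wall 4 m away with a person about 2 m from the camera.
scene_m = [[4.0, 2.0, 4.0],
           [4.0, 2.1, 4.0]]
times = [[2.0 * d / C for d in row] for row in scene_m]

depths = depth_map(times)
mask = foreground_mask(depths, near_m=1.0, far_m=3.0)
print(mask)
```

A target 2 m away returns the pulse in only about 13 ns, which gives a sense of the timing precision such cameras need.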

The big news from Softkinetic-Optrima is a gesture-recognition system announced last month at the Consumer Electronics Show. It’s designed to work with an Intel-based set-top box and controls displayed on a television screen. “We can do complete navigation, go to menus, click on things, increase volume, close a movie, and navigate through screens,” says Tombroff. It even includes an “air keyboard” so users can enter detailed instructions. “The principle is easy. The trick is to make it smooth, intuitive, and robust,” he says, although users will need a few minutes to accustom themselves to the controls.

Softkinetic-Optrima’s time-of-flight camera also can be used in other applications. One is computer games developed by the Dutch company Silverfit (Alphen aan den Rijn, the Netherlands), which help elderly people move their limbs to restore the function of damaged muscles, with the players’ gestures providing the feedback. Other possibilities include military simulation systems and a golf-training system that uses a time-of-flight camera to monitor how players swing their shoulders.

Outlook

“Both coded light and time-of-flight are viable, and most likely will coexist,” says Vass. Gaming is “a good strong commercial market, but pretty small relative to other places where this could be used, such as the living room.”

Kinect made a huge splash as the first gesture-recognition system in the consumer market. Video game consoles are a good starting point because their powerful processing chips can handle the tough computing problems of gesture recognition. Microsoft also tailored dancing and movement games that play to Kinect’s strengths; promotional videos show preteens having a blast. However, gamers say that present response times of several seconds are much too slow for many popular first-person shooter games, which require split-second response times.

Television controls are a potentially huge market that seems a logical next step for gesture recognition. Few channel surfers demand split-second response, and viewers have grown accustomed to on-screen menus. A well-designed gesture response system could be fun and easy to use, and it would spare viewers the nuisance of searching for mislaid remotes. It likely will start as an option on high-end sets, or an accessory for gadget hounds and people who lose remotes.

“The market is really launching now,” says Tombroff. Vass expects gesture controls to go much further, eventually interfacing with handheld devices, laptops, and miniprojectors to control video conferences.

About the Author

Jeff Hecht

Contributing Editor

Jeff Hecht is a regular contributing editor to Laser Focus World and has been covering the laser industry for 35 years. A prolific book author, Jeff's published works include “Understanding Fiber Optics,” “Understanding Lasers,” “The Laser Guidebook,” and “Beam Weapons: The Next Arms Race.” He also has written books on the histories of lasers and fiber optics, including “City of Light: The Story of Fiber Optics,” and “Beam: The Race to Make the Laser.” Find out more at jeffhecht.com.