Advances in Optics: Observed defects reliably determine laser-damage characteristics

SAM RICHMAN and TREY TURNER

Laser-damage testing is widely used by manufacturers of lasers and laser systems to ensure the reliability of their products. However, there are limitations in traditional laser-damage testing protocols, as well as widespread misunderstanding of how to interpret damage test results. As a result, users of original-equipment-manufacturer (OEM) laser optics often overspecify their optics, which can drive up cost unnecessarily and yet still not prevent damage from occurring under actual use conditions.

This article reviews a recent study that attempts to establish a correlation between observed coating defects and laser damage. Results indicate that it might be possible to develop an alternate methodology for determining damage characteristics, based on observed defects, which is both more reliable and less time-consuming than traditional laser-damage testing protocols.

The exact mechanism by which laser damage occurs in optics and thin films depends very much upon the particulars of the operating regime of the laser and the materials involved. For example, there are differences in the damage mechanism between deep-ultraviolet and visible lasers, and between ultrafast and continuous-wave (CW) lasers.

Because Q-switched, solid-state lasers are widely used in industrial and military applications, damage relating to these has a significant economic impact. Therefore, to render this discussion manageable and practical, it will be confined exclusively to Q-switched, solid-state lasers with nanosecond-regime pulse widths, pulse energies of under 1 J, and output in the near-infrared and visible.

Damage-testing background

Most commercial laser-damage testing today is conducted in accordance with the provisions of ISO 21254. This standard specifies that a component be tested at a minimum of 10 test sites at any given single fluence. It also specifies that a sufficiently large range of fluences be used to determine the highest fluence where there is 0% probability of damage and the lowest at which there is 100% damage probability.

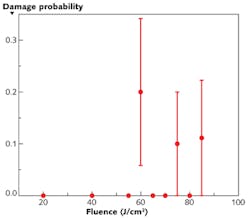

However, it does not dictate the number of fluences that must be used to make this determination. Typically, commercial damage testing uses around five to 10 fluence levels and then plots damage probability as a function of fluence. A best-fit line is drawn through these points, and the value at which it crosses the x (fluence) axis is considered to be the "damage threshold"—that is, the fluence at which the damage probability drops to zero.
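The extrapolation described above can be sketched in a few lines of Python. This is only an illustration: the function names and the example numbers are hypothetical, an ordinary least-squares line is assumed as the "best fit," and none of the values come from the standard or from the data discussed in this article.

```python
def fit_line(x, y):
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def extrapolated_threshold(fluences, sites_damaged, sites_tested):
    """Fluence at which the fitted damage probability reaches zero."""
    probabilities = [d / t for d, t in zip(sites_damaged, sites_tested)]
    slope, intercept = fit_line(fluences, probabilities)
    if slope <= 0:
        raise ValueError("damage probability does not rise with fluence")
    return -intercept / slope


# Hypothetical, idealized data: 20 test sites per fluence level (J/cm^2).
example_fluences = [20.0, 30.0, 40.0, 50.0]
example_damaged = [0, 5, 10, 15]
example_tested = [20, 20, 20, 20]
example_threshold = extrapolated_threshold(
    example_fluences, example_damaged, example_tested
)
```

With these idealized points the fitted line crosses zero probability at 20 J/cm². Real data scatter far more, so the intercept shifts depending on which fluence levels are included in the fit.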

While that sounds like a fairly straightforward procedure, a plot (see Fig. 1), which shows the actual laser-damage test results for a batch of optics produced at REO, illustrates the problem commonly encountered with it. Specifically, determining a "best fit" line to this set of points is quite an arbitrary exercise, and there are several higher fluence levels at which no damage is observed, even though some damage was recorded at lower fluences. Furthermore, the testing did not extend to sufficiently high fluences to firmly establish the point at which there is a 100% probability of damage, a situation that occurs commonly in commercial damage testing.

The underlying reason for the ambiguity of this data is undersampling. Specifically, it is our contention that laser damage in the operating regime previously defined is primarily caused by coating defects, most typically contaminants. And, on a typical "laser quality" optic, these defects are quite sparsely distributed over the component surface. Given this sparsity, traditional testing methodologies, which make no assumption about what causes laser damage, simply don't sample enough of the surface to give viable statistics.
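The undersampling argument can be made concrete with a simple model. The sketch below assumes, purely for illustration, that defects are scattered at random (Poisson statistics) with a uniform areal density and that a test site can damage only if at least one defect lies inside the irradiated area. The defect density and beam diameter are hypothetical values, not measurements from this study.

```python
import math


def site_hit_probability(defect_density_per_cm2, effective_area_cm2):
    """Probability that one test site contains at least one defect."""
    return 1.0 - math.exp(-defect_density_per_cm2 * effective_area_cm2)


def all_sites_miss_probability(defect_density_per_cm2, effective_area_cm2, n_sites):
    """Probability that none of n independent test sites contains a defect."""
    return math.exp(-defect_density_per_cm2 * effective_area_cm2 * n_sites)


# Hypothetical numbers: 0.5-mm effective beam diameter, 50 defects per cm^2.
beam_diameter_cm = 0.05
effective_area = math.pi * (beam_diameter_cm / 2.0) ** 2
density = 50.0

p_hit = site_hit_probability(density, effective_area)
p_miss_10 = all_sites_miss_probability(density, effective_area, 10)
```

Under these assumed numbers, a 10-site test at a given fluence has a little over a one-in-three chance of never irradiating a defect at all, regardless of how high the fluence is.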

In addition, coating defects are typically much smaller (in the micron to tens-of-microns size range) than the incident test laser beam. Thus, even when a defect is directly irradiated (unless it's at the beam center), the Gaussian falloff in beam intensity means it is most likely to experience a relatively low fluence, further reducing the quality of the data.
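To illustrate the falloff, the sketch below assumes an ideal Gaussian beam profile, in which the fluence at radial offset r is the peak fluence multiplied by exp(-2r²/w²), with w the 1/e² beam radius. The beam radius, peak fluence, and defect offset are hypothetical values chosen only for the example.

```python
import math


def local_fluence(peak_fluence, offset, beam_radius):
    """Fluence at a radial offset from the center of an ideal Gaussian beam.

    offset and beam_radius (the 1/e^2 radius) must share the same length unit.
    """
    return peak_fluence * math.exp(-2.0 * offset ** 2 / beam_radius ** 2)


def radius_for_fraction(fraction_of_peak, beam_radius):
    """Radial offset at which the fluence falls to a given fraction of the peak."""
    return beam_radius * math.sqrt(-math.log(fraction_of_peak) / 2.0)


# Hypothetical example: 30 J/cm^2 peak, 250-um beam radius, defect 150 um off center.
fluence_at_defect = local_fluence(30.0, 150.0, 250.0)
half_peak_radius = radius_for_fraction(0.5, 250.0)
```

In this example, a defect sitting 150 μm from the beam center sees less than half of the peak fluence, even though it lies well inside the nominal beam radius.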

User misconception

Another significant problem with damage test results is that they are frequently misunderstood by users. In particular, a common misconception is that optics will never be damaged if only exposed to fluences below the damage threshold established by testing. Again, the problem is that this number has usually been arrived at by testing a relatively small number of sites and might change substantially if testing covered a greater fraction of the optics' surface.

On the other hand, if damage is due to defects that are sparsely distributed over the optic, then it's quite possible that the component could withstand significantly higher fluences if these "trouble spots" are avoided. Of course, this is highly dependent upon the exact use conditions of the optic.

Finally, conventional testing doesn't quantify or qualify the nature of a damage event; for example, it does not distinguish between a damage feature that is 2 μm in size and one that is 2 mm. Again, depending upon the application, a small damage event might be tolerable, especially if it doesn't grow on repeated exposures to the same fluence.

Designing an experiment

We designed an experiment to test the theory that laser damage is primarily defect-related. Then, using this assumption, we attempted to construct a damage-testing protocol based on defect counting that provides a more accurate prediction of actual damage characteristics than traditional methods.

The experiment was executed in two phases. In the first phase, a set of substrates (Set #1) was coated in a chamber that was purposefully past its usual preventive-maintenance limits to produce a comparatively large number of defects. Coating defects on these optics were identified using an automated surface-quality-inspection microscope system, and then damage testing was performed. Specifically, each component was irradiated at a fluence of 30 J/cm² at 100 different test sites arranged in a uniform array. Each optic was then imaged again by the microscope system, and damage events were identified.

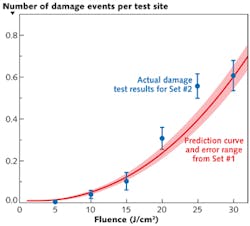

Next, a second set (Set #2) of optics was prepared in exactly the same way as the first. These were damage-tested using traditional protocols and the results compared with the previously developed prediction to verify its accuracy.

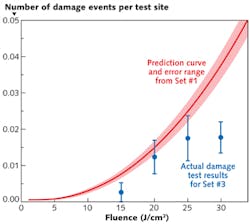

In the second phase of the study, a third set of substrates (Set #3) was coated, but this time in a different chamber designed to produce low-defect, high-quality laser optics. These optics were then subjected to the same surface-quality inspection process as the other sets. The results of the surface-quality inspection, together with the model developed in the first phase of the study, were used to predict the damage characteristics of these optics. A traditional damage-test protocol was then used on these optics and the results compared with the prediction.

Results

First, it's worthwhile to note that of the 555 damage events recorded for Set #1, 527 were clearly associated with a pre-existing defect. For the other 28 damage events, subsequent examination of the "before" microscope images virtually always showed that some feature was there; however, these fell below the predefined threshold set in the image-processing software used for automated defect identification. Thus, we can confidently conclude that damage is associated with defects for solid-state lasers operating in the range previously defined.
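One simple way to associate damage events with pre-existing defects is to compare their coordinates in the "before" and "after" microscope images. The sketch below is a hypothetical illustration of that idea, not the procedure used in this study: the function name, the coordinate format, and the matching radius are all assumptions.

```python
import math


def match_damage_to_defects(damage_sites, defect_sites, match_radius_um):
    """Split damage events into those near a known defect and those that are not.

    Sites are (x, y) positions in microns. A damage event is 'matched' if any
    pre-existing defect lies within match_radius_um of it.
    """
    matched, unmatched = [], []
    for dx, dy in damage_sites:
        nearest = min(
            (math.hypot(dx - px, dy - py) for px, py in defect_sites),
            default=math.inf,
        )
        if nearest <= match_radius_um:
            matched.append((dx, dy))
        else:
            unmatched.append((dx, dy))
    return matched, unmatched


# Hypothetical coordinates, in microns.
example_matched, example_unmatched = match_damage_to_defects(
    damage_sites=[(0.0, 0.0), (100.0, 100.0)],
    defect_sites=[(3.0, 4.0), (500.0, 500.0)],
    match_radius_um=10.0,
)
```

For the counts reported above, 527 of the 555 damage events were associated with a detected defect, or about 95%.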

Figure 2 shows the actual damage results for Set #2 along with the prediction derived from the testing results of Set #1. There is good agreement between the model created from Set #1, which was analyzed using the local fluence at individual defects, and the results for Set #2, which only counted how many damage events occurred at each test site with no consideration of local fluence at all (in other words, the traditional approach).

For Set #3 (see Fig. 3), the level of agreement between measured and predicted values is fairly good and indicates that this method shows promise. There are several possible reasons why it isn't even better. First, the sparseness of defects on these higher-quality optics still leads to substantial statistical uncertainties in the measured number of damage events. Second, there are some differences in the defect size distributions between Sets #1 and #3 that could be affecting the prediction. Finally, there may be something fundamentally different in the nature of the defects between these two sets because they were produced in two coating chambers at different points in their maintenance schedules. Possible differences of chemical composition and morphology that could affect their damage characteristics have not been addressed here.

These results also underscore the motivation for studying this low-fluence regime of damage. A standard damage test on one of these optics using only about 10 sites per fluence over a range of, say, 10–80 J/cm², would probably have indicated a damage threshold greater than 50 J/cm². And, in fact, it is the results of just such a test on Set #3 that were plotted in Fig. 1. In reality, Fig. 3 shows that there is a nonzero (albeit small) chance of damage down to at least 15 J/cm².
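A short calculation shows why 10 sites per fluence can easily miss a small damage probability. The sketch below treats each test site as an independent trial with the same damage probability, which is a simplifying assumption, and the 2% probability used in the example is hypothetical rather than a value read from Fig. 3.

```python
def probability_no_damage(damage_probability, n_sites):
    """Chance that n independent sites all survive at a given fluence."""
    return (1.0 - damage_probability) ** n_sites


def upper_bound_after_zero_damage(n_sites, confidence=0.95):
    """Largest per-site damage probability still consistent with seeing
    zero damage in n sites, at the stated one-sided confidence level."""
    return 1.0 - (1.0 - confidence) ** (1.0 / n_sites)


# Hypothetical 2% per-site damage probability, 10 sites tested.
clean_result_chance = probability_no_damage(0.02, 10)

# What a clean result can actually rule out, for 10 and for 100 sites.
bound_10_sites = upper_bound_after_zero_damage(10)
bound_100_sites = upper_bound_after_zero_damage(100)
```

Under these assumptions, a 2% damage probability goes undetected in roughly four out of five 10-site tests, and a clean 10-site result cannot exclude a true damage probability as high as about 26%. Testing 100 sites tightens that bound to about 3%.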

Conclusion

The ultimate goal of this work is to establish the basis for a method that will enable reliable prediction of laser damage of thin films based solely on automated, non-destructive surface inspection. It is hoped that this approach will be more accurate, faster, and less costly than current laser-damage testing protocols, which rely on a destructive methodology. The results obtained here certainly establish a strong association of damage events with pre-existing defects. Furthermore, the reasonably good agreement between the prediction model derived in this study and the actual laser-damage test results is encouraging and certainly merits further development.

Sam Richman is an R&D engineer and Trey Turner is chief technology officer at REO, Boulder, CO; www.reoinc.com.