LIDAR: LIDAR nears ubiquity as miniature systems proliferate

Light detection and ranging (LIDAR, or more commonly now, lidar) has proven to be an invaluable technology to assess the physical distance parameters and three-dimensional (3D) forms of objects for geographical and environmental assessments, infrastructure monitoring of pipeline and power grid facilities, architectural and structural mapping, oceanographic and archaeological discovery, wind-turbine optimization, and countless more applications. And while legacy laboratory- or benchtop-scale lidar systems are easily carried aboard aircraft and vehicles, miniature and even chip-sized systems are now enabling lidar to accompany unmanned aerial vehicles (UAVs) or drones, small terrestrial/industrial robots, and probably soon an individual's smartphone, ushering lidar into ubiquity.

Joining the ranks of miniature spectrometers, covert cameras, as well as burgeoning smartphone-compatible devices, lidar systems continue to shrink in size—and cost—through optomechanical assembly, light source, and integration advances.

The mechanics of miniaturization

Conventional benchtop-sized lidar systems priced in the tens of thousands of dollars offer high-resolution information at long ranges, but their size and cost limit widespread adoption for both commercial and military use. Recognizing this, the Defense Advanced Research Projects Agency (DARPA; Arlington, VA) developed its Short-range Wide-field-of-view Extremely agile Electronically steered Photonic EmitteR (SWEEPER) technology, which integrates nonmechanical optical scanning technology on a microchip.1

Free of large, slow, temperature- and impact-sensitive gimbaled mounts, lenses, and servos, SWEEPER technology can sweep a laser back and forth more than 100,000 times per second—or 10,000 times faster than current state-of-the-art mechanical systems. Furthermore, it steers the laser beam across a 51° arc, which DARPA says is the widest field of view ever achieved by a chip-scale optical scanning system.

Essentially, SWEEPER uses optical phased-array technology wherein engineered surfaces control the direction of selected electromagnetic signals by varying the phase across many small antennas. Because optical wavelengths are thousands of times smaller than those used in radar, the antenna array elements must be placed within only a few microns of each other and held to tolerances tight enough that manufacturing or environmental perturbations, even as small as 100 nm, do not degrade the array.
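To make the phased-array idea concrete, the following minimal sketch applies the textbook relation for a uniform one-dimensional array, sin θ = λΔφ / (2πd), where Δφ is the phase step between neighboring antennas and d is their pitch. The 1550 nm wavelength and 2 μm pitch are illustrative assumptions only; they are not SWEEPER's published design values, and the function names are hypothetical.

```python
import math


def steering_angle_deg(wavelength_m, pitch_m, phase_step_rad):
    """Beam angle of a uniform 1D array: sin(theta) = lambda * dphi / (2 * pi * d)."""
    s = wavelength_m * phase_step_rad / (2.0 * math.pi * pitch_m)
    if abs(s) > 1.0:
        raise ValueError("phase step too large: no propagating main beam")
    return math.degrees(math.asin(s))


def unambiguous_fov_deg(wavelength_m, pitch_m):
    """Angular window between adjacent grating lobes: 2 * asin(lambda / (2 * d))."""
    s = wavelength_m / (2.0 * pitch_m)
    return 180.0 if s >= 1.0 else 2.0 * math.degrees(math.asin(s))


def phase_error_deg(path_error_m, wavelength_m):
    """Phase error (degrees) produced by a free-space optical path-length error."""
    return 360.0 * path_error_m / wavelength_m


if __name__ == "__main__":
    wavelength = 1.55e-6  # ASSUMED: 1550 nm telecom wavelength
    pitch = 2.0e-6        # ASSUMED: "a few microns" taken as 2 um

    print("steer at quarter-wave phase step:",
          round(steering_angle_deg(wavelength, pitch, math.pi / 2), 2), "deg")
    print("unambiguous field of view:",
          round(unambiguous_fov_deg(wavelength, pitch), 1), "deg")
    print("phase error from 100 nm path error:",
          round(phase_error_deg(100e-9, wavelength), 1), "deg")
```

Under these assumed numbers, a 100 nm path error already shifts an element's phase by roughly 23°, which suggests why perturbations at that scale matter. The sketch also shows that the unambiguous field of view widens as the pitch shrinks toward half a wavelength.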

But while SWEEPER miniaturizes the optical beam-steering function, many more components are necessary to create a true chip-based scanning lidar system. Towards that end, DARPA's Electronic-Photonic Heterogeneous Integration (E-PHI) program aims to use standard semiconductor manufacturing processes (that solve lattice mismatch issues) to combine antennas, optical amplifiers, modulators, detectors, and even a miniature light source to form a complete lidar system (see Fig. 1). The light source was developed by University of California, Santa Barbara (UCSB) researchers through indium arsenide layer growth in the form of quantum dots.2

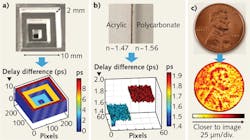

Unfortunately, the status of DARPA's chip-scale lidar, like many U.S. government projects, is on a "need-to-know" basis. However, optical-phased-array technology is also integral to the California Institute of Technology (Caltech; Pasadena, CA) nanophotonic coherent imager, a miniature 3D imager built around a 4 × 4 array of silicon photonics antennas. The antennas couple light from an object (illuminated by coherent light from a 1550 nm laser diode) into nanophotonic waveguide gratings that convert the optical signal (approximately 4 mW per pixel) to phase and intensity information, which is used to build a 3D lidar image with a 15 μm spatial resolution at 0.5 m distances (see Fig. 2).3 Although Caltech's device contains 16 pixels in a 300 × 300 μm footprint, tens of thousands of these pixels (and a higher-intensity source) would be needed for long-range lidar applications for larger objects.

Light source leaps

Although an autonomous vehicle is certainly large enough to physically accommodate benchtop-sized lidar, extending driverless capability to the masses also depends on cost. To that end, expensive diode-pumped solid-state (DPSS) and fiber laser lidar sources must eventually yield to more compact alternatives such as semiconductor-laser-based flash lidar systems from TriLumina (Albuquerque, NM) using vertical-cavity surface-emitting lasers (VCSELs), standard eye-safe telecom-grade laser diodes as we saw in the Caltech implementation, or even on-chip quantum-dot or other integrated sources being pursued by DARPA.

But just how powerful does a light source need to be to deliver the required ranging sensitivity? Of course, the answer is application-specific. The hundreds-of-milliwatts light source needed to image a penny at 0.5 m is drastically different from the tens-of-watts and even kilowatt sources, respectively, that let an autonomous vehicle see 20 m ahead or an aircraft see a Humvee from 30,000 feet.

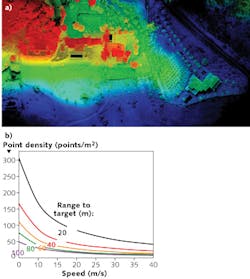

For example, an alternative to optical-phased-array lidar is single-photon lidar, such as the reported 16,384-pixel device developed by MIT's Lincoln Laboratory (Lexington, MA) that can image 600 m² of a landscape at 10,000 feet with 30 cm resolution.4 Licensed to Princeton Lightwave (Cranbury, NJ) and Spectrolab (Sylmar, CA), the first commercial shoebox-sized, $150,000 units are a far cry from the ubiquitous lidar goal and require sub-kilowatt-level laser sources.

Towards ubiquity

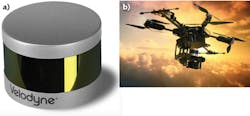

Fortunately, centimeter resolution at tens of thousands of feet addresses a relatively low-volume market and is overkill for many applications such as self-driving cars and low-altitude aerial mapping.

Incorporating a 905 nm, class 1 eye-safe laser source, the low-cost Velodyne VLP-16 lidar system follows a trajectory of product development that has enabled lidar system prices to drop tenfold in the past seven years, according to Velodyne director of sales and marketing Wolfgang Juchmann.7 At that rate, will we see another tenfold reduction to $800 lidar in another seven years? Quanergy Systems (Sunnyvale, CA), recipient of the Frost & Sullivan 2015 Global Light Detection and Ranging Entrepreneurial Company of the Year Award, says no: it can do even better.

Quanergy plans to debut a credit-card-sized $250 lidar system at the Consumer Electronics Show in January 2016.8 This 300-m-range, centimeter-accurate, 360°, and all-weather-capable lidar is geared towards vehicle safety and autonomous driving. "With our mechanical lidar, we broke the $1000 barrier, and with our solid-state lidar, we will break the $100 barrier," says Louay Eldada, Quanergy co-founder and CEO. While Quanergy cannot yet comment on the details of its solid-state design, it plans to discreetly incorporate the technology into autonomous automobiles without affecting aesthetic design (see Fig. 5).

Also small (2 in.³) is a below-$100 lidar module from Phantom Intelligence (Quebec, QC, Canada), anticipated to debut in 2016. In a close analog to a 3D camera, the flash-lidar-based technology (with no moving parts) uses a single diffused laser beam to illuminate the scene and multiple receiver elements to reconstruct the image in a 30° × 8° swath at a range of up to 30 m.
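The range and accuracy figures quoted above can be related to timing through the basic pulsed time-of-flight relation, t = 2R / c. The short sketch below assumes direct pulsed time-of-flight ranging, which neither vendor's design is confirmed here to use, and the function names are hypothetical.

```python
C_M_PER_S = 299_792_458.0  # speed of light in vacuum


def round_trip_time_s(range_m):
    """Time for a pulse to reach a target at range_m and return."""
    return 2.0 * range_m / C_M_PER_S


def timing_resolution_s(range_resolution_m):
    """Timing precision needed to resolve a given range difference."""
    return 2.0 * range_resolution_m / C_M_PER_S


if __name__ == "__main__":
    # Ranges taken from the text: 30 m (flash module) and 300 m (scanning unit).
    for range_m in (30.0, 300.0):
        print(f"{range_m:>5.0f} m -> {round_trip_time_s(range_m) * 1e9:.1f} ns round trip")
    # "Centimeter-accurate" is taken here as 1 cm (an assumption).
    print(f"1 cm -> {timing_resolution_s(0.01) * 1e12:.1f} ps timing resolution")
```

Under these assumptions, a 30 m target returns in about 200 ns, a 300 m target in about 2 μs, and 1 cm of range corresponds to roughly 67 ps of timing, which hints at the demands placed on the receiver electronics.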

Laser diodes from Osram Opto Semiconductors (Regensburg, Germany) power the Phantom Intelligence system, operating at 905 nm with 70 W peak power, a 10 kHz pulse frequency, and 40 ns pulse width. Packaged laser diode modules have an integrated driver, and bare chips can achieve > 70 W depending on drive current. Osram is working with key component suppliers to improve its lidar-specific laser diodes, whether through improved integration with microelectromechanical systems (MEMS) components or improved optical designs to reduce coupling losses. A reliable lidar source is then complemented by digital signal processing that maximizes the detection range of the lidar system, eliminates false detection events, and allows improved detection in inclement conditions.
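The pulse figures quoted above (70 W peak, 40 ns, 10 kHz) can be turned into pulse energy and average power with simple arithmetic. This sketch assumes an idealized rectangular pulse and reads the 10 kHz figure as the pulse repetition rate; both are assumptions, and the function names are hypothetical.

```python
def pulse_energy_j(peak_power_w, pulse_width_s):
    """Energy per pulse for an idealized rectangular pulse."""
    return peak_power_w * pulse_width_s


def duty_cycle(pulse_width_s, rep_rate_hz):
    """Fraction of time the laser is emitting."""
    return pulse_width_s * rep_rate_hz


def average_power_w(peak_power_w, pulse_width_s, rep_rate_hz):
    """Average optical power = peak power x duty cycle."""
    return peak_power_w * duty_cycle(pulse_width_s, rep_rate_hz)


if __name__ == "__main__":
    peak_w, width_s, rate_hz = 70.0, 40e-9, 10e3  # values quoted in the text
    print(f"pulse energy : {pulse_energy_j(peak_w, width_s) * 1e6:.2f} uJ")
    print(f"duty cycle   : {duty_cycle(width_s, rate_hz) * 100:.3f} %")
    print(f"average power: {average_power_w(peak_w, width_s, rate_hz) * 1e3:.1f} mW")
```

With these inputs the sketch gives about 2.8 μJ per pulse, a 0.04% duty cycle, and roughly 28 mW of average power, illustrating how a high peak power can coexist with a low average output.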

Phantom Intelligence acknowledges that many applications do not need very fine angular resolution, and that multiple sensors could be strategically placed on a vehicle to provide 360° coverage, for example. "The density of information has a strong impact, not only on sensor cost, but also on the cost of the required back-end processing hardware," says Phantom Intelligence president Jean-Yves Deschênes. "We feel that our clients should be able to select the resolution, range, and field of view they need to tackle their application objectives and respect their cost constraints."

Deschênes expects that integration techniques and resolution performance of these miniature solid-state lidar systems will continue to progress such that capabilities will eventually be on par with high-end scanned-lidar systems at a fraction of the cost. "One person dies on the road every 25 seconds and autonomous vehicles have proven to be safer. That's just one example of how important it is for photonics professionals to ensure that low-cost, miniature lidar systems succeed in the marketplace."

REFERENCES

1. A. Yaacobi et al., Opt. Lett., 39, 15, 4575–4578 (Aug. 2014).

2. A. Y. Liu et al., Appl. Phys. Lett., 104, 4, 041104 (2014).

3. F. Aflatouni et al., Opt. Express, 23, 4, 5117–5125 (Feb. 2015).

4. See http://bit.ly/1ypyObF.

5. See http://bit.ly/1Ni6xyW.

6. See http://bit.ly/1LXkyR1.

7. See http://bit.ly/1FxJ3iU.

8. See http://on.wsj.com/1HUaZBz.

About the Author

Gail Overton

Senior Editor (2004-2020)

Gail has more than 30 years of engineering, marketing, product management, and editorial experience in the photonics and optical communications industry. Before joining the staff at Laser Focus World in 2004, she held many product management and product marketing roles in the fiber-optics industry, most notably at Hughes (El Segundo, CA), GTE Labs (Waltham, MA), Corning (Corning, NY), Photon Kinetics (Beaverton, OR), and Newport Corporation (Irvine, CA). During her marketing career, Gail published articles in WDM Solutions and Sensors magazine and traveled internationally to conduct product and sales training. Gail received her BS degree in physics, with an emphasis in optics, from San Diego State University in San Diego, CA in May 1986.