A pair of breakthroughs brought high capacity to today’s fiber-optic systems: single-mode transmission at 1300 nm in the 1980s, and the combination of erbium-doped fiber amplifiers and dense wavelength-division multiplexing (DWDM) in the 1550 nm band in the 1990s. But since the turn of the century the peak data rate on single optical channels in commercial systems has remained stalled at 10 Gbit/s.

Now the technical barriers to transmitting 40 Gbit/s data streams on single channels have fallen. Serious commercial deployment of 40 Gbit/s systems started last year, with AT&T launching coast-to-coast connections and Verizon starting 40 Gbit/s router-to-router transmission on heavily trafficked Internet routes. In November, Verizon reported transmitting live video traffic in a 100 Gbit/s field trial with Alcatel-Lucent equipment on a 504 km route between Miami and Tampa in Florida, the first of its kind, and talked of commercial 100 Gbit/s deployment in a couple of years.

40 Gigabits and beyond

The step from 10 to 40 Gbit/s transmission was a logical one, following the standard factor-of-four increase in backbone data rates in the Optical Transport Network (OTN) standards developed by the International Telecommunication Union. But a number of problems slowed adoption of the technology. Static dispersion management was needed to control the factor-of-16 increase in dispersion sensitivity arising from the fourfold decrease in pulse length and the fourfold increase in spectral bandwidth. Polarization-mode dispersion also required management. Multiplying the bit rate by four required a 6 dB increase in optical signal-to-noise ratio. And costs had to be controlled.
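The two scaling figures quoted above follow from simple arithmetic. The sketch below is a rough illustration, assuming that the required optical signal-to-noise ratio grows linearly with bit rate and that dispersion sensitivity grows as the product of the pulse-shortening factor and the spectral-broadening factor. The function names are invented for this example and are not taken from any standard or library.

```python
import math


def osnr_increase_db(rate_ratio: float) -> float:
    """Extra OSNR in dB when the bit rate is multiplied by rate_ratio.

    Assumption: required OSNR scales linearly with bit rate.
    """
    return 10 * math.log10(rate_ratio)


def dispersion_sensitivity_factor(rate_ratio: float) -> float:
    """Growth in chromatic-dispersion sensitivity.

    Pulses get shorter by rate_ratio and the spectrum gets wider by
    rate_ratio, so the two effects multiply.
    """
    return rate_ratio * rate_ratio


ratio = 40 / 10  # stepping from 10 to 40 Gbit/s
print(round(osnr_increase_db(ratio), 2))     # 6.02
print(dispersion_sensitivity_factor(ratio))  # 16.0
```

Under the same assumptions, a further step from 40 to 100 Gbit/s (a ratio of 2.5) would add roughly another 4 dB of OSNR requirement for a binary format, which is part of why multilevel formats are attractive at that rate.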

Using phase modulation rather than the intensity modulation used at lower data rates has also helped, says Glenn Wellbrock, director of backbone network design for Verizon. Its system uses differential phase-shift keying (DPSK) to code binary data as a phase shift between successive bits of 0 or π radians. This helps fit a 40 Gbit/s signal into a 50 GHz DWDM channel slot, so Verizon could drop its 40 Gbit/s lines into open channels in fibers already carrying many 10 Gbit/s signals. Most other 40 Gbit/s systems use formats that fit into 50 GHz channels.
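The differential idea behind DPSK can be sketched in a few lines. This is a toy model rather than a description of Verizon's equipment: it assumes that a 1 bit is sent as a π phase change and a 0 bit as no change (the opposite convention works equally well), and it ignores noise, pulse shaping, and the optical delay interferometer a real receiver would use. The function names are hypothetical.

```python
import math


def dpsk_encode(bits, initial_phase=0.0):
    """Return the optical phase of each symbol, starting with a reference symbol.

    Assumed convention: 1 -> phase changes by pi, 0 -> phase unchanged.
    """
    phases = [initial_phase]
    for bit in bits:
        shift = math.pi if bit else 0.0
        phases.append((phases[-1] + shift) % (2 * math.pi))
    return phases


def dpsk_decode(phases):
    """Recover bits by comparing each symbol's phase with the previous one."""
    bits = []
    for previous, current in zip(phases, phases[1:]):
        difference = current - previous
        # cos(difference) is near -1 for a pi shift and near +1 for no shift
        bits.append(1 if math.cos(difference) < 0 else 0)
    return bits


data = [1, 0, 1, 1, 0, 0, 1]
assert dpsk_decode(dpsk_encode(data)) == data
# The absolute starting phase does not matter, only the differences do
assert dpsk_decode(dpsk_encode(data, initial_phase=1.234)) == data
```

Because the receiver only compares neighboring symbols, it does not need an absolute phase reference, which is what lets DPSK work with direct rather than coherent detection.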

Many developers have tested 80 Gbit/s transmission, and that rate can be used for optical interconnects, but the next standardized bit rate will likely be 100 Gbit/s. ITU’s Telecommunications Standards group is developing a new OTN standard for backbone transmission at that rate, and the IEEE P802.3ba Higher Speed Ethernet Task Force is working on 40 and 100 Gbit/s standards for local transport. In practice, the line rates include error correction and overhead bits, so they will be about 107 Gbit/s for Ethernet and somewhat higher for OTN, which adds further overhead to manage transmission.

Developers have demonstrated 100 Gbit/s transmission using a number of techniques for electronic time-division multiplexing (ETDM) of input data streams. Laboratory experiments have transported data at bit rates of 640 Gbit/s and beyond, but only by using elaborate optical time-division multiplexing (OTDM) techniques that optically interleave streams of specially shortened pulses generated at lower repetition rates [1]. Modulating the pulses with live data is a challenge, and optically demultiplexing requires complex and expensive hardware, so practical development is focusing on ETDM for 100 Gbit/s.

Challenges at 100 Gbit/s

Binary ETDM has been demonstrated in the laboratory at 80 to 107 Gbit/s, but that speed poses serious technology challenges for transmitters, receivers, and fiber dispersion management.

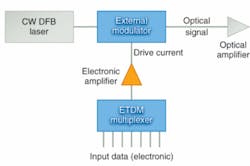

The transmitter limitations come from both the electronic drive circuits and the external modulator that generates the optical signal. The input signals are electrically multiplexed and amplified to several volts to drive the modulator (see Fig. 1). Silicon germanium circuits have reached rates of 132 Gbit/s, but packaging has limited device bandwidths to 107 Gbit/s, write Eugen Lach and Karsten Schuh of Alcatel-Lucent Bell Labs Germany (Stuttgart, Germany) in a review paper [2]. That limit affects both the electronic multiplexing and the amplification stages of the modulator driver.

The challenges for modulators are achieving fast response and high contrast ratios at low drive voltage. Drive voltage is a problem for conventional lithium niobate Mach-Zehnder electro-optic modulators, but electro-absorption modulators have modulation coefficients reaching 20 dB/V [3]. Polymer Mach-Zehnder electro-optic modulators promise high speed or low voltage, but have yet to combine the two (see www.laserfocusworld.com/articles/317056).

Integrated receivers have been demonstrated at data rates to 107 Gbit/s, but the technology remains challenging [4]. And at such high bit rates dynamic dispersion compensation is needed to control for the variation of chromatic dispersion with temperature, even in buried cables, as well as to control polarization-mode dispersion. According to Lach and Schuh, the limitations of binary transmission make an 80 Gbit/s binary system more vulnerable to dispersion than would be expected from simply doubling the data rate.

High-bit-rate experiments

A number of 100 Gbit/s ETDM experiments were reported at the postdeadline sessions of last year’s Optical Fiber Communication Conference and the European Conference on Optical Communication, and more are on the agenda of this month’s OFC 2008 (San Diego, CA). Some rely on binary coding; others on multilevel modulation.

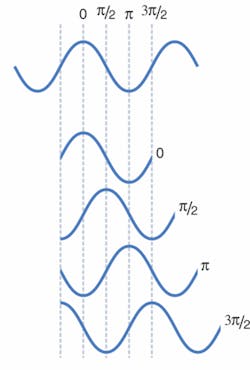

Binary coding has a long and successful track record, and is relatively simple to implement in transmitters and receivers because signals have only two levels. Sophisticated binary formats such as DPSK or duobinary modulation reduce the required bandwidth, simplifying logistics and reducing dispersion effects [5]. However, binary modulation requires one symbol per bit, putting heavy demands on bandwidth. Laboratory experiments have demonstrated 107 Gbit/s transmission [6]. Yet those results are pushing limits, and Lach and Schuh conclude that “a binary 160 Gbit/s ETDM system is currently out of reach with existing electronic and optoelectronic technologies.”

Multilevel modulation can circumvent that problem. The best-developed multilevel modulation scheme for high-speed fiber optics is differential quadrature phase-shift keying (DQPSK). Instead of coding bit intervals as 1 or 0, DQPSK codes a phase shift of 0, +π/2, +π, or +3π/2 radians: four possible values corresponding to two bits (see Fig. 2). Using DQPSK allows electronics that are bandwidth-limited to a 50 gigabaud symbol rate to transmit 100 Gbit/s, twice the bit rate of a binary code, at the cost of more-complex transmitters and receivers. Adding polarization modulation would give eight values corresponding to three bits, but may require coherent detection.
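The relationship between symbol rate, number of levels, and bit rate can be made concrete with a toy DQPSK coder. The sketch below is illustrative only: the Gray-coded assignment of bit pairs to phase steps is an assumption (the article does not say which pairs map to which shifts), the function names are hypothetical, and noise and optical hardware are ignored.

```python
import math

# Assumed Gray mapping of bit pairs to phase steps, in units of pi/2
DIBIT_TO_STEP = {(0, 0): 0, (0, 1): 1, (1, 1): 2, (1, 0): 3}
STEP_TO_DIBIT = {step: dibit for dibit, step in DIBIT_TO_STEP.items()}


def dqpsk_encode(bits):
    """Return symbol phases (radians), starting with a reference symbol at 0."""
    if len(bits) % 2:
        raise ValueError("DQPSK carries two bits per symbol; need an even count")
    quadrant = 0
    quadrants = [quadrant]
    for i in range(0, len(bits), 2):
        quadrant = (quadrant + DIBIT_TO_STEP[(bits[i], bits[i + 1])]) % 4
        quadrants.append(quadrant)
    return [q * math.pi / 2 for q in quadrants]


def dqpsk_decode(phases):
    """Recover bits from the phase difference between successive symbols."""
    bits = []
    for previous, current in zip(phases, phases[1:]):
        step = round((current - previous) / (math.pi / 2)) % 4
        bits.extend(STEP_TO_DIBIT[step])
    return bits


def bit_rate_gbps(symbol_rate_gbaud, levels):
    """Bit rate = symbol rate x bits per symbol, where bits = log2(levels)."""
    return symbol_rate_gbaud * math.log2(levels)


data = [0, 0, 0, 1, 1, 1, 1, 0, 1, 1]
assert dqpsk_decode(dqpsk_encode(data)) == data
print(bit_rate_gbps(50, 2))  # 50.0  (binary at 50 gigabaud)
print(bit_rate_gbps(50, 4))  # 100.0 (DQPSK at 50 gigabaud)
```

Ten data bits become only five phase transitions, which is the sense in which DQPSK halves the symbol rate the electronics must handle for a given bit rate.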

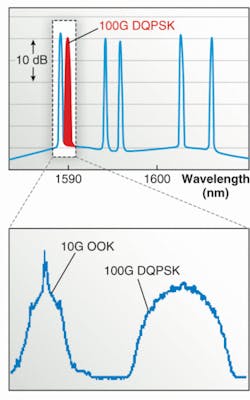

The 100 Gbit/s Verizon field trial used DQPSK to more than double the design bit rate of a 40 Gbit/s Alcatel-Lucent 1625 LambdaXtreme Transport system, Peter Winzer of Alcatel-Lucent Bell Labs and colleagues will report this month at OFC [7]. The signals could be transmitted on a 50 GHz grid. Fig. 3 compares the 100 Gbit/s signal with 10 Gbit/s signals transmitted through the same fiber at other wavelengths. Differential quadrature phase-shift keying is used commercially in some applications, and has been used to squeeze huge data rates through fibers in a series of hero experiments.

Outlook

Going from binary modulation to a more sophisticated multilevel modulation scheme is a paradigm shift comparable to that caused by the erbium-fiber amplifier, says Peter Andrekson of Chalmers University (Göteborg, Sweden). Multilevel modulation improves spectral efficiency and dispersion tolerance, and relaxes optical and electronic bandwidth requirements. Coherent detection is not required for all multilevel modulation, but it may offer important benefits, and thanks to the advances in electronics, Andrekson says, coherent modulation will be much easier to implement than it was in the 1980s.

Market needs and technology capabilities will shape the course of high-bit-rate transmission. Verizon expects 40 Gbit/s systems to reduce latency and jitter, improving system performance as well as increasing capacity, and expects to see similar benefits at higher speeds. Standards writers have already penciled in 100 Gbit/s as the next logical step up in data rate both for Ethernet networks and long-haul backbone transmission. The two applications differ in detail and are at different layers of the telecommunications network, but it is important that Ethernet packets can be smoothly packaged into the signals transmitted between routers on backbone networks. “We feel there’s a place for both 40 and 100 Gbit/s,” says Wellbrock of Verizon. He adds that coherent detection may be added to improve performance of long-haul 100 Gbit/s systems when they are deployed in 2010 or so. In the long term, it will all come down to cost and performance.

REFERENCES

1. A.I. Siahlo et al., CLEO 2005, paper CTuO1.

2. E. Lach and K. Schuh, J. Lightwave Tech. 24, 4455 (December 2006).

3. Y. Yu et al., OFC 2005, paper OWE1.

4. C. Schubert et al., J. Lightwave Tech. 25, 122 (January 2007).

5. T.J. Xia et al., paper to be presented at OFC 2008.

6. P.J. Winzer and R.-J. Essiambre, Proc. IEEE 94, 952 (May 2006).

7. P.J. Winzer, G. Raybon, and C.R. Doerr, Proc. European Conf. on Optical Communications (ECOC’06), Cannes, France, paper Tu1.5.1 (2006).