MIT polarization algorithms increase 3D imaging resolution 1000X

Massachusetts Institute of Technology (MIT; Cambridge, MA) researchers have shown that by exploiting the polarization of light, they can increase the resolution of conventional 3D imaging devices as much as 1000 times. The technique could lead to high-quality 3D cameras built into cell phones, and perhaps to the ability to snap a photo of an object and then use a 3D printer to produce a replica. Further out, the work could also assist the development of driverless cars.


The researchers describe the new system, which they call Polarized 3D, in a paper they're presenting at the International Conference on Computer Vision in December.

Polarization affects the way light bounces off physical objects. If light strikes an object squarely, much of it will be absorbed, but whatever reflects back will have the same mix of polarizations that the incoming light did. At wider angles of reflection, however, light within a certain range of polarizations is more likely to be reflected.

This is why polarized sunglasses are good at cutting out glare: Light from the sun bouncing off asphalt or water at a low angle features an unusually heavy concentration of light with a particular polarization. So the polarization of reflected light carries information about the geometry of the objects it has struck.
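The angle dependence described above follows from the standard Fresnel equations for a smooth dielectric surface. The sketch below is only an illustration, not part of the MIT work: the function names are hypothetical, and the refractive index of 1.33 (roughly that of water) is an assumed value. It computes how strongly each of the two polarization components is reflected at a given angle of incidence.

```python
import numpy as np

def fresnel_reflectance(theta_i_deg, n1=1.0, n2=1.33):
    """Return (R_s, R_p): power reflectance of the two polarization
    components for light passing from index n1 to index n2.
    n2 = 1.33 (about water) is an assumed example value."""
    theta_i = np.radians(theta_i_deg)
    cos_i = np.cos(theta_i)
    # Snell's law gives the refraction angle in the second medium.
    sin_t = n1 / n2 * np.sin(theta_i)
    cos_t = np.sqrt(1.0 - sin_t**2)
    r_s = (n1 * cos_i - n2 * cos_t) / (n1 * cos_i + n2 * cos_t)
    r_p = (n2 * cos_i - n1 * cos_t) / (n2 * cos_i + n1 * cos_t)
    return r_s**2, r_p**2

def degree_of_polarization(theta_i_deg, n1=1.0, n2=1.33):
    """Fraction of reflected unpolarized light that ends up polarized."""
    R_s, R_p = fresnel_reflectance(theta_i_deg, n1, n2)
    return (R_s - R_p) / (R_s + R_p)

if __name__ == "__main__":
    # Head-on light (0 degrees) stays unpolarized; oblique light does not.
    for angle in (0, 30, 53, 70):
        print(angle, round(float(degree_of_polarization(angle)), 3))
```

At normal incidence the two components reflect equally, so the reflected light keeps its original mix. Near the Brewster angle one component almost vanishes, which is the glare that polarized sunglasses block.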

This relationship has been known for centuries, but it's been hard to do anything with it, because of a fundamental ambiguity about polarized light. Light with a particular polarization, reflecting off a surface with a particular orientation and passing through a polarizing lens, is indistinguishable from light with the opposite polarization, reflecting off a surface with the opposite orientation.

This means that for any surface in a visual scene, measurements based on polarized light offer two equally plausible hypotheses about its orientation. Canvassing all the possible combinations of either of the two orientations of every surface, in order to identify the one that makes the most sense geometrically, is a prohibitively time-consuming computation.
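To get a feel for the scale of that search, suppose each pixel is treated as one surface patch with two candidate orientations, so that N pixels yield 2^N joint hypotheses. The helper below is a hypothetical illustration (its name and the 640 x 480 image size are assumptions, not figures from the paper) that reports how many decimal digits that count has.

```python
import math

def hypothesis_count_digits(num_surfaces):
    """Number of decimal digits in 2**num_surfaces, the count of joint
    orientation hypotheses when every surface has two candidates."""
    return math.floor(num_surfaces * math.log10(2)) + 1

if __name__ == "__main__":
    # Assumed example: one surface patch per pixel of a 640 x 480 image.
    print(hypothesis_count_digits(640 * 480))
```

For that assumed image size, the number of combinations has more than 92,000 digits, far beyond anything an exhaustive search could cover.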

To resolve this ambiguity, the MIT Media Lab researchers use coarse depth estimates provided by some other method, such as the time a light signal takes to reflect off an object and return to its source. Even with this added information, calculating surface orientation from measurements of polarized light is complicated, but it can be done in real time by a graphics processing unit, the type of special-purpose graphics chip found in most video game consoles.
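One simple way to picture the idea is sketched below. It is not the researchers' algorithm, which the article does not spell out; the function names, the image-axis convention, and the nearest-candidate rule are all assumptions made for illustration. The sketch takes a per-pixel surface azimuth that polarization has pinned down only up to a 180-degree flip, derives a rough azimuth from the slope of the coarse depth map, and keeps whichever of the two candidates lies closer to it.

```python
import numpy as np

def coarse_azimuth_from_depth(depth):
    """Rough surface-normal azimuth from a coarse depth map z(x, y).
    Assumes x runs along columns and y along rows."""
    dz_dy, dz_dx = np.gradient(np.asarray(depth, dtype=float))
    # The normal of z(x, y) is proportional to (-dz/dx, -dz/dy, 1).
    return np.arctan2(-dz_dy, -dz_dx)

def wrap_angle(angle):
    """Wrap angles into the interval (-pi, pi]."""
    return np.angle(np.exp(1j * angle))

def disambiguate_azimuth(pol_azimuth, depth):
    """Pick pol_azimuth or pol_azimuth + pi per pixel, whichever is
    closer to the azimuth implied by the coarse depth map."""
    coarse = coarse_azimuth_from_depth(depth)
    pol_azimuth = np.asarray(pol_azimuth, dtype=float)
    candidates = np.stack([pol_azimuth, pol_azimuth + np.pi])
    # Wrapped angular distance of each candidate from the coarse guess.
    distance = np.abs(wrap_angle(candidates - coarse))
    pick = np.argmin(distance, axis=0)
    chosen = np.take_along_axis(candidates, pick[None], axis=0)[0]
    return wrap_angle(chosen)
```

Because each pixel is settled by a single comparison, the work grows linearly with the number of pixels rather than exponentially, and it maps naturally onto parallel hardware such as a GPU.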

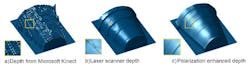

The researchers' experimental setup consisted of a Microsoft Kinect—which gauges depth using reflection time—with an ordinary polarizing photographic lens placed in front of its camera. In each experiment, the researchers took three photos of an object, rotating the polarizing filter each time, and their algorithms compared the light intensities of the resulting images.
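The article does not give the filter angles or the fitting procedure the researchers used. As a hedged illustration, the sketch below assumes an ideal linear polarizer set to 0, 45, and 90 degrees and applies the textbook relationship between transmitted intensity and filter angle, I(φ) = ½(S0 + S1 cos 2φ + S2 sin 2φ), to recover the degree and angle of linear polarization at each pixel. The function names are hypothetical.

```python
import numpy as np

def simulate_intensity(filter_angle, s0, dolp, aolp):
    """Intensity behind an ideal linear polarizer at filter_angle (radians)
    for light with total intensity s0, degree of linear polarization dolp,
    and polarization angle aolp."""
    return 0.5 * s0 * (1.0 + dolp * np.cos(2.0 * (filter_angle - aolp)))

def polarization_from_three_images(i0, i45, i90):
    """Recover (dolp, aolp) per pixel from images taken with the filter
    at the assumed angles of 0, 45, and 90 degrees."""
    i0, i45, i90 = (np.asarray(x, dtype=float) for x in (i0, i45, i90))
    s0 = i0 + i90            # total intensity
    s1 = i0 - i90            # horizontal versus vertical preference
    s2 = 2.0 * i45 - s0      # diagonal preference
    dolp = np.sqrt(s1**2 + s2**2) / np.maximum(s0, 1e-12)
    aolp = 0.5 * np.arctan2(s2, s1)  # radians, defined only modulo pi
    return dolp, aolp

if __name__ == "__main__":
    angles = np.radians([0.0, 45.0, 90.0])
    shots = [simulate_intensity(a, 1.0, 0.3, 0.4) for a in angles]
    print(polarization_from_three_images(*shots))
```

The recovered angle is defined only modulo 180 degrees, which is the two-way ambiguity that the coarse depth data is used to resolve.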

On its own, at a distance of several meters, the Kinect can resolve physical features as small as a centimeter or so across. But with the addition of the polarization information, the researchers' system could resolve features in the range of tens of micrometers, or one-thousandth the size.

For comparison, the researchers also imaged several of their test objects with a high-precision laser scanner, which requires that the object be inserted into the scanner bed. Polarized 3D still offered the higher resolution.

A mechanically rotated polarization filter would probably be impractical in a cellphone camera, but grids of tiny polarization filters that can overlay individual pixels in a light sensor are commercially available.

The new paper also offers the tantalizing prospect that polarization systems could aid the development of self-driving cars. Today's experimental self-driving cars are, in fact, highly reliable under normal illumination conditions, but their vision algorithms go haywire in rain, snow, or fog. That’s because water particles in the air scatter light in unpredictable ways, making it much harder to interpret.

The MIT researchers show that in some very simple test cases—which have nonetheless bedeviled conventional computer vision algorithms—their system can exploit information contained in interfering waves of light to handle scattering.

"The work fuses two 3-D sensing principles, each having pros and cons," says Yoav Schechner, an associate professor of electrical engineering at Technion - Israel Institute of Technology in Haifa, Israel. "One principle provides the range for each scene pixel: This is the state of the art of most 3-D imaging systems. The second principle does not provide range. On the other hand, it derives the object slope, locally. In other words, per scene pixel, it tells how flat or oblique the object is." Schechner explains, "Because this approach practically overcomes ambiguities in polarization-based shape sensing, it can lead to wider adoption of polarization in the toolkit of machine-vision engineers."

SOURCE: MIT; http://news.mit.edu/2015/algorithms-boost-3-d-imaging-resolution-1000-times-1201

About the Author

Gail Overton

Senior Editor (2004-2020)
