ROBOTS AND VISION: Super-resolution boosts flash-ladar accuracy

Mobile robots have to see somehow. For applications such as urban warfare, where obstacles exist at many different heights and a robot needs at least some autonomy, flash ladar (laser detection and ranging) is turning out to be one of the most promising approaches to vision. Flash ladar uses pulses of diffuse near-infrared light and an imaging sensor to gather 3D information by time of flight; intensity information can also be collected if desired.

But flash-ladar devices use focal-plane arrays, which are typically limited to something on the order of 256 × 256 pixels, a resolution that needs to be improved for practical use. One way to do this is "super-resolution," a process in which multiple images are taken with sub-pixel-sized shifts between them and the resulting data are processed to create a single super-resolved image.

Researchers at the Army Research Laboratory (Adelphi, MD), the National Institute of Standards and Technology (Gaithersburg, MD), and the Night Vision and Electronic Sensors Directorate (Fort Belvoir, VA) experimentally evaluated the effectiveness of this technique, finding that it substantially improves target discrimination [1].

Handheld camera

The experimental system was based on a bank of 55 LEDs for illumination, emitting 850 nm pulses at 20 MHz, and a detector with 176 × 144 pixels behind a lens with an f-number of 1.4. Each pixel was larger than the diffraction spot of the optics, so resolution was limited by the detector sampling rather than by the lens.
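As a rough check on that last point, the diffraction spot of the lens can be estimated from the wavelength and f-number given above. The sketch below assumes the "diffraction spot" is the Airy-disk diameter (first dark ring to first dark ring), which is one common definition; the function name is hypothetical, and the article does not give the pixel pitch, so none is assumed here.

```python
def airy_disk_diameter_um(wavelength_um, f_number):
    """Airy-disk diameter (first null to first null) for an ideal circular aperture."""
    return 2.44 * wavelength_um * f_number

# Values from the article: 850 nm illumination, f/1.4 lens.
spot_um = airy_disk_diameter_um(0.850, 1.4)
print(f"diffraction spot diameter: {spot_um:.2f} um")  # prints 2.90 um
```

Any detector whose pixels are larger than a few micrometers would therefore be sampling-limited under this assumption, which is the regime in which sub-pixel shifts between frames carry new information.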

Successful super-resolution requires a number of images taken with pixel shifts that are not an integral multiple of the pixel size; in the experiment, the sub-pixel shifts between frames were acquired as a result of natural motions of the camera, which was handheld. The camera was assumed to translate in x and y, but not to rotate significantly. Only range data (not intensity) were used in the study.
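Why the shifts must not be whole pixels can be seen in a minimal shift-and-add sketch. This is an illustrative stand-in, not the algorithm used in the study: it assumes the shifts are already known, lie between 0 and 1 pixel, and that each low-resolution pixel is a point sample of the scene (real pixels integrate over their area). The function name, the toy scene, and the upsampling factor of 2 are all assumptions.

```python
import numpy as np

def shift_and_add(frames, shifts, factor):
    """Interleave low-res frames onto a grid `factor` times finer, averaging overlaps.

    frames: sequence of equally shaped 2D arrays
    shifts: (dy, dx) per frame in low-res pixels, each assumed to lie in [0, 1)
    factor: integer upsampling factor (an illustrative choice)
    Returns the fine-grid image (NaN where no sample landed) and a filled mask.
    """
    h, w = frames[0].shape
    acc = np.zeros((h * factor, w * factor))
    hits = np.zeros_like(acc)
    for frame, (dy, dx) in zip(frames, shifts):
        oy = int(np.floor(dy * factor))  # fine-grid row offset of this frame
        ox = int(np.floor(dx * factor))  # fine-grid column offset of this frame
        acc[oy::factor, ox::factor] += frame
        hits[oy::factor, ox::factor] += 1
    filled = hits > 0
    out = np.full(acc.shape, np.nan)
    out[filled] = acc[filled] / hits[filled]
    return out, filled

# Toy scene sampled four times with half-pixel offsets between frames.
rng = np.random.default_rng(0)
scene = rng.random((8, 8))
shifts = [(0.0, 0.0), (0.0, 0.5), (0.5, 0.0), (0.5, 0.5)]
frames = [scene[int(dy * 2)::2, int(dx * 2)::2] for dy, dx in shifts]

recovered, filled = shift_and_add(frames, shifts, factor=2)
# With no fractional shift, every frame lands on the same fine-grid cells.
_, filled_integer = shift_and_add(frames, [(0.0, 0.0)] * 4, factor=2)
print(filled.mean(), filled_integer.mean())
```

With the half-pixel shifts the four frames fill the entire fine grid and the toy scene is recovered; with no fractional shift only a quarter of the grid receives samples, so the extra frames add no resolution.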

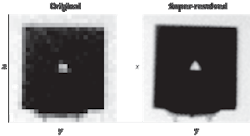

A square board with a 7.5 cm equilateral triangle cut into its center was used as the object; the triangle size was selected to be close to or just beyond the resolution limit (see figure). Experiments were done at distances of 3, 3.5, 4, 4.5, 5, 5.5, and 6 m (all of which are less than the ambiguity range that results from the 20 MHz pulse repetition rate).
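The ambiguity range follows from the requirement that a pulse complete its round trip before the next one is emitted. A short sketch of that arithmetic, assuming the standard relation R = c / (2f) and using a hypothetical function name:

```python
C = 299_792_458.0  # speed of light in vacuum, m/s

def ambiguity_range_m(rep_rate_hz):
    """Maximum unambiguous range: the round trip must fit within one period."""
    return C / (2.0 * rep_rate_hz)

test_distances_m = [3, 3.5, 4, 4.5, 5, 5.5, 6]  # ranges used in the experiment
r_max = ambiguity_range_m(20e6)
print(f"ambiguity range: {r_max:.2f} m")         # prints 7.49 m
print(all(d < r_max for d in test_distances_m))  # prints True
```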

The triangle was oriented in one of four ways (apex up, down, right, or left), following a previously developed experimental approach called triangle-orientation discrimination (TOD) [2]. The ultimate sensor in TOD is a human, who in the study observed the resulting flash-ladar image and attempted to discern the orientation of the triangle.

The integration time for the flash-ladar camera was set so that the camera received enough light but did not saturate, with integration times falling somewhere between 15 and 25 ms, depending on the range. Each captured image in a frame series was preprocessed to emphasize edges; the super-resolution algorithm was then applied to the series, using the preprocessed image data only to determine the amount of sub-pixel shift for each image. The unprocessed images were used for the rest of the algorithm.
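The registration step can be sketched as follows. The article does not say which edge filter or shift estimator the researchers used, so everything here is an assumption chosen for simplicity: edges are emphasized with the squared gradient magnitude, and the shift is found by a first-order (gradient-based) least-squares fit that is valid only for shifts well under a pixel. The Gaussian test image and all function names are hypothetical.

```python
import numpy as np

def emphasize_edges(img):
    """Squared gradient magnitude: a simple, smooth edge-emphasis filter (assumed)."""
    gy, gx = np.gradient(img.astype(float))
    return gy**2 + gx**2

def estimate_shift(ref, frame):
    """Least-squares estimate of (dy, dx) with frame(y, x) ~ ref(y - dy, x - dx).

    Uses the first-order expansion ref - frame ~ dy * d/dy + dx * d/dx,
    with the gradient taken on the average of the two images.
    """
    gy, gx = np.gradient(0.5 * (ref + frame))
    A = np.column_stack([gy.ravel(), gx.ravel()])
    b = (ref - frame).ravel()
    (dy, dx), *_ = np.linalg.lstsq(A, b, rcond=None)
    return dy, dx

def blob(cy, cx, size=64, sigma=5.0):
    """Synthetic test image: a Gaussian bump centered at (cy, cx)."""
    y, x = np.mgrid[0:size, 0:size]
    return np.exp(-((y - cy) ** 2 + (x - cx) ** 2) / (2 * sigma**2))

ref = blob(32.0, 32.0)
frame = blob(32.3, 31.8)  # true shift: dy = +0.3, dx = -0.2 pixels

# Register on the edge-emphasized images only.
dy, dx = estimate_shift(emphasize_edges(ref), emphasize_edges(frame))
print(f"estimated shift: dy={dy:.2f}, dx={dx:.2f}")
```

In keeping with the procedure described above, the edge-emphasized images would serve only to produce `dy` and `dx`; the shifts would then be applied to the unprocessed range frames when building the super-resolved image.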

More accurate, and faster too

While the correct TOD rate for single images captured by flash ladar varied from 73% at a 3 m distance to 25% at a 6 m distance (no better than chance for a four-way choice), the TOD rate for the super-resolved images was 100% at most distances, dropping to 95% at 6 m. In addition to identifying the triangle orientations much more accurately in the super-resolved images, the human subjects responded more quickly to them than to the single images: the minimum speedup for all ranges except 6 m was 48% (at 6 m, the triangles were so difficult to discern in the non-super-resolved images that the subjects tended to quickly choose a random orientation).

The authors believe that the best next step is to incorporate super-resolution flash ladar into mobile robots and compare their performance as they navigate obstacle courses to that of robots with regular flash ladar systems, and also to that of robots that have traditional scanning ladar systems (which scan at very high resolution, but only along a single horizontal line). The ultimate result could be a robot light, small, and nimble enough to negotiate indoor as well as outdoor urban environments.

REFERENCES

1. Shuowen Hu et al., Appl. Optics 49, 5, p. 772 (Feb. 10, 2010).

2. P. Bijl and J.M. Valeton, Opt. Eng. 37, p. 1976 (1998).

About the Author

John Wallace

Senior Technical Editor (1998-2022)

John Wallace was with Laser Focus World for nearly 25 years, retiring in late June 2022. He obtained a bachelor's degree in mechanical engineering and physics at Rutgers University and a master's in optical engineering at the University of Rochester. Before becoming an editor, John worked as an engineer at RCA, Exxon, Eastman Kodak, and GCA Corporation.